The Annals of Statistics

2020, Vol. 48, No. 3, 1304–1328

https://doi.org/10.1214/19-AOS1848

© Institute of Mathematical Statistics, 2020

DISTRIBUTION AND CORRELATION-FREE TWO-SAMPLE TEST OF HIGH-DIMENSIONAL MEANS

BY KAIJIE XUE AND FANG YAO

Department of Probability and Statistics, School of Mathematical Sciences, Center for Statistical Science, Peking University,

We propose a two-sample test for high-dimensional means that requires neither distributional nor correlational assumptions, besides some weak conditions on the moments and tail properties of the elements in the random vectors. This two-sample test, based on a nontrivial extension of the one-sample central limit theorem (Ann. Probab. 45 (2017) 2309–2352), provides a practically useful procedure with rigorous theoretical guarantees on its size and power assessment. In particular, the proposed test is easy to compute and does not require the observations to be independently and identically distributed: the random vectors are allowed to have different distributions and arbitrary correlation structures. Further desired features include weaker moment and tail conditions than existing methods, allowance for highly unequal sample sizes, consistent power behavior under fairly general alternatives, and a data dimension allowed to be exponentially high under the umbrella of such general conditions. Simulated and real data examples demonstrate favorable numerical performance over existing methods.

1. Introduction. The two-sample test of high-dimensional means is one of the key issues that has attracted a great deal of attention due to its importance in various applications, including [2–5, 10–12, 19, 24–26, 29] and [21], among others. In this article, we tackle this problem with the theoretical advance brought by a high-dimensional two-sample central limit theorem. Based on this, we propose a new type of testing procedure, called the distribution and correlation-free (DCF) two-sample mean test, which requires neither distributional nor correlational assumptions and thereby greatly enhances its generality in practice.

We denote two samples by $X^n = \{X_1, \ldots, X_n\}$ and $Y^m = \{Y_1, \ldots, Y_m\}$, respectively, where $X^n$ is a collection of mutually independent (not necessarily identically distributed) random vectors in $\mathbb{R}^p$ with $X_i = (X_{i1}, \ldots, X_{ip})'$ and $E(X_i) = \mu^X = (\mu^X_1, \ldots, \mu^X_p)'$, $i = 1, \ldots, n$, and $Y^m$ is defined in a similar fashion with $E(Y_i) = \mu^Y = (\mu^Y_1, \ldots, \mu^Y_p)'$ for all $i = 1, \ldots, m$. The normalized sums $S_n^X$ and $S_m^Y$ are denoted by $S_n^X = n^{-1/2} \sum_{i=1}^n X_i = (S^X_{n1}, \ldots, S^X_{np})'$ and $S_m^Y = m^{-1/2} \sum_{i=1}^m Y_i = (S^Y_{m1}, \ldots, S^Y_{mp})'$, respectively. Note that we only assume independent observations, and that each sample has a common mean. The hypothesis of interest is

$$H_0: \mu^X = \mu^Y \quad \text{v.s.} \quad H_a: \mu^X \neq \mu^Y,$$

and the proposed two-sample DCF mean test is such that we reject $H_0: \mu^X = \mu^Y$ at significance level $\alpha \in (0, 1)$, provided that

$$T_n = \|S_n^X - n^{1/2} m^{-1/2} S_m^Y\|_\infty \geq c_B(\alpha),$$

where $T_n = \|S_n^X - n^{1/2} m^{-1/2} S_m^Y\|_\infty$ is the test statistic that only depends on the infinity norm of the sample mean difference, and $c_B(\alpha)$, which plays a central role in this test, is a data-driven critical value defined in (5) of Theorem 3. It is worth mentioning that $c_B(\alpha)$ is easy to compute via a multiplier bootstrap based on a set of independently and identically distributed (i.i.d.) standard normal random variables that are independent of the data, where the explicit calculation is described after (6). Note that the computation of the proposed test is of order $O\{n(p + N)\}$, more efficient than the $O(Nnp)$ that is usually demanded by a general resampling method. In spite of the simple structure of $T_n$, we shall illustrate its desirable theoretical properties and superior numerical performance in the rest of the article.

Received October 2018; revised January 2019.

MSC2010 subject classifications. 62H05, 62F05.

Key words and phrases. High-dimensional central limit theorem, Kolmogorov distance, multiplier bootstrap, power function.

We emphasize that our main contributions lie in developing a practically useful test that is computationally efficient with rigorous theoretical guarantees given in Theorems 3–5. We begin by deriving nontrivial two-sample extensions of the one-sample central limit theorems and their corresponding bootstrap approximation theorems in high dimensions [9], where we do not require the ratio between sample sizes $n/(n + m)$ to converge but merely to reside within any open interval $(c_1, c_2)$, $0 < c_1 \leq c_2 < 1$, as $n, m \to \infty$. Further, Theorem 3 lays down a foundation for conducting the two-sample DCF mean test uniformly over all $\alpha \in (0, 1)$. The power of the proposed test is assessed in Theorem 4, which establishes the asymptotic equivalence between the estimated and true versions. Moreover, the asymptotic power is shown to be consistent in Theorem 5 under some general alternatives with no sparsity or correlation constraints.

The proposed test sets itself apart from existing methods by allowing for non-i.i.d. random vectors in both samples. The distribution-free feature is in the sense that, under the umbrella of some mild assumptions on the moments and tail properties of the coordinates, there is no other restriction on the distributions of those random vectors. In contrast, the existing literature requires the random vectors within each sample to be i.i.d. [3–6], and some methods further restrict the coordinates to follow a certain type of distribution, such as Gaussian or sub-Gaussian [26, 29]. This feature frees the proposed test from assumptions such as i.i.d. or sub-Gaussianity, which is desirable as distributions of real data are often confounded by numerous factors unknown to researchers. Another key feature is that the test is correlation-free, in the sense that individual random vectors may have different and arbitrary correlation structures. By contrast, most previous works assume not only a common within-sample correlation matrix, but also some structural conditions, such as those on the trace [5], mixing conditions [21] or eigenvalues bounded from below [3]. It is worth noting that our assumptions on the moments and tail properties of the coordinates in random vectors are also weaker than those adopted in the literature; for example, [3, 11] and [21] assumed a common fixed upper bound on those moments, while [5] and [19] allowed a portion of those moments to grow but paid a price in correlation assumptions.

We also stress that the proposed test possesses consistent power behavior under fairly general alternatives (a mild separation lower bound on $\mu^X - \mu^Y$ in Theorem 5) with neither sparsity nor correlation conditions, while previous work requires either sparsity [26], or structural assumptions on signal strength [5, 11] or correlation [21], or both [3]. Lastly, we point out that the data dimension $p$ can be exponentially high relative to the sample size under the umbrella of such mild assumptions. This is also favorable compared to previous work, as [3, 5] and [21] allowed such ultrahigh dimensions only under nontrivial conditions on either the distribution type (e.g., sub-Gaussian) or the correlation structure (or both) as a tradeoff.

We conclude the Introduction by noting relevant work on the one-sample high-dimensional mean test, such as [14–18, 20, 23, 27, 28] and [1], among others. It is relatively easier to develop a one-sample DCF mean test with similar advantages based on the results in [9], so this is not pursued here. The rest of the article is organized as follows. In Section 2, we present the two-sample high-dimensional central limit theorem, and the result on the multiplier bootstrap for evaluating the Gaussian approximation. In Section 3, we establish the main result, Theorem 3, for conducting the proposed test, and Theorem 4 to approximate its power function, followed by Theorem 5 to analyze its asymptotic power under alternatives. A simulation study is carried out in Section 4 to compare with existing methods, and an application to a real data example is presented in Section 5. We collect the auxiliary lemmas and the proofs of the main results, Theorems 3–5, in the Appendix, and relegate the proofs of Theorems 1–2, Corollary 1 and the auxiliary lemmas to the online Supplementary Material [22] to save space.

2. Two-sample central limit theorem and multiplier bootstrap in high dimensions. In this section, we first present an intelligible two-sample central limit theorem in high dimensions, which is derived from its more abstract version in Lemma 4 in the Appendix. We then elaborate the result on the asymptotic equivalence between the Gaussian approximation appearing in the two-sample central limit theorem and its multiplier bootstrap counterpart, whose abstract version is given in Lemma 5.

We first list some notation used throughout the paper. For two vectors $x = (x_1, \ldots, x_p)' \in \mathbb{R}^p$ and $y = (y_1, \ldots, y_p)' \in \mathbb{R}^p$, write $x \leq y$ if $x_j \leq y_j$ for all $j = 1, \ldots, p$. For any $x = (x_1, \ldots, x_p)' \in \mathbb{R}^p$ and $a \in \mathbb{R}$, denote $x + a = (x_1 + a, \ldots, x_p + a)'$. For any $a, b \in \mathbb{R}$, use the notation $a \vee b = \max\{a, b\}$ and $a \wedge b = \min\{a, b\}$. For any two sequences of constants $a_n$ and $b_n$, write $a_n \lesssim b_n$ if $a_n \leq C b_n$ up to a universal constant $C > 0$, and $a_n \sim b_n$ if $a_n \lesssim b_n$ and $b_n \lesssim a_n$. For any matrix $A = (a_{ij})$, define $\|A\|_\infty = \max_{i,j} |a_{ij}|$. For any function $f: \mathbb{R} \to \mathbb{R}$, write $\|f\|_\infty = \sup_{z \in \mathbb{R}} |f(z)|$. For a smooth function $g: \mathbb{R}^p \to \mathbb{R}$, we adopt indices to represent the partial derivatives for brevity, for example, $\partial_j \partial_k \partial_l g = g_{jkl}$. For any $\alpha > 0$, define the function $\psi_\alpha(x) = \exp(x^\alpha) - 1$ for $x \in [0, \infty)$; then for any random variable $X$, define

$$\|X\|_{\psi_\alpha} = \inf\{\lambda > 0 : E\{\psi_\alpha(|X|/\lambda)\} \leq 1\}, \tag{1}$$

which is an Orlicz norm for $\alpha \in [1, \infty)$ and a quasi-norm for $\alpha \in (0, 1)$.

Denote $F^n = \{F_1, \ldots, F_n\}$ as a set of mutually independent random vectors in $\mathbb{R}^p$ such that $F_i = (F_{i1}, \ldots, F_{ip})'$ and $F_i \sim N_p(\mu^X, E\{(X_i - \mu^X)(X_i - \mu^X)'\})$ for all $i = 1, \ldots, n$, which denotes a Gaussian approximation to $X^n$. Likewise, define a set of mutually independent random vectors $G^m = \{G_1, \ldots, G_m\}$ in $\mathbb{R}^p$ such that $G_i = (G_{i1}, \ldots, G_{ip})'$ and $G_i \sim N_p(\mu^Y, E\{(Y_i - \mu^Y)(Y_i - \mu^Y)'\})$ for all $i = 1, \ldots, m$ to approximate $Y^m$. The sets $X^n$, $Y^m$, $F^n$ and $G^m$ are assumed to be independent of each other. To this end, denote the normalized sums $S_n^X$, $S_n^F$, $S_m^Y$ and $S_m^G$ by

$$S_n^X = n^{-1/2} \sum_{i=1}^n X_i = (S^X_{n1}, \ldots, S^X_{np})', \quad S_n^F = n^{-1/2} \sum_{i=1}^n F_i = (S^F_{n1}, \ldots, S^F_{np})',$$

$$S_m^Y = m^{-1/2} \sum_{i=1}^m Y_i = (S^Y_{m1}, \ldots, S^Y_{mp})', \quad S_m^G = m^{-1/2} \sum_{i=1}^m G_i = (S^G_{m1}, \ldots, S^G_{mp})',$$

where $S_n^F$ and $S_m^G$ serve as the Gaussian approximations for $S_n^X$ and $S_m^Y$, respectively. Lastly, denote a set of independent standard normal random variables $e^{n+m} = \{e_1, \ldots, e_{n+m}\}$ that is independent of any of $X^n$, $F^n$, $Y^m$ and $G^m$.

2.1. Two-sample central limit theorem in high dimensions. To introduce Theorem 1, a list of useful notation is given as follows. Denote

$$L_n^X = \max_{1 \leq j \leq p} \sum_{i=1}^n E(|X_{ij} - \mu^X_j|^3)/n, \quad L_m^Y = \max_{1 \leq j \leq p} \sum_{i=1}^m E(|Y_{ij} - \mu^Y_j|^3)/m.$$

We denote the key quantity $\rho^{**}_{n,m}$ by

$$\rho^{**}_{n,m} = \sup_{A \in \mathcal{A}^{\mathrm{Re}}} \big| P(S_n^X - n^{1/2}\mu^X + \delta_{n,m} S_m^Y - \delta_{n,m} m^{1/2}\mu^Y \in A) - P(S_n^F - n^{1/2}\mu^X + \delta_{n,m} S_m^G - \delta_{n,m} m^{1/2}\mu^Y \in A) \big|, \tag{2}$$

where $P(S_n^X - n^{1/2}\mu^X + \delta_{n,m} S_m^Y - \delta_{n,m} m^{1/2}\mu^Y \in A)$ represents the unknown probability of interest, $P(S_n^F - n^{1/2}\mu^X + \delta_{n,m} S_m^G - \delta_{n,m} m^{1/2}\mu^Y \in A)$ serves as a Gaussian approximation to this probability of interest, and $\rho^{**}_{n,m}$ measures the error of approximation over all hyperrectangles $A \in \mathcal{A}^{\mathrm{Re}}$. Note that $\mathcal{A}^{\mathrm{Re}}$ is the class of all hyperrectangles in $\mathbb{R}^p$ of the form $\{w \in \mathbb{R}^p : a_j \leq w_j \leq b_j \text{ for all } j = 1, \ldots, p\}$ with $-\infty \leq a_j \leq b_j \leq \infty$ for all $j = 1, \ldots, p$. By assuming more specific conditions, Theorem 1 gives a more explicit bound on $\rho^{**}_{n,m}$ compared to Lemma 4.

THEOREM 1. For any sequence of constants $\delta_{n,m}$, assume we have the following conditions (a)–(e):

(a) There exist universal constants $\delta_1 > \delta_2 > 0$ such that $\delta_2 < |\delta_{n,m}| < \delta_1$.

(b) There exists a universal constant $b > 0$ such that

$$\min_{1 \leq j \leq p} E\{(S^X_{nj} - n^{1/2}\mu^X_j + \delta_{n,m} S^Y_{mj} - \delta_{n,m} m^{1/2}\mu^Y_j)^2\} \geq b.$$

(c) There exists a sequence of constants $B_{n,m} \geq 1$ such that $L_n^X \leq B_{n,m}$ and $L_m^Y \leq B_{n,m}$.

(d) The sequence of constants $B_{n,m}$ defined in (c) also satisfies

$$\max_{1 \leq i \leq n} \max_{1 \leq j \leq p} E\{\exp(|X_{ij} - \mu^X_j|/B_{n,m})\} \leq 2, \quad \max_{1 \leq i \leq m} \max_{1 \leq j \leq p} E\{\exp(|Y_{ij} - \mu^Y_j|/B_{n,m})\} \leq 2.$$

(e) There exists a universal constant $c_1 > 0$ such that

$$(B_{n,m})^2 \{\log(pn)\}^7/n \leq c_1, \quad (B_{n,m})^2 \{\log(pm)\}^7/m \leq c_1.$$

Then we have the following property, where $\rho^{**}_{n,m}$ is defined in (2):

$$\rho^{**}_{n,m} \leq K_3 \Big[ \big\{(B_{n,m})^2 \{\log(pn)\}^7/n\big\}^{1/6} + \big\{(B_{n,m})^2 \{\log(pm)\}^7/m\big\}^{1/6} \Big],$$

for a universal constant $K_3 > 0$.

Conditions (a)–(c) correspond to the moment properties of the coordinates, and (d) concerns the tail properties. It follows from (a) and (b) that the moments on average are bounded below away from zero, hence allowing a certain proportion of these moments to converge to zero. This is weaker than previous work, which usually requires a uniform lower bound on all moments [3, 11, 21]. Condition (c) implies that the moments on average have an upper bound $B_{n,m}$ that can diverge to infinity without restriction on correlation, and thus offers more flexibility than conditions in the literature that demand either a fixed upper bound or a certain correlation structure or both. To appreciate this, letting $B_{n,m} \sim n^{1/3}$, one notes that all the variances of the coordinates are allowed to be uniformly as large as $B_{n,m}^{2/3} \sim n^{2/9} \to \infty$ under condition (c), while no restriction on correlation is needed. As a comparison, if we assign a common covariance to two samples, say $\Sigma = (\Sigma_{jk})_{1 \leq j,k \leq p}$ with each $\Sigma_{jk} = n^{2/9} \rho^{1\{j \neq k\}}$ for some constant $\rho \in (0, 1)$, then the trace condition in [5] implies that $p = o(1)$. Compared with a fixed upper bound on the tails of the coordinates [3, 21], condition (d) allows for uniformly diverging tails as long as $B_{n,m} \to \infty$. Condition (e) indicates that the data dimension $p$ can grow exponentially in $n$, provided that $B_{n,m}$ is of some appropriate order. These conditions as a whole set the basis for the so-called “distribution and correlation-free” features.

2.2. Two-sample multiplier bootstrap in high dimensions. The Gaussian approximation probability appearing in $\rho^{**}_{n,m}$ in (2) is unknown, which limits the applicability of the central limit theorem for inference. The idea is to adopt a multiplier bootstrap to approximate this Gaussian approximation, and to quantify the approximation error bound. Denote

$$\Sigma^X = n^{-1} \sum_{i=1}^n E\{(X_i - \mu^X)(X_i - \mu^X)'\}, \quad \hat{\Sigma}^X = n^{-1} \sum_{i=1}^n (X_i - \bar{X})(X_i - \bar{X})',$$

where $\bar{X} = n^{-1} \sum_{i=1}^n X_i = (\bar{X}_1, \ldots, \bar{X}_p)'$. Analogously, denote $\Sigma^Y$, $\hat{\Sigma}^Y$ and $\bar{Y}$. Now we introduce the multiplier bootstrap approximation in this context. Let $e^{n+m} = \{e_1, \ldots, e_{n+m}\}$ be a set of i.i.d. standard normal random variables independent of the data. We further denote

$$S_n^{eX} = n^{-1/2} \sum_{i=1}^n e_i (X_i - \bar{X}), \quad S_m^{eY} = m^{-1/2} \sum_{i=1}^m e_{i+n} (Y_i - \bar{Y}), \tag{3}$$

and it is obvious that $E_e(S_n^{eX} S_n^{eX\prime}) = \hat{\Sigma}^X$ and $E_e(S_m^{eY} S_m^{eY\prime}) = \hat{\Sigma}^Y$, where $E_e(\cdot)$ means the expectation with respect to $e^{n+m}$ only. Then, for any sequence of constants $\delta_{n,m}$ that depends on both $n$ and $m$, we denote the quantity of interest $\rho^{MB}_{n,m}$ by

$$\rho^{MB}_{n,m} = \sup_{A \in \mathcal{A}^{\mathrm{Re}}} \big| P_e(S_n^{eX} + \delta_{n,m} S_m^{eY} \in A) - P(S_n^F - n^{1/2}\mu^X + \delta_{n,m} S_m^G - \delta_{n,m} m^{1/2}\mu^Y \in A) \big|, \tag{4}$$

where $P_e(\cdot)$ means the probability with respect to $e^{n+m}$ only, and $P_e(S_n^{eX} + \delta_{n,m} S_m^{eY} \in A)$ acts as the multiplier bootstrap approximation for the Gaussian approximation $P(S_n^F - n^{1/2}\mu^X + \delta_{n,m} S_m^G - \delta_{n,m} m^{1/2}\mu^Y \in A)$. In particular, $\rho^{MB}_{n,m}$ can be understood as a measure of error between the two approximations over all hyperrectangles $A \in \mathcal{A}^{\mathrm{Re}}$. The following theorem provides a more explicit bound on $\rho^{MB}_{n,m}$ in contrast to its abstract version stated in Lemma 5 in the Appendix.

THEOREM 2. For any sequence of constants $\delta_{n,m}$, assume we have the following conditions (a)–(e):

(a) There exists a universal constant $\delta_1 > 0$ such that $|\delta_{n,m}| < \delta_1$.

(b) There exists a universal constant $b > 0$ such that

$$\min_{1 \leq j \leq p} E\{(S^X_{nj} - n^{1/2}\mu^X_j + \delta_{n,m} S^Y_{mj} - \delta_{n,m} m^{1/2}\mu^Y_j)^2\} \geq b.$$

(c) There exists a sequence of constants $B_{n,m} \geq 1$ such that

$$\max_{1 \leq j \leq p} \sum_{i=1}^n E(|X_{ij} - \mu^X_j|^4)/n \leq B_{n,m}^2, \quad \max_{1 \leq j \leq p} \sum_{i=1}^m E(|Y_{ij} - \mu^Y_j|^4)/m \leq B_{n,m}^2.$$

(d) The sequence of constants $B_{n,m}$ defined in (c) also satisfies

$$\max_{1 \leq i \leq n} \max_{1 \leq j \leq p} E\{\exp(|X_{ij} - \mu^X_j|/B_{n,m})\} \leq 2, \quad \max_{1 \leq i \leq m} \max_{1 \leq j \leq p} E\{\exp(|Y_{ij} - \mu^Y_j|/B_{n,m})\} \leq 2.$$

(e) There exists a sequence of constants $\alpha_{n,m} \in (0, e^{-1})$ such that

$$B_{n,m}^2 \log^5(pn) \log^2(1/\alpha_{n,m})/n \leq 1, \quad B_{n,m}^2 \log^5(pm) \log^2(1/\alpha_{n,m})/m \leq 1.$$

Then there exists a universal constant $c_* > 0$ such that with probability at least $1 - \gamma_{n,m}$, where

$$\begin{aligned} \gamma_{n,m} ={}& (\alpha_{n,m})^{\log(pn)/3} + 3(\alpha_{n,m})^{\log^{1/2}(pn)/c_*} + (\alpha_{n,m})^{\log(pm)/3} + 3(\alpha_{n,m})^{\log^{1/2}(pm)/c_*} \\ &+ (\alpha_{n,m})^{\log^3(pn)/6} + 3(\alpha_{n,m})^{\log^3(pn)/c_*} + (\alpha_{n,m})^{\log^3(pm)/6} + 3(\alpha_{n,m})^{\log^3(pm)/c_*}, \end{aligned}$$

we have the following property, where $\rho^{MB}_{n,m}$ is defined in (4):

$$\rho^{MB}_{n,m} \lesssim \big\{B_{n,m}^2 \log^5(pn) \log^2(1/\alpha_{n,m})/n\big\}^{1/6} + \big\{B_{n,m}^2 \log^5(pm) \log^2(1/\alpha_{n,m})/m\big\}^{1/6}.$$

Conditions (a)–(c) pertain to the moment properties of the coordinates, condition (d) concerns the tail properties and condition (e) characterizes the order of $p$. These conditions share the desirable features of those in Theorem 1, such as allowing for uniformly diverging moments and tails. Moreover, by combining Theorem 2 with a two-sample Borel–Cantelli lemma (i.e., Lemma 6), for which condition (f) is needed, one can deduce Corollary 1 below, which facilitates the derivation of our main result in Theorem 3.

COROLLARY 1. For any sequence of constants $\delta_{n,m}$, assume the conditions (a)–(e) in Theorem 2 hold. Also suppose that the condition (f) holds as follows:

(f) The sequence of constants $\gamma_{n,m}$ defined in Theorem 2 also satisfies

$$\sum_n \sum_m \gamma_{n,m} < \infty.$$

Then with probability one, we have the following property, where $\rho^{MB}_{n,m}$ is defined in (4):

$$\rho^{MB}_{n,m} \lesssim \big\{B_{n,m}^2 \log^5(pn) \log^2(1/\alpha_{n,m})/n\big\}^{1/6} + \big\{B_{n,m}^2 \log^5(pm) \log^2(1/\alpha_{n,m})/m\big\}^{1/6}.$$

3. Two-sample mean test in high dimensions. In this section, based on the theoretical results from the preceding section, we first establish the main result, Theorem 3, which gives a confidence region for the mean difference $(\mu^X - \mu^Y)$ and, equivalently, the DCF test procedure. We note that the theoretical guarantee is uniform for all $\alpha \in (0, 1)$ with probability one.

THEOREM 3. Assume we have the following conditions (a)–(e):

(a) $n/(n + m) \in (c_1, c_2)$, for some universal constants $0 < c_1 < c_2 < 1$.

(b) There exists a universal constant $b > 0$ such that

$$\min_{1 \leq j \leq p} \big[ E\{(S^X_{nj} - n^{1/2}\mu^X_j)^2\} + E\{(S^Y_{mj} - m^{1/2}\mu^Y_j)^2\} \big] \geq b.$$

(c) There exists a sequence of constants $B_{n,m} \geq 1$ such that

$$\max_{1 \leq j \leq p} \sum_{i=1}^n E(|X_{ij} - \mu^X_j|^{k+2})/n \leq B_{n,m}^k, \quad \max_{1 \leq j \leq p} \sum_{i=1}^m E(|Y_{ij} - \mu^Y_j|^{k+2})/m \leq B_{n,m}^k,$$

for all $k = 1, 2$.

(d) The sequence of constants $B_{n,m}$ defined in (c) also satisfies

$$\max_{1 \leq i \leq n} \max_{1 \leq j \leq p} E\{\exp(|X_{ij} - \mu^X_j|/B_{n,m})\} \leq 2, \quad \max_{1 \leq i \leq m} \max_{1 \leq j \leq p} E\{\exp(|Y_{ij} - \mu^Y_j|/B_{n,m})\} \leq 2.$$

(e) $B_{n,m}^2 \log^7(pn)/n \to 0$ as $n \to \infty$.

Then with probability one, the Kolmogorov distance between the distributions of the quantity $\|S_n^X - n^{1/2} m^{-1/2} S_m^Y - n^{1/2}(\mu^X - \mu^Y)\|_\infty$ and the quantity $\|S_n^{eX} - n^{1/2} m^{-1/2} S_m^{eY}\|_\infty$ satisfies

$$\sup_{t \geq 0} \big| P(\|S_n^X - n^{1/2} m^{-1/2} S_m^Y - n^{1/2}(\mu^X - \mu^Y)\|_\infty \leq t) - P_e(\|S_n^{eX} - n^{1/2} m^{-1/2} S_m^{eY}\|_\infty \leq t) \big| \lesssim \big\{B_{n,m}^2 \log^7(pn)/n\big\}^{1/6},$$

where $S_n^{eX}$ and $S_m^{eY}$ are as in (3), and $P_e(\cdot)$ means the probability with respect to $e^{n+m}$ only. Consequently,

$$\sup_{\alpha \in (0,1)} \big| P(\|S_n^X - n^{1/2} m^{-1/2} S_m^Y - n^{1/2}(\mu^X - \mu^Y)\|_\infty \leq c_B(\alpha)) - (1 - \alpha) \big| \lesssim \big\{B_{n,m}^2 \log^7(pn)/n\big\}^{1/6},$$

where

$$c_B(\alpha) = \inf\big\{t \in \mathbb{R} : P_e(\|S_n^{eX} - n^{1/2} m^{-1/2} S_m^{eY}\|_\infty \leq t) \geq 1 - \alpha\big\}, \tag{5}$$

for $\alpha \in (0, 1)$, where $S_n^{eX}$ and $S_m^{eY}$ are as in (3), and $P_e(\cdot)$ denotes the probability with respect to $e^{n+m}$ only.

Note that condition (a) concerns the relative sample sizes and allows the ratio $n/(n + m)$ to vary without converging within any open interval $(c_1, c_2)$ for $0 < c_1 < c_2 < 1$, rather than demanding convergence as in existing work. Conditions (b) and (c) concern the moment properties of the coordinates, while condition (d) is associated with the tail properties, and condition (e) quantifies the order of $p$. By inspection, these conditions are slightly stronger than those in Theorems 1 and 2, but still maintain all desired advantages. To appreciate such benefits, consider the following example:

$$n/(n + m) \in (0.1, 0.9), \quad B_{n,m} \sim n^{1/9}, \quad \log p \sim n^{\alpha}, \quad \alpha \in (0, 1/9),$$

$$X_1, \ldots, X_{\lfloor n/2 \rfloor} \overset{\text{i.i.d.}}{\sim} N(0_p, \Sigma), \quad X_{\lfloor n/2 \rfloor + 1}, \ldots, X_n \overset{\text{i.i.d.}}{\sim} N(0_p, 2\Sigma),$$

$$Y_1, \ldots, Y_{\lfloor m/3 \rfloor} \overset{\text{i.i.d.}}{\sim} N(1_p, 3\Sigma), \quad Y_{\lfloor m/3 \rfloor + 1}, \ldots, Y_m \overset{\text{i.i.d.}}{\sim} N(1_p, 4\Sigma),$$

where $1_p$ is the vector of ones, and the covariance matrix $\Sigma = (\Sigma_{jk}) \in \mathbb{R}^{p \times p}$ has each $\Sigma_{jk} = n^{2/27} \rho^{1\{j \neq k\}}$ for some constant $\rho \in (0, 1)$. Then one can verify that this example fulfills all conditions in Theorem 3, but violates the assumptions in most existing articles, which require i.i.d. samples or trace conditions [5].

From Theorem 3, the $100(1 - \alpha)\%$ confidence region for $(\mu^X - \mu^Y)$ can be expressed as

$$\mathrm{CR}_{1-\alpha} = \big\{\mu^X - \mu^Y : \|S_n^X - n^{1/2} m^{-1/2} S_m^Y - n^{1/2}(\mu^X - \mu^Y)\|_\infty \leq c_B(\alpha)\big\}.$$

Equivalently, the proposed test procedure in (6) is such that we reject $H_0: \mu^X = \mu^Y$ at significance level $\alpha \in (0, 1)$, if

$$T_n = \|S_n^X - n^{1/2} m^{-1/2} S_m^Y\|_\infty \geq c_B(\alpha), \tag{6}$$

where the data-driven critical value $c_B(\alpha)$ in (5) admits fast computation via the multiplier bootstrap using an independent set of i.i.d. standard normal random variables, which is implemented as follows:

• Generate $N$ sets of standard normal random variables, each of size $(n + m)$, denoted by $e^{n+m}_1, \ldots, e^{n+m}_N$ as random copies of $e^{n+m} = \{e_1, \ldots, e_{n+m}\}$. Then calculate $N$ times the quantity $T_n^e = \|S_n^{eX} - n^{1/2} m^{-1/2} S_m^{eY}\|_\infty$ while keeping $X^n$ and $Y^m$ fixed, where $S_n^{eX}$ and $S_m^{eY}$ are in (3). These values are denoted as $\{T_n^{e1}, \ldots, T_n^{eN}\}$, whose $100(1 - \alpha)$th percentile is used to approximate $c_B(\alpha)$.


It is easy to see that the computation of the DCF test is of the order O{n(p + N)}, compared

with O(Nnp) that is usually demanded by a general resampling method.
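The bootstrap steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function name `dcf_test`, its arguments and the explicit loop are assumptions made here for readability. The loop recomputes each bootstrap statistic directly at a cost of order $N(n + m)p$, so it does not attempt to reproduce the $O\{n(p + N)\}$ complexity stated in the text.

```python
import numpy as np


def dcf_test(X, Y, alpha=0.05, N=1000, rng=None):
    """Sketch of the two-sample DCF mean test (hypothetical helper).

    X is an (n, p) array, Y is an (m, p) array.
    Returns (T_n, c_B, reject).
    """
    rng = np.random.default_rng(rng)
    n, m = X.shape[0], Y.shape[0]
    xbar, ybar = X.mean(axis=0), Y.mean(axis=0)

    # T_n = ||S_n^X - n^{1/2} m^{-1/2} S_m^Y||_inf = n^{1/2} ||Xbar - Ybar||_inf
    T_n = np.sqrt(n) * np.max(np.abs(xbar - ybar))

    # Centered data enter the multiplier sums in (3).
    Xc, Yc = X - xbar, Y - ybar
    T_boot = np.empty(N)
    for b in range(N):
        e = rng.standard_normal(n + m)      # one copy of e^{n+m}
        S_eX = e[:n] @ Xc / np.sqrt(n)      # S_n^{eX}
        S_eY = e[n:] @ Yc / np.sqrt(m)      # S_m^{eY}
        T_boot[b] = np.max(np.abs(S_eX - np.sqrt(n / m) * S_eY))

    # Empirical 100(1 - alpha)th percentile approximates c_B(alpha) in (5).
    c_B = np.quantile(T_boot, 1.0 - alpha)
    return T_n, c_B, bool(T_n >= c_B)
```

A call such as `dcf_test(X, Y, alpha=0.05, N=10_000)` returns the statistic, the bootstrap critical value and the rejection decision in (6); the data are held fixed while only the multipliers are redrawn.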

According to (6), the true power function for the test can be formulated as

$$\mathrm{Power}(\mu^X - \mu^Y) = P\big(\|S_n^X - n^{1/2} m^{-1/2} S_m^Y\|_\infty \geq c_B(\alpha) \mid \mu^X - \mu^Y\big). \tag{7}$$

To quantify the power of the DCF test, the expression (7) is not directly applicable since the distribution of $(S_n^X - n^{1/2} m^{-1/2} S_m^Y)$ is unknown. Motivated by Theorem 3, we propose another multiplier bootstrap approximation for $\mathrm{Power}(\mu^X - \mu^Y)$, based on a different set of standard normal random variables $e^{*n+m} = \{e^*_1, \ldots, e^*_{n+m}\}$ independent of the $e^{n+m}$ that are used to calculate $c_B(\alpha)$,

$$\mathrm{Power}^*(\mu^X - \mu^Y) = P_{e^*}\big(\|S_n^{e^*X} - n^{1/2} m^{-1/2} S_m^{e^*Y} + n^{1/2}(\mu^X - \mu^Y)\|_\infty \geq c_B(\alpha)\big), \tag{8}$$

where $S_n^{e^*X}$ and $S_m^{e^*Y}$ are as defined in (3) with $e^{*n+m}$ instead of $e^{n+m}$, and $P_{e^*}(\cdot)$ means the probability with respect to $e^{*n+m}$ only. The following theorem is devoted to establishing the asymptotic equivalence between $\mathrm{Power}(\mu^X - \mu^Y)$ and $\mathrm{Power}^*(\mu^X - \mu^Y)$ under the same conditions as those in Theorem 3.

THEOREM 4. Assume the conditions (a)–(e) in Theorem 3 hold. Then for any $\mu^X - \mu^Y \in \mathbb{R}^p$, we have with probability one,

$$\big|\mathrm{Power}^*(\mu^X - \mu^Y) - \mathrm{Power}(\mu^X - \mu^Y)\big| \lesssim \big\{B_{n,m}^2 \log^7(pn)/n\big\}^{1/6}.$$
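As an illustration of the bootstrap power approximation in (8), the following NumPy sketch estimates $\mathrm{Power}^*$ by Monte Carlo over fresh multipliers. The function name `bootstrap_power` and its signature are assumptions introduced here; the critical value `c_B` is taken as an input that is presumed to have been computed beforehand from an independent set of multipliers, and `mean_diff` stands for a hypothesized value of $\mu^X - \mu^Y$.

```python
import numpy as np


def bootstrap_power(X, Y, mean_diff, c_B, N=1000, rng=None):
    """Monte Carlo estimate of Power* in (8) (hypothetical helper).

    X: (n, p) array, Y: (m, p) array, mean_diff: length-p hypothesized
    value of mu^X - mu^Y, c_B: critical value from an independent bootstrap.
    """
    rng = np.random.default_rng(rng)
    n, m = X.shape[0], Y.shape[0]
    Xc, Yc = X - X.mean(axis=0), Y - Y.mean(axis=0)
    shift = np.sqrt(n) * np.asarray(mean_diff, dtype=float)

    exceed = 0
    for _ in range(N):
        e = rng.standard_normal(n + m)          # one copy of e^{*n+m}
        S_eX = e[:n] @ Xc / np.sqrt(n)          # S_n^{e*X}
        S_eY = e[n:] @ Yc / np.sqrt(m)          # S_m^{e*Y}
        stat = np.max(np.abs(S_eX - np.sqrt(n / m) * S_eY + shift))
        exceed += int(stat >= c_B)
    return exceed / N
```

Evaluating the function at `mean_diff = 0` gives a bootstrap estimate of the size, while nonzero values trace out an estimated power curve.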

By inspection of the conditions in Theorem 4, it is worth mentioning that neither sparsity

nor correlation restriction is required, as opposed to previous work requiring sparsity [3]

for instance. To appreciate this point, the asymptotic power under fairly general alternatives

specified by condition (f) is analyzed in the theorem below.

THEOREM 5. Assume the conditions (a)–(e) in Theorem 3 and that

(f) $\mathcal{F}_{n,m,p} = \big\{\mu^X \in \mathbb{R}^p, \mu^Y \in \mathbb{R}^p : \|\mu^X - \mu^Y\|_\infty \geq K_s \{B_{n,m} \log(pn)/n\}^{1/2}\big\}$, for a sufficiently large universal constant $K_s > 0$.

Then for any $\mu^X - \mu^Y \in \mathcal{F}_{n,m,p}$, we have with probability tending to one,

$$\mathrm{Power}^*(\mu^X - \mu^Y) \to 1 \quad \text{as } n \to \infty.$$

The set $\mathcal{F}_{n,m,p}$ in (f) imposes a lower bound on the separation between $\mu^X$ and $\mu^Y$, which is comparable to the assumption $\max_i |\delta_i/\sigma_{i,i}^{1/2}| \geq \{2\beta \log(p)/n\}^{1/2}$ in Theorem 2 in [3]. The latter is in fact a special case of condition (f) when the sequence $B_{n,m}$ is constant. It is worth mentioning that the asymptotic power converges to 1 under neither sparsity nor correlation assumptions in the context of our theorem. In contrast, Theorem 2 in [3] requires not only sparse alternatives, but also restrictions on the correlation structure, for example, condition 1 in that theorem such that the eigenvalues of the correlation matrix $\mathrm{diag}(\Sigma)^{-1/2} \Sigma\, \mathrm{diag}(\Sigma)^{-1/2}$ are lower bounded by a positive universal constant. These comparisons reveal that the proposed DCF test is powerful for a broader range of alternatives. We conclude this section by noting that the theory for a DCF-type test based on the $L_2$-norm can also be of interest but is not yet established, and needs further investigation.


4. Simulation studies. In the two-sample test for high-dimensional means, methods that are frequently used and/or recently proposed include the tests of [5] (abbreviated as CQ, an $L_2$ norm test), [3] (abbreviated as CL, an $L_\infty$ norm test) and [21] (abbreviated as XL, a test combining $L_2$ and $L_\infty$ norms). We conduct comprehensive simulation studies to compare our DCF test with these existing methods in terms of size and power under various settings. The two samples $X^n = \{X_i\}_{i=1}^n$ and $Y^m = \{Y_i\}_{i=1}^m$ have sizes $(n, m)$, while the data dimension is chosen to be $p = 1000$. Without loss of generality, we let $\mu^X = 0 \in \mathbb{R}^p$. The structure of $\mu^Y \in \mathbb{R}^p$ is controlled by a signal strength parameter $\delta > 0$ and a sparsity level parameter $\beta \in [0, 1]$. To construct $\mu^Y$, in each scenario, we first generate a sequence of i.i.d. random variables $\theta_k \sim U(-\delta, \delta)$ for $k = 1, \ldots, p$ and keep them fixed in the simulation under that scenario. We set $\delta(r) = \{2r \log(p)/(n \vee m)\}^{1/2}$, which gives an appropriate scale of signal strength [3, 5, 28]. We take $\mu^Y = (\theta_1, \ldots, \theta_{\lfloor \beta p \rfloor}, 0'_{p - \lfloor \beta p \rfloor})' \in \mathbb{R}^p$, where $\lfloor a \rfloor$ denotes the nearest integer no more than $a$, and $0_q$ is the $q$-dimensional vector of 0's. Thus the signal becomes sparser for a smaller value of $\beta$, with $\beta = 0$ corresponding to the null hypothesis and $\beta = 1$ representing the fully dense alternative. The covariance matrices of the random vectors are denoted by $\mathrm{cov}(X_i) = \Sigma^{X_i}$, $\mathrm{cov}(Y_{i'}) = \Sigma^{Y_{i'}}$ for all $i = 1, \ldots, n$, $i' = 1, \ldots, m$. The nominal significance level is $\alpha = 0.05$, and the DCF test is conducted based on the multiplier bootstrap of size $N = 10^4$.
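The construction of $\mu^Y$ described above can be written down directly. The sketch below is illustrative only; the helper name `make_mu_y` and its arguments are assumptions, not part of the original study.

```python
import numpy as np


def make_mu_y(p, delta, beta, rng=None):
    """Build mu^Y = (theta_1, ..., theta_{floor(beta p)}, 0, ..., 0)'.

    theta_k are i.i.d. U(-delta, delta); beta in [0, 1] is the sparsity level.
    Hypothetical helper for illustration.
    """
    rng = np.random.default_rng(rng)
    theta = rng.uniform(-delta, delta, size=p)
    # floor(beta * p); floating-point rounding can matter at exact boundaries.
    k = int(np.floor(beta * p))
    mu_y = np.zeros(p)
    mu_y[:k] = theta[:k]
    return mu_y
```

With `beta = 0` the vector is identically zero (the null hypothesis), and with `beta = 1` every coordinate carries a signal. In a simulation, the returned vector would be generated once per scenario and then held fixed across Monte Carlo runs, as the text specifies.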

To have a comprehensive comparison, we first consider the following six different settings. The first setting is standard with $(n, m, p) = (200, 300, 1000)$, where the elements in each sample are i.i.d. Gaussian, and the two samples share a common covariance matrix $\Sigma = (\Sigma_{jk})_{1 \leq j,k \leq p}$. The matrix $\Sigma$ is specified by a dependence structure such that $\Sigma_{jk} = (1 + |j - k|)^{-1/4}$. Beginning with $\delta = 0.1$, where the implicitly chosen value $r = 0.217$ corresponds to a quite weak signal according to [3, 28], we calculate the rejection proportions of the four tests based on 1000 Monte Carlo runs over a full range of sparsity levels from $\beta = 0$ (corresponding to the null hypothesis) to $\beta = 1$ (corresponding to the fully dense alternative). Then the signals are gradually strengthened to $\delta = 0.15, 0.2, 0.25, 0.3$. The second setting is similar to the first, except for $\Sigma^{Y_{i'}} = 2\Sigma^{X_i} = 2\Sigma$ for all $i = 1, \ldots, n$, $i' = 1, \ldots, m$, where $\Sigma$ is defined in the first setting. These two settings are denoted by “i.i.d. equal (resp., unequal) covariance setting.”
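To make the first setting concrete, the following sketch builds the covariance matrix with entries $(1 + |j - k|)^{-1/4}$ and inverts the relation $\delta(r) = \{2r \log(p)/(n \vee m)\}^{1/2}$ to recover the value of $r$ implied by a given $\delta$. Both helper names are assumptions introduced for illustration.

```python
import numpy as np


def polynomial_decay_cov(p):
    """Matrix with entries (1 + |j - k|)^(-1/4), 1 <= j, k <= p."""
    idx = np.arange(p)
    return (1.0 + np.abs(idx[:, None] - idx[None, :])) ** (-0.25)


def implied_r(delta, p, n, m):
    """Solve delta = {2 r log(p) / max(n, m)}^(1/2) for r."""
    return delta ** 2 * max(n, m) / (2.0 * np.log(p))
```

For $(n, m, p) = (200, 300, 1000)$ and $\delta = 0.1$, `implied_r` gives $0.01 \times 300 / (2 \log 1000) \approx 0.217$, matching the value quoted in the text.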

In the third setting, the random vectors in each sample have completely different distribu-

tions and covariance matrices from one another. The procedure to generate the two samples

is as follows. First, a set of parameters {φ

ij

: i = 1,...,m,j = 1,...,p} are generated from

the uniform distribution U(1, 2) independently, and are kept fixed for all Monte Carlo runs.

In a similar fashion, {φ

∗

ij

: i = 1,...,m,j = 1,...,p} are generated from U(1, 3) indepen-

dently. Then, for every i = 1,...,n,wedefineap × p matrix

i

= (ω

ij k

)

1≤j,k≤p

with each

ω

ij k

= (φ

ij

φ

ik

)

1/2

(1 +|j − k|)

−1/4

. Likewise, for every i = 1,...,m,defineap × p matrix

∗

i

= (ω

∗

ij k

)

1≤j,k≤p

with each ω

∗

ij k

= (φ

∗

ij

φ

∗

ik

)

1/2

(1 +|j − k|)

−1/4

. Subsequently, we gener-

ate a set of i.i.d. random vectors

˘

X

n

={

˘

X

i

}

n

i=1

with each

˘

X

i

= (

˘

X

i1

,...,

˘

X

ip

)

∈ R

p

,such

that {

˘

X

i1

,...,

˘

X

i,2p/5

} are i.i.d. standard normal random variables, {

˘

X

i,2p/5+1

,...,

˘

X

i,p

} are

i.i.d. centered Gamma(16, 1/4) random variables, and they are independent of each other. Ac-

cordingly, we construct each X

i

by letting X

i

= μ

X

+

1/2

i

˘

X

i

for all i = 1,...,n.Itisworth

noting that

X

i

=

i

for all i = 1,...,n,thatis,X

i

’s have different covariance matrices and

distributions. The other sample Y

m

={Y

i

}

m

i=1

is constructed in the same way with

Y

i

=

∗

i

for all i = 1,...,m. Then we obtained the results for various signal strength levels of δ over

a full range of sparsity levels of β, and we denote this setting as “completely relaxed.” The

fourth setting is analogous to the third, except that we set (n,m,p)= (100, 400, 1000),where

two sample sizes deviates substantially from each other. Since this setting is concerned with

highly unequal sample sizes, and is therefore denoted as “completely relaxed and highly un-

equal setting.” The fifth setting is similar to the third, except that we replace the standard

DISTRIBUTION AND CORRELATION-FREE TWO-SAMPLE MEAN TEST 1313

normal innovations in $\breve{X}_i$ and $\breve{Y}_i$ by independent and heavy-tailed innovations $(5/3)^{-1/2}\,t(5)$ with mean zero and unit variances, referred to as “completely relaxed and heavy-tailed setting.” The sixth setting is also analogous to the third, while independent and skewed innovations $8^{-1/2}\{\chi^2(4) - 4\}$ with mean zero and unit variances are used, denoted by “completely relaxed and skewed setting.”
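The generation of one “completely relaxed” sample described above can be sketched as follows. This is a minimal illustration and not the authors' code: the function names are hypothetical, Gamma(16, 1/4) is assumed to use the shape/scale parameterization (so that the centered variable has unit variance), and the matrix square root is taken to be the symmetric one.

```python
import numpy as np

def sqrt_psd(mat):
    """Symmetric square root via eigendecomposition (eigenvalues clipped at 0)."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T

def make_sigma(phi_i):
    """Sigma_i with entries (phi_ij * phi_ik)^{1/2} * (1 + |j - k|)^{-1/4}."""
    p = phi_i.shape[0]
    idx = np.arange(p)
    decay = (1.0 + np.abs(idx[:, None] - idx[None, :])) ** (-0.25)
    return np.sqrt(np.outer(phi_i, phi_i)) * decay

def innovations(rng, size, p):
    """First 2p/5 coordinates N(0, 1); the rest centered Gamma(shape=16, scale=1/4)."""
    k = (2 * p) // 5
    z = rng.standard_normal((size, k))
    # Assumed parameterization: mean 16 * (1/4) = 4, variance 16 * (1/4)^2 = 1.
    g = rng.gamma(shape=16.0, scale=0.25, size=(size, p - k)) - 4.0
    return np.hstack([z, g])

def sample(rng, phi, mu):
    """Row i of the result is mu + Sigma_i^{1/2} @ innovation_i."""
    size, p = phi.shape
    raw = innovations(rng, size, p)
    return np.stack([mu + sqrt_psd(make_sigma(phi[i])) @ raw[i] for i in range(size)])
```

With p = 1000 this performs one 1000 × 1000 eigendecomposition per observation, so a small p is advisable when merely checking the construction.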

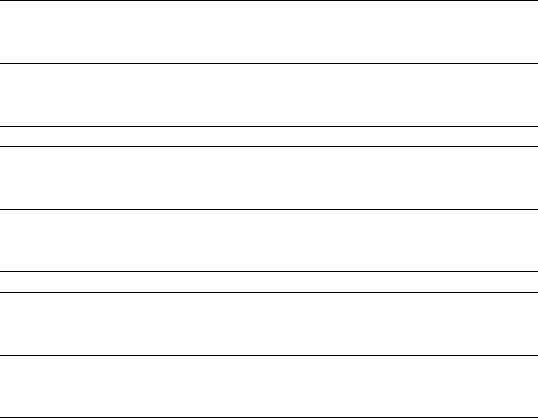

We conduct the four tests and calculate the rejection proportions to assess the empirical

power at different signal levels δ and sparsity levels β in each setting as described above,

based on 1000 Monte Carlo runs. The numerical results of these six settings are shown in

Tables 1–2. For visualization, we depict the empirical power plots of all settings in Figure 1.

We also display the multiplier bootstrap approximation based on another independent set of size $N = 10^4$, which agrees well with the empirical size/power of the DCF test and justifies the theoretical assessment in Theorem 4. We see that the empirical sizes of the proposed DCF

test agree well with the nominal level 0.05 in all six settings. By comparison, the CQ test is

not as stable, and the CL and XL tests show underestimation of type I error in all settings.

Regarding power performance under alternatives in these six settings, despite all tests suffering low power for the weak signals δ = 0.1 and δ = 0.15, the DCF test still dominates the other tests at all levels of β. When the signal strength rises to δ = 0.2, the results in Setting I

indicate that the DCF test outperforms the other tests, except for the CQ test when β ≥ 80%

(a very dense alternative). Although the power of CQ test increases above that of DCF test

at β = 80%, the gains are not substantial when both tests have high power. Similar patterns

are observed in Settings II, III, V, VI with δ = 0.25 for β ranging between 80% and 83%,

and Settings III, IV with δ = 0.3 for β at 80% and 90%, respectively. This phenomenon is visually shown in the power plot in Figure 1. It is also noted that the DCF test dominates the CL ($L_\infty$ type) and XL (combined type) tests uniformly in these settings over all levels of δ and β.

To summarize, except for the rapidly increased power of CQ test in very dense alternatives,

the DCF test outperforms the other tests over various signal levels of δ in a broad range of

sparsity levels β, for alternatives with varied magnitudes and signs. Moreover, the gains are

sustainable in the situations that the data structures get more complex, for example, highly

unbalanced sizes, heavy-tailed or skewed distributions.

We further examine alternatives with common/fixed signal, upon a reviewer’s request, under the “completely relaxed setting,” denoted by Setting VII, where we let $\mu^Y = \delta(\underbrace{1,\dots,1}_{\beta p},\, 0_{p-\beta p})'$. Note that the empirical sizes of the four tests in Setting VII are the same as those in Setting III (thus not reported), while the power patterns appear to favor the CQ test as the alternatives become denser (DCF still dominates over the range of less dense levels). Here, numerical power values are not tabulated for conciseness, given that the visualization in Figure 1 suffices. We conclude this section by pointing out that, compared to Settings I–VI in which the nonzero signals satisfy $\theta_k \sim U(-\delta, \delta)$, the alternatives in Setting VII with common/fixed signal are more stringent and easily violated in practice.
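To make the contrast between the two kinds of alternatives concrete, the following sketch builds a mean vector with random signals (Settings I–VI style) and one with a common signal (Setting VII style). The function names are hypothetical, and placing the nonzero entries in the first round(βp) coordinates is an assumption made only for illustration.

```python
import numpy as np

def random_signal(rng, p, beta, delta):
    """Nonzero entries drawn independently from U(-delta, delta)."""
    k = int(round(beta * p))  # assumed number of nonzero coordinates
    mu = np.zeros(p)
    mu[:k] = rng.uniform(-delta, delta, size=k)
    return mu

def common_signal(p, beta, delta):
    """Nonzero entries all equal to delta (common/fixed signal)."""
    k = int(round(beta * p))
    mu = np.zeros(p)
    mu[:k] = delta
    return mu
```

Under the random design the signs and magnitudes vary across coordinates, whereas under the common design every nonzero coordinate shifts by the same amount in the same direction.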

5. Real data example. We analyze a dataset obtained from the UCI Machine Learning

Repository, https://archive.ics.uci.edu/ml/datasets/eeg+database. The data consist of 122 in-

dividuals, out of which n = 45 participants belong to the control group, while the remaining

m = 77 are in the alcoholic group. In the experiment, each subject was shown a single stimulus (e.g., a picture of an object) selected from the 1980 Snodgrass and Vanderwart picture set. Then, for each individual, the researchers recorded the EEG measurements, which were sampled at 256 Hz (3.9-msec epoch) for one second from 64 electrodes on that person’s scalp. As a common practice of data reduction, for each electrode, we pool the 256 records to form 64 measurements by taking the average of the original records on four proximal grid points. Likewise, we also pool the 64 electrodes by taking the average on every four proximal electrodes, resulting in 16 combined electrodes. For the control group, we let


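TABLE 1

Rejection proportions (%) calculated for four testing methods at different signal strength levels of δ and sparsity levels of β based on 1000 Monte Carlo runs, where β = 0 corresponds to the null hypothesis and β = 1 to the fully dense alternative, and (n, m, p) = (200, 300, 1000)

Setting I: i.i.d. equal cov

| β | δ=0.1 DCF | δ=0.1 CL | δ=0.1 XL | δ=0.1 CQ | δ=0.15 DCF | δ=0.15 CL | δ=0.15 XL | δ=0.15 CQ | δ=0.2 DCF | δ=0.2 CL | δ=0.2 XL | δ=0.2 CQ | δ=0.25 DCF | δ=0.25 CL | δ=0.25 XL | δ=0.25 CQ | δ=0.3 DCF | δ=0.3 CL | δ=0.3 XL | δ=0.3 CQ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 4.20 | 2.40 | 3.90 | 5.80 | 4.30 | 2.30 | 2.40 | 3.60 | 4.50 | 2.80 | 3.70 | 6.00 | 4.60 | 2.70 | 2.20 | 3.80 | 5.00 | 3.10 | 3.80 | 6.10 |
| 0.02 | 5.00 | 3.20 | 2.50 | 3.40 | 7.50 | 4.80 | 3.70 | 3.50 | 15.4 | 10.5 | 6.50 | 3.90 | 31.7 | 23.3 | 14.6 | 4.40 | 59.0 | 47.9 | 32.6 | 4.90 |
| 0.04 | 5.80 | 3.70 | 2.80 | 3.60 | 10.0 | 6.20 | 4.30 | 3.90 | 20.6 | 14.2 | 8.80 | 4.70 | 40.6 | 30.8 | 20.0 | 5.10 | 72.0 | 58.9 | 41.5 | 5.30 |
| 0.2 | 9.90 | 6.50 | 3.90 | 4.50 | 22.7 | 15.9 | 9.10 | 5.30 | 48.7 | 37.3 | 23.7 | 7.40 | 84.5 | 72.4 | 52.0 | 11.6 | 99.3 | 97.1 | 87.2 | 23.4 |
| 0.4 | 13.9 | 9.40 | 5.30 | 5.20 | 35.3 | 25.4 | 14.4 | 7.80 | 68.8 | 57.1 | 37.9 | 16.5 | 96.8 | 91.1 | 72.7 | 42.5 | 100 | 100 | 97.7 | 96.9 |
| 0.6 | 17.8 | 11.8 | 6.70 | 5.60 | 45.8 | 33.7 | 20.3 | 12.8 | 82.7 | 71.8 | 51.1 | 39.9 | 99.6 | 97.2 | 86.8 | 99.1 | 100 | 100 | 100 | 100 |
| 0.8 | 22.4 | 13.8 | 9.00 | 8.30 | 55.5 | 40.1 | 24.4 | 23.1 | 91.3 | 81.7 | 61.5 | 91.7 | 100 | 99.2 | 95.7 | 100 | 100 | 100 | 100 | 100 |
| 1 | 26.5 | 17.9 | 10.9 | 10.7 | 64.5 | 48.1 | 30.6 | 39.5 | 95.0 | 88.5 | 70.1 | 100 | 100 | 99.6 | 100 | 100 | 100 | 100 | 100 | 100 |

Setting II: i.i.d. unequal cov

| β | δ=0.1 DCF | δ=0.1 CL | δ=0.1 XL | δ=0.1 CQ | δ=0.15 DCF | δ=0.15 CL | δ=0.15 XL | δ=0.15 CQ | δ=0.2 DCF | δ=0.2 CL | δ=0.2 XL | δ=0.2 CQ | δ=0.25 DCF | δ=0.25 CL | δ=0.25 XL | δ=0.25 CQ | δ=0.3 DCF | δ=0.3 CL | δ=0.3 XL | δ=0.3 CQ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 4.90 | 1.80 | 3.70 | 6.10 | 5.20 | 1.30 | 2.20 | 3.80 | 5.00 | 1.60 | 3.60 | 6.00 | 4.80 | 1.20 | 3.50 | 6.30 | 5.00 | 1.90 | 3.90 | 6.20 |
| 0.02 | 4.70 | 1.00 | 2.40 | 3.80 | 6.60 | 1.40 | 2.70 | 4.10 | 10.7 | 2.60 | 2.90 | 4.10 | 19.1 | 6.70 | 4.80 | 4.40 | 33.3 | 14.4 | 8.80 | 4.50 |
| 0.04 | 5.80 | 1.30 | 2.50 | 4.10 | 7.90 | 1.80 | 2.80 | 4.30 | 12.5 | 3.50 | 3.40 | 4.50 | 24.7 | 9.30 | 6.00 | 4.60 | 42.5 | 20.3 | 12.2 | 5.00 |
| 0.2 | 8.10 | 1.90 | 2.70 | 4.60 | 15.0 | 4.40 | 3.80 | 4.90 | 30.9 | 11.2 | 7.20 | 6.40 | 57.6 | 26.5 | 16.3 | 8.40 | 86.8 | 52.1 | 33.9 | 11.8 |
| 0.4 | 10.6 | 2.80 | 3.10 | 5.70 | 22.4 | 7.20 | 5.70 | 6.50 | 47.3 | 19.6 | 11.6 | 10.0 | 78.7 | 43.2 | 26.6 | 19.1 | 97.5 | 74.1 | 53.2 | 45.7 |
| 0.6 | 13.5 | 3.30 | 3.80 | 6.70 | 29.2 | 9.60 | 6.70 | 8.40 | 59.0 | 26.5 | 17.1 | 18.7 | 90.5 | 56.2 | 36.7 | 54.4 | 99.8 | 88.1 | 70.1 | 99.6 |
| 0.8 | 16.4 | 4.60 | 4.50 | 7.40 | 37.4 | 11.9 | 8.60 | 12.6 | 70.9 | 32.9 | 21.4 | 39.6 | 95.6 | 67.0 | 47.0 | 98.9 | 100 | 94.2 | 90.5 | 100 |
| 1 | 19.2 | 5.20 | 5.00 | 8.10 | 43.5 | 14.4 | 10.7 | 18.3 | 79.4 | 39.9 | 28.1 | 79.8 | 98.2 | 76.2 | 67.8 | 100 | 100 | 97.7 | 99.9 | 100 |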


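TABLE 1 (Continued)

Setting III: completely relaxed

| β | δ=0.1 DCF | δ=0.1 CL | δ=0.1 XL | δ=0.1 CQ | δ=0.15 DCF | δ=0.15 CL | δ=0.15 XL | δ=0.15 CQ | δ=0.2 DCF | δ=0.2 CL | δ=0.2 XL | δ=0.2 CQ | δ=0.25 DCF | δ=0.25 CL | δ=0.25 XL | δ=0.25 CQ | δ=0.3 DCF | δ=0.3 CL | δ=0.3 XL | δ=0.3 CQ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 4.70 | 2.00 | 3.90 | 6.30 | 4.50 | 1.70 | 2.30 | 3.50 | 4.80 | 1.90 | 3.70 | 6.10 | 4.60 | 2.20 | 2.80 | 3.90 | 5.10 | 2.10 | 3.80 | 6.20 |
| 0.02 | 4.90 | 2.10 | 3.20 | 4.40 | 6.50 | 2.70 | 3.50 | 5.30 | 9.40 | 4.30 | 4.00 | 5.60 | 13.6 | 7.80 | 6.20 | 5.70 | 24.9 | 12.9 | 10.1 | 5.90 |
| 0.04 | 5.60 | 2.40 | 3.50 | 4.70 | 7.60 | 3.40 | 4.20 | 5.40 | 12.1 | 6.00 | 5.00 | 5.80 | 19.1 | 10.8 | 8.80 | 6.00 | 32.8 | 19.1 | 13.8 | 6.50 |
| 0.2 | 7.50 | 3.80 | 4.30 | 5.80 | 12.1 | 6.00 | 5.60 | 6.60 | 23.9 | 12.5 | 8.90 | 7.50 | 44.2 | 26.3 | 16.6 | 9.30 | 71.6 | 50.2 | 32.1 | 14.1 |
| 0.4 | 9.40 | 3.90 | 4.50 | 6.30 | 18.4 | 9.00 | 8.00 | 7.60 | 35.8 | 19.9 | 12.7 | 11.7 | 62.3 | 40.8 | 26.4 | 18.5 | 89.3 | 69.9 | 48.6 | 31.5 |
| 0.6 | 11.5 | 4.90 | 6.20 | 6.80 | 24.0 | 10.8 | 8.90 | 9.50 | 48.0 | 28.2 | 18.2 | 17.8 | 76.8 | 55.3 | 37.0 | 35.7 | 96.5 | 83.8 | 64.6 | 83.1 |
| 0.8 | 13.6 | 6.40 | 6.60 | 7.00 | 30.3 | 13.5 | 11.7 | 12.7 | 57.3 | 36.4 | 23.4 | 28.5 | 86.7 | 65.0 | 45.1 | 81.2 | 98.5 | 91.6 | 77.4 | 100 |
| 0.83 | 14.3 | 7.10 | 6.80 | 7.50 | 31.0 | 14.6 | 11.8 | 13.1 | 58.0 | 37.6 | 23.9 | 30.8 | 87.6 | 66.1 | 46.1 | 88.0 | 98.9 | 92.6 | 79.2 | 100 |
| 1 | 16.6 | 8.50 | 7.40 | 8.00 | 35.0 | 17.2 | 13.9 | 17.3 | 65.6 | 42.8 | 28.3 | 48.2 | 90.8 | 75.7 | 56.0 | 99.9 | 99.2 | 95.5 | 95.7 | 100 |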


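TABLE 2

Rejection proportions (%) calculated for four testing methods at different signal strength levels of δ and sparsity levels of β based on 1000 Monte Carlo runs, where β = 0 corresponds to the null hypothesis and β = 1 to the fully dense alternative, (n, m, p) = (100, 400, 1000) for Setting IV, and (n, m, p) = (200, 300, 1000) for Settings V and VI

Setting IV: completely relaxed and highly unequal sample sizes

| β | δ=0.1 DCF | δ=0.1 CL | δ=0.1 XL | δ=0.1 CQ | δ=0.15 DCF | δ=0.15 CL | δ=0.15 XL | δ=0.15 CQ | δ=0.2 DCF | δ=0.2 CL | δ=0.2 XL | δ=0.2 CQ | δ=0.25 DCF | δ=0.25 CL | δ=0.25 XL | δ=0.25 CQ | δ=0.3 DCF | δ=0.3 CL | δ=0.3 XL | δ=0.3 CQ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 4.70 | 0.800 | 3.90 | 6.80 | 4.90 | 0.900 | 3.80 | 6.30 | 5.20 | 0.700 | 3.90 | 6.10 | 4.50 | 0.600 | 3.50 | 6.00 | 4.90 | 0.500 | 3.40 | 6.10 |
| 0.02 | 5.20 | 1.10 | 2.90 | 4.70 | 5.90 | 1.00 | 3.60 | 5.60 | 6.70 | 1.40 | 4.60 | 5.80 | 8.90 | 2.40 | 5.00 | 5.80 | 13.2 | 4.20 | 6.20 | 5.90 |
| 0.04 | 5.40 | 1.20 | 3.00 | 4.80 | 6.30 | 1.30 | 4.50 | 5.70 | 7.80 | 1.90 | 5.00 | 6.00 | 11.2 | 3.30 | 5.60 | 6.10 | 17.6 | 5.70 | 7.10 | 6.20 |
| 0.2 | 6.60 | 1.30 | 3.30 | 5.40 | 9.20 | 2.20 | 5.10 | 5.80 | 14.9 | 3.90 | 5.70 | 6.20 | 25.3 | 8.70 | 7.00 | 7.50 | 42.8 | 16.5 | 11.8 | 8.80 |
| 0.4 | 7.80 | 2.00 | 4.30 | 5.50 | 12.4 | 3.40 | 5.20 | 6.10 | 22.3 | 6.60 | 7.10 | 8.60 | 38.2 | 13.0 | 9.70 | 10.7 | 61.3 | 24.8 | 17.0 | 15.8 |
| 0.6 | 9.10 | 2.40 | 4.60 | 5.80 | 16.1 | 3.80 | 5.50 | 7.90 | 29.5 | 10.0 | 9.20 | 10.8 | 49.9 | 19.3 | 14.3 | 17.6 | 75.3 | 33.7 | 21.9 | 34.2 |
| 0.8 | 10.5 | 2.50 | 4.70 | 6.10 | 19.9 | 5.20 | 6.70 | 9.20 | 36.9 | 12.7 | 10.9 | 14.5 | 60.1 | 24.0 | 19.3 | 32.2 | 84.9 | 46.6 | 33.6 | 78.2 |
| 0.9 | 11.3 | 2.80 | 4.80 | 6.40 | 21.9 | 5.40 | 7.10 | 9.90 | 39.5 | 13.3 | 12.6 | 17.7 | 64.6 | 26.6 | 21.6 | 43.8 | 88.0 | 48.6 | 35.3 | 94.0 |
| 1 | 12.1 | 2.90 | 5.30 | 7.30 | 23.4 | 5.90 | 7.30 | 11.0 | 42.0 | 14.6 | 12.8 | 21.7 | 68.6 | 29.6 | 24.5 | 59.0 | 90.9 | 53.1 | 41.9 | 99.4 |

Setting V: completely relaxed and heavy-tailed

| β | δ=0.1 DCF | δ=0.1 CL | δ=0.1 XL | δ=0.1 CQ | δ=0.15 DCF | δ=0.15 CL | δ=0.15 XL | δ=0.15 CQ | δ=0.2 DCF | δ=0.2 CL | δ=0.2 XL | δ=0.2 CQ | δ=0.25 DCF | δ=0.25 CL | δ=0.25 XL | δ=0.25 CQ | δ=0.3 DCF | δ=0.3 CL | δ=0.3 XL | δ=0.3 CQ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 4.20 | 2.20 | 3.80 | 6.20 | 5.20 | 2.50 | 3.90 | 6.10 | 4.70 | 1.90 | 2.90 | 6.00 | 4.30 | 2.00 | 1.70 | 3.90 | 4.50 | 2.30 | 2.00 | 3.70 |
| 0.02 | 5.50 | 2.10 | 3.70 | 5.40 | 6.40 | 2.50 | 3.90 | 5.50 | 9.50 | 4.40 | 4.60 | 6.10 | 15.3 | 7.40 | 6.30 | 6.10 | 25.5 | 15.0 | 10.3 | 6.20 |
| 0.04 | 6.20 | 2.30 | 3.80 | 5.50 | 7.20 | 3.60 | 4.20 | 6.00 | 12.6 | 6.60 | 5.80 | 6.20 | 18.9 | 9.80 | 7.00 | 6.50 | 33.3 | 20.7 | 13.0 | 7.10 |
| 0.2 | 7.50 | 3.60 | 4.00 | 5.80 | 12.4 | 6.80 | 6.50 | 7.30 | 23.5 | 13.0 | 9.60 | 8.90 | 45.6 | 27.6 | 17.9 | 11.3 | 71.7 | 52.6 | 33.8 | 14.1 |
| 0.4 | 9.50 | 4.20 | 4.40 | 5.90 | 18.1 | 9.00 | 8.30 | 8.90 | 35.9 | 21.3 | 14.0 | 12.7 | 64.4 | 43.2 | 26.9 | 18.5 | 90.3 | 73.4 | 52.0 | 33.7 |
| 0.6 | 11.5 | 5.10 | 4.50 | 6.00 | 23.8 | 12.6 | 10.1 | 11.7 | 46.7 | 29.2 | 19.4 | 17.8 | 77.5 | 55.9 | 37.4 | 38.9 | 97.4 | 86.5 | 65.6 | 88.2 |
| 0.8 | 13.7 | 7.30 | 6.20 | 8.80 | 29.4 | 16.0 | 12.3 | 14.1 | 56.5 | 36.9 | 24.9 | 28.9 | 87.4 | 69.1 | 48.3 | 81.4 | 99.2 | 93.6 | 80.0 | 100 |
| 0.83 | 14.1 | 7.50 | 6.30 | 9.20 | 30.6 | 17.3 | 13.0 | 15.2 | 58.1 | 38.1 | 26.0 | 32.0 | 88.1 | 70.1 | 49.5 | 87.5 | 99.3 | 94.1 | 82.1 | 100 |
| 1 | 16.1 | 8.90 | 7.40 | 9.40 | 34.9 | 18.9 | 15.0 | 17.2 | 64.5 | 44.6 | 30.5 | 52.2 | 91.6 | 75.1 | 56.6 | 99.8 | 99.7 | 96.5 | 96.0 | 100 |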


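TABLE 2 (Continued)

Setting VI: completely relaxed and skewed

| β | δ=0.1 DCF | δ=0.1 CL | δ=0.1 XL | δ=0.1 CQ | δ=0.15 DCF | δ=0.15 CL | δ=0.15 XL | δ=0.15 CQ | δ=0.2 DCF | δ=0.2 CL | δ=0.2 XL | δ=0.2 CQ | δ=0.25 DCF | δ=0.25 CL | δ=0.25 XL | δ=0.25 CQ | δ=0.3 DCF | δ=0.3 CL | δ=0.3 XL | δ=0.3 CQ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 4.20 | 2.10 | 2.40 | 3.60 | 4.90 | 1.40 | 2.70 | 3.80 | 5.00 | 1.60 | 2.50 | 3.90 | 4.90 | 2.40 | 3.70 | 5.80 | 4.70 | 1.90 | 2.70 | 3.90 |
| 0.02 | 4.80 | 1.30 | 2.70 | 4.40 | 6.20 | 1.70 | 3.10 | 4.70 | 7.50 | 2.70 | 3.80 | 4.90 | 12.9 | 5.80 | 5.00 | 5.00 | 24.3 | 11.8 | 8.30 | 5.00 |
| 0.04 | 5.30 | 1.40 | 3.00 | 4.60 | 7.00 | 2.30 | 3.30 | 4.90 | 11.3 | 5.20 | 4.50 | 5.10 | 17.1 | 8.70 | 7.00 | 5.10 | 32.2 | 17.3 | 12.0 | 5.30 |
| 0.2 | 7.40 | 3.00 | 3.30 | 4.80 | 12.8 | 5.80 | 5.00 | 5.80 | 23.0 | 12.9 | 9.20 | 6.40 | 42.4 | 25.6 | 17.7 | 8.40 | 71.3 | 48.6 | 32.5 | 12.4 |
| 0.4 | 9.40 | 4.50 | 4.00 | 5.10 | 18.7 | 9.30 | 6.80 | 7.20 | 37.3 | 21.9 | 13.4 | 10.6 | 62.9 | 43.3 | 28.6 | 17.3 | 89.4 | 70.9 | 51.8 | 30.7 |
| 0.6 | 11.5 | 5.70 | 4.50 | 6.20 | 24.7 | 12.3 | 9.60 | 9.50 | 48.1 | 29.8 | 18.1 | 16.5 | 75.7 | 55.0 | 37.6 | 34.8 | 95.9 | 83.7 | 64.5 | 86.4 |
| 0.8 | 14.2 | 6.30 | 5.80 | 6.60 | 30.5 | 14.9 | 10.5 | 12.5 | 58.0 | 37.6 | 23.4 | 27.1 | 86.7 | 65.4 | 44.9 | 80.2 | 98.7 | 92.0 | 77.5 | 100 |
| 0.83 | 14.3 | 7.50 | 6.30 | 6.70 | 31.6 | 15.3 | 10.8 | 13.2 | 60.1 | 39.3 | 24.2 | 29.8 | 87.9 | 66.5 | 46.2 | 87.4 | 98.9 | 92.8 | 81.0 | 100 |
| 1 | 16.3 | 8.90 | 6.70 | 7.40 | 35.9 | 19.3 | 14.6 | 16.4 | 67.0 | 44.7 | 29.4 | 49.3 | 91.0 | 74.6 | 57.2 | 99.9 | 99.3 | 96.1 | 97.2 | 100 |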


FIG. 1. Shown are the bootstrap-approximated power curve of the DCF test (crosses) and the empirical power curves of four methods: the DCF test (squares), the CL test (downward-pointing triangles), the XL test (circles) and the CQ test (upward-pointing triangles), based on 1000 Monte Carlo runs under Settings I–VII across different signal levels of δ and sparsity levels of β.

$\mu_{c,j} = (\mu_{c,j,1},\dots,\mu_{c,j,64})' \in R^{64}$ be the common mean vector of the EEG measurements on the $j$th electrode for $j = 1,\dots,16$. For convenience, we write $\mu_c = (\mu_{c,1}',\dots,\mu_{c,16}')' \in R^p$ with $p = 64 \times 16 = 1024$, which is much larger than n and m. Similarly, for the alcoholic group, let $\mu_{a,j} = (\mu_{a,j,1},\dots,\mu_{a,j,64})' \in R^{64}$ be the common mean vector of EEG measurements on the $j$th electrode for $j = 1,\dots,16$, and denote $\mu_a = (\mu_{a,1}',\dots,\mu_{a,16}')' \in R^p$. We are interested in the hypothesis test

$$H_0 : \mu_c = \mu_a \quad \text{versus} \quad H_a : \mu_c \ne \mu_a$$

to determine whether there is any difference in means of EEG between the two groups. We first carry out the DCF, CL, XL and CQ tests, whose p-values are 0.006, 0.1708, 0.093 and 0.0955, respectively, as shown in Table 3. In the literature, [13] provided evidence for a mean difference between the two groups; the proposed DCF test indeed detected the difference with statistical significance, while the other tests failed to.
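The data reduction described above (256 time points pooled to 64, and 64 electrodes pooled to 16, giving p = 1024 per subject) can be sketched as block averaging. This is only an illustration: the function name is hypothetical, and "proximal" is assumed here to mean consecutive indices, whereas the actual grouping of electrodes depends on their layout on the scalp.

```python
import numpy as np

def pool_subject(eeg, time_block=4, electrode_block=4):
    """Average an (electrodes, time) array over non-overlapping blocks on both axes.

    Assumption: neighbouring rows/columns are the "proximal" electrodes/grid points.
    """
    n_elec, n_time = eeg.shape
    pooled = eeg.reshape(n_elec // electrode_block, electrode_block,
                         n_time // time_block, time_block).mean(axis=(1, 3))
    # Flatten electrode-by-electrode, matching mu = (mu_1', ..., mu_16')'.
    return pooled.reshape(-1)
```

For a 64 × 256 input the result has length 16 × 64 = 1024, and stacking the vectors of all subjects in a group gives that group's data matrix.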

For further verification, we carry out random bootstrap with replacement separately within each sample, and repeat this 500 times. The rejection proportions for the four tests over the


TABLE 3

Shown are the results of four tests based on the original dataset, the bootstrapped samples and the random permutations

p-values of the four tests based on the dataset

| Test | DCF | CL | XL | CQ |
|---|---|---|---|---|
| p-value | 0.006 | 0.1708 | 0.093 | 0.0955 |

Rejection proportions (%) of the four tests over 500 bootstrapped datasets

| Test | DCF | CL | XL | CQ |
|---|---|---|---|---|
| Rejection proportion | 82 | 65.8 | 65 | 58 |

Rejection proportions (%) of the four tests over 500 random permutations

| Test | DCF | CL | XL | CQ |
|---|---|---|---|---|
| Rejection proportion | 4.6 | 1.8 | 3.4 | 7.4 |

500 bootstrapped datasets are given in Table 3, which shows that the highest rejection pro-

portion among the four tests is achieved by DCF at 82%. This is in line with the smallest

and significant p-value given by the DCF test based on the dataset itself. We also perform

500 random permutations of the whole dataset (i.e., mixing up the two groups, which eliminates the group difference) and conduct the four tests over each permuted dataset. From Table 3, we see that the rejection proportion of the DCF test (0.046) is close to the nominal level α = 0.05, while those of the other tests differ considerably.
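The permutation check above can be sketched in code. The block below is a hedged illustration rather than the authors' implementation: the test statistic and the Gaussian multiplier bootstrap are assumed to take the sup-norm forms that appear in the proofs in the Appendix, and the function names and default arguments are hypothetical.

```python
import numpy as np

def dcf_test(X, Y, alpha=0.05, n_boot=1000, rng=None):
    """Sketch of a sup-norm two-sample mean test with a multiplier bootstrap.

    Assumed forms (not restated in this section):
      T = || S_n^X - n^{1/2} m^{-1/2} S_m^Y ||_inf with S_n^X = n^{-1/2} sum_i X_i,
      bootstrap draws use e_i (X_i - Xbar) with e_i ~ N(0, 1), likewise for Y.
    """
    rng = np.random.default_rng() if rng is None else rng
    n, m = X.shape[0], Y.shape[0]
    # The statistic simplifies to n^{1/2} * max_j |Xbar_j - Ybar_j|.
    stat = np.sqrt(n) * np.max(np.abs(X.mean(axis=0) - Y.mean(axis=0)))
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    eX = rng.standard_normal((n_boot, n))
    eY = rng.standard_normal((n_boot, m))
    # Row b holds S_n^{eX} - n^{1/2} m^{-1/2} S_m^{eY} for bootstrap draw b.
    diff = eX @ Xc / np.sqrt(n) - np.sqrt(n / m) * (eY @ Yc) / np.sqrt(m)
    boot = np.max(np.abs(diff), axis=1)
    crit = np.quantile(boot, 1.0 - alpha)  # bootstrap critical value
    return stat, crit, bool(stat >= crit)

def permutation_rejection_rate(X, Y, n_perm=500, alpha=0.05, n_boot=500, seed=0):
    """Share of rejections after randomly reassigning group labels."""
    rng = np.random.default_rng(seed)
    pooled = np.vstack([X, Y])
    n = X.shape[0]
    rejected = 0
    for _ in range(n_perm):
        idx = rng.permutation(pooled.shape[0])
        _, _, reject = dcf_test(pooled[idx[:n]], pooled[idx[n:]], alpha, n_boot, rng)
        rejected += reject
    return rejected / n_perm
```

Since permuting the labels removes any group difference, a well-calibrated test should return a rate near the nominal level α in this check.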

APPENDIX

We first present some auxiliary lemmas that are key for deriving the main theorems. To introduce Lemma 1, for any $\beta > 0$ and $y \in R^p$, we define a function $F_\beta(w)$ as

$$F_\beta(w) = \beta^{-1}\log\Big[\sum_{j=1}^{p}\exp\{\beta(w_j - y_j)\}\Big], \quad w \in R^p,$$

which satisfies the property

$$0 \le F_\beta(w) - \max_{1\le j\le p}(w_j - y_j) \le \beta^{-1}\log p,$$

for every $w \in R^p$ by (1) in [8]. In addition, we let $\varphi_0 : R \to [0, 1]$ be a real valued function such that $\varphi_0$ is thrice continuously differentiable and $\varphi_0(z) = 1$ for $z \le 0$ and $\varphi_0(z) = 0$ for $z \ge 1$. For any $\phi \ge 1$, define a function $\varphi(z) = \varphi_0(\phi z)$, $z \in R$. Then, for any $\phi \ge 1$ and $y \in R^p$, denote $\beta = \phi\log p$ and define a function $\kappa : R^p \to [0, 1]$ as

$$\kappa(w) = \varphi_0\big(\phi F_{\phi\log p}(w)\big) = \varphi\big(F_\beta(w)\big), \quad w \in R^p. \tag{9}$$

Lemma 1 is devoted to characterizing the properties of the function κ defined in (9); see also Lemmas A.5 and A.6 in [7].

LEMMA 1. For any $\phi \ge 1$ and $y \in R^p$, we denote $\beta = \phi\log p$, then the function κ defined in (9) has the following properties, where $\kappa_{jkl}$ denotes $\partial_j\partial_k\partial_l\kappa$. For any $j, k, l = 1,\dots,p$, there exists a nonnegative function $Q_{jkl}$ such that:

(1) $|\kappa_{jkl}(w)| \le Q_{jkl}(w)$ for all $w \in R^p$,

(2) $\sum_{j=1}^{p}\sum_{k=1}^{p}\sum_{l=1}^{p} Q_{jkl}(w) \lesssim (\phi^3 + \phi^2\beta + \phi\beta^2) \lesssim \phi\beta^2$ for all $w \in R^p$,

(3) $Q_{jkl}(w) \lesssim Q_{jkl}(w + \tilde{w}) \lesssim Q_{jkl}(w)$ for all $w \in R^p$ and $\tilde{w} \in \{w^* \in R^p : \max_{1\le j\le p}|w^*_j|\beta \le 1\}$.
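As a quick numerical illustration of the smooth-max function $F_\beta$ used to build κ, the sketch below evaluates it in a numerically stable way and checks the sandwich bound $0 \le F_\beta(w) - \max_j (w_j - y_j) \le \beta^{-1}\log p$. The function name is hypothetical; note that with $\beta = \phi\log p$ the upper bound equals $1/\phi$.

```python
import numpy as np

def smooth_max(w, y, beta):
    """F_beta(w) = beta^{-1} log sum_j exp(beta (w_j - y_j)), computed stably."""
    d = beta * (np.asarray(w, dtype=float) - np.asarray(y, dtype=float))
    shift = d.max()  # subtract the maximum before exponentiating to avoid overflow
    return (shift + np.log(np.exp(d - shift).sum())) / beta

rng = np.random.default_rng(0)
p, phi = 1000, 2.0
beta = phi * np.log(p)
w, y = rng.standard_normal(p), rng.standard_normal(p)
gap = smooth_max(w, y, beta) - np.max(w - y)
print(gap, 1.0 / phi)  # the gap lies between 0 and log(p) / beta = 1 / phi
```

A larger φ tightens the approximation to the maximum, at the price of larger derivative bounds in Lemma 1.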

To state Lemma 2, a two-sample extension of Lemma 5.1 in [9], for any sequence of constants $\delta_{n,m}$ that depends on both n and m, we denote the quantity $\rho_{n,m}$ by

$$\begin{aligned}
\rho_{n,m} = \sup_{v\in[0,1]}\sup_{y\in R^p}\Big|\, & P\big(v^{1/2}(S_n^X - n^{1/2}\mu^X + \delta_{n,m}S_m^Y - \delta_{n,m}m^{1/2}\mu^Y) \\
& \quad + (1-v)^{1/2}(S_n^F - n^{1/2}\mu^X + \delta_{n,m}S_m^G - \delta_{n,m}m^{1/2}\mu^Y) \le y\big) \\
& - P\big(S_n^F - n^{1/2}\mu^X + \delta_{n,m}S_m^G - \delta_{n,m}m^{1/2}\mu^Y \le y\big)\Big|.
\end{aligned} \tag{10}$$

Lemma 2 provides a bound on $\rho_{n,m}$ under some general conditions.

LEMMA 2. For any $\phi_1, \phi_2 \ge 1$ and any sequence of constants $\delta_{n,m}$, assume the following condition (a) holds,

(a) There exists a universal constant $b > 0$ such that

$$\min_{1\le j\le p} E\big[(S_{nj}^X - n^{1/2}\mu_j^X + \delta_{n,m}S_{mj}^Y - \delta_{n,m}m^{1/2}\mu_j^Y)^2\big] \ge b.$$

Then we have

$$\begin{aligned}
\rho_{n,m} \lesssim{} & n^{-1/2}\phi_1^2(\log p)^2\big\{\phi_1 L_n^X\rho_{n,m} + L_n^X(\log p)^{1/2} + \phi_1 M_n(\phi_1)\big\} \\
& + m^{-1/2}\phi_2^2(\log p)^2|\delta_{n,m}|^3\big\{\phi_2 L_m^Y\rho_{n,m} + L_m^Y(\log p)^{1/2} + \phi_2 M_m^*(\phi_2)\big\} \\
& + (\min\{\phi_1,\phi_2\})^{-1}(\log p)^{1/2},
\end{aligned}$$

up to a positive universal constant that depends only on b, where $\rho_{n,m}$ is defined in (10).

To state Lemma 3, which is a two-sample version of Corollary 5.1 in [9], for any sequence of constants $\delta_{n,m}$ that depends on both n and m, we denote the quantity $\rho^*_{n,m}$ by

$$\begin{aligned}
\rho^*_{n,m} = \sup_{v\in[0,1]}\sup_{A\in\mathcal{A}^{\mathrm{Re}}}\Big|\, & P\big(v^{1/2}(S_n^X - n^{1/2}\mu^X + \delta_{n,m}S_m^Y - \delta_{n,m}m^{1/2}\mu^Y) \\
& \quad + (1-v)^{1/2}(S_n^F - n^{1/2}\mu^X + \delta_{n,m}S_m^G - \delta_{n,m}m^{1/2}\mu^Y) \in A\big) \\
& - P\big(S_n^F - n^{1/2}\mu^X + \delta_{n,m}S_m^G - \delta_{n,m}m^{1/2}\mu^Y \in A\big)\Big|,
\end{aligned} \tag{11}$$

which has a similar form to the key quantity $\rho^{**}_{n,m}$ in Theorems 1 and 2. Lemma 3 gives a bound on $\rho^*_{n,m}$ under some general conditions, and it is important for deriving Lemma 4 and Theorem 1.

LEMMA 3. For any $\phi_1, \phi_2 \ge 1$ and any sequence of constants $\delta_{n,m}$, assume the following condition (a) holds,

(a) There exists a universal constant $b > 0$ such that

$$\min_{1\le j\le p} E\big[(S_{nj}^X - n^{1/2}\mu_j^X + \delta_{n,m}S_{mj}^Y - \delta_{n,m}m^{1/2}\mu_j^Y)^2\big] \ge b.$$

Then we have

$$\begin{aligned}
\rho^*_{n,m} \le K^*\Big[\, & n^{-1/2}\phi_1^2(\log p)^2\big\{\phi_1 L_n^X\rho^*_{n,m} + L_n^X(\log p)^{1/2} + \phi_1 M_n(\phi_1)\big\} \\
& + m^{-1/2}\phi_2^2(\log p)^2|\delta_{n,m}|^3\big\{\phi_2 L_m^Y\rho^*_{n,m} + L_m^Y(\log p)^{1/2} + \phi_2 M_m^*(\phi_2)\big\} \\
& + (\min\{\phi_1,\phi_2\})^{-1}(\log p)^{1/2}\Big],
\end{aligned}$$

up to a universal constant $K^* > 0$ that depends only on b, where $\rho^*_{n,m}$ is defined in (11).

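Before stating the next lemma, for any $\phi \ge 1$, we denote $M_n(\phi) = M_n^X(\phi) + M_n^F(\phi)$, where $M_n^X(\phi)$ and $M_n^F(\phi)$ are given as follows, respectively,

$$n^{-1}\sum_{i=1}^{n} E\Big[\max_{1\le j\le p}\big|X_{ij} - \mu_j^X\big|^3\, 1\Big\{\max_{1\le j\le p}\big|X_{ij} - \mu_j^X\big| > n^{1/2}/(4\phi\log p)\Big\}\Big],$$

$$n^{-1}\sum_{i=1}^{n} E\Big[\max_{1\le j\le p}\big|F_{ij} - \mu_j^F\big|^3\, 1\Big\{\max_{1\le j\le p}\big|F_{ij} - \mu_j^F\big| > n^{1/2}/(4\phi\log p)\Big\}\Big],$$

similar to those adopted in [9]. Likewise, for any $\phi \ge 1$ and any sequence of constants $\delta_{n,m}$ that depends on both n and m, we denote $M_m^*(\phi) = M_m^Y(\phi) + M_m^G(\phi)$ with $M_m^Y(\phi)$ and $M_m^G(\phi)$ as follows, respectively,

$$m^{-1}\sum_{i=1}^{m} E\Big[\max_{1\le j\le p}\big|Y_{ij} - \mu_j^Y\big|^3\, 1\Big\{\max_{1\le j\le p}\big|Y_{ij} - \mu_j^Y\big| > m^{1/2}/(4|\delta_{n,m}|\phi\log p)\Big\}\Big],$$

$$m^{-1}\sum_{i=1}^{m} E\Big[\max_{1\le j\le p}\big|G_{ij} - \mu_j^G\big|^3\, 1\Big\{\max_{1\le j\le p}\big|G_{ij} - \mu_j^G\big| > m^{1/2}/(4|\delta_{n,m}|\phi\log p)\Big\}\Big].$$

Recalling the definition of $\rho^{**}_{n,m}$ in (2), Lemma 4 gives an abstract upper bound on $\rho^{**}_{n,m}$ under mild conditions as follows.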

LEMMA 4. For any sequence of constants $\delta_{n,m}$, assume we have the following conditions (a)–(b):

(a) There exists a universal constant $b > 0$ such that

$$\min_{1\le j\le p} E\big[(S_{nj}^X - n^{1/2}\mu_j^X + \delta_{n,m}S_{mj}^Y - \delta_{n,m}m^{1/2}\mu_j^Y)^2\big] \ge b.$$

(b) There exist two sequences of constants $\bar{L}^*_n$ and $\bar{L}^{**}_m$ such that we have $\bar{L}^*_n \ge L_n^X$ and $\bar{L}^{**}_m \ge L_m^Y$, respectively. Moreover, we also have

$$\phi^*_n = K_1\big\{(\bar{L}^*_n)^2(\log p)^4/n\big\}^{-1/6} \ge 2,$$

$$\phi^{**}_m = K_1\big\{(\bar{L}^{**}_m)^2(\log p)^4|\delta_{n,m}|^6/m\big\}^{-1/6} \ge 2,$$

for a universal constant $K_1 \in (0, (K^* \vee 2)^{-1}]$, where the positive constant $K^*$, which depends only on b, is as defined in Lemma 3.

Then we have the following property, where $\rho^{**}_{n,m}$ is defined in (2),

$$\rho^{**}_{n,m} \le K_2\Big[\big\{(\bar{L}^*_n)^2(\log p)^7/n\big\}^{1/6} + M_n(\phi^*_n)/\bar{L}^*_n + \big\{(\bar{L}^{**}_m)^2(\log p)^7|\delta_{n,m}|^6/m\big\}^{1/6} + M_m^*(\phi^{**}_m)/\bar{L}^{**}_m\Big],$$

for a universal constant $K_2 > 0$ that depends only on b.


To introduce Lemma 5, for any sequence of constants $\delta_{n,m}$ that depends on both n and m, denote a useful quantity $\hat{\Delta}_{n,m} = \|\hat{\Sigma}^X - \Sigma^X + \delta_{n,m}^2(\hat{\Sigma}^Y - \Sigma^Y)\|_\infty$. Lemma 5 below gives an abstract upper bound on $\rho^{MB}_{n,m}$ defined in (4).

LEMMA 5. For any sequence of constants $\delta_{n,m}$, assume we have the following condition (a):

(a) There exists a universal constant $b > 0$ such that

$$\min_{1\le j\le p} E\big[(S_{nj}^X - n^{1/2}\mu_j^X + \delta_{n,m}S_{mj}^Y - \delta_{n,m}m^{1/2}\mu_j^Y)^2\big] \ge b.$$

Then for any sequence of constants $\bar{\Delta}_{n,m} > 0$, on the event $\{\hat{\Delta}_{n,m} \le \bar{\Delta}_{n,m}\}$, we have the following property, where $\rho^{MB}_{n,m}$ is defined in (4),

$$\rho^{MB}_{n,m} \lesssim (\bar{\Delta}_{n,m})^{1/3}(\log p)^{2/3}.$$

Lastly, we present a two-sample Borel–Cantelli lemma in Lemma 6.

LEMMA 6. Let $\{A_{n,m} : n \ge 1, m \ge 1, (n,m) \in \mathcal{A}\}$ be a sequence of events in the sample space $\Omega$, where $\mathcal{A}$ is the set of all possible combinations $(n, m)$, which has the form $\mathcal{A} = \{(n, m) : n \ge 1, m \in \sigma(n)\}$ where $\sigma(n)$ is a set of positive integers determined by n, possibly the empty set. Assume the following condition (a):

(a) $\sum_{n=1}^{\infty}\sum_{m\in\sigma(n)} P(A_{n,m}) < \infty$.

Then we have the following property:

$$P\Big(\bigcap_{k_1=1}^{\infty}\bigcap_{k_2=1}^{\infty}\bigcup_{n=k_1}^{\infty}\bigcup_{m\in(k_2)\cap\sigma(n)} A_{n,m}\Big) = 0,$$

where $(k_2) = \{k : k \in Z, k \ge k_2\}$.

Note that if $m \in \sigma(n) = \emptyset$, we just delete the roles of those $A_{n,m}$ and $A^c_{n,m}$ during any operations such as union and intersection, and the same applies to $P(A_{n,m})$ and $P(A^c_{n,m})$ during summation and deduction.

Before proceeding, we mention that the derivations of Theorems 1–2 essentially follow those of their counterparts in [9], but need more technicality to employ the aforesaid Lemmas 4–5 to address the challenge arising from unequal sample sizes. The derivation of Corollary 1 is based on Theorem 1 as well as a two-sample Borel–Cantelli lemma (Lemma 6) that, as far as we know, first appears in this work.

Theorems 3–5 regarding the DCF test are newly developed, and no comparable results are present in the literature. Thus we present the proofs of Theorems 3–5 below, while the proofs of Theorems 1–2, Corollary 1 and the auxiliary lemmas are relegated to an online Supplementary Material for space economy.

PROOF OF THEOREM 3. First of all, we define a sequence of constants $\delta_{n,m}$ by

$$\delta_{n,m} = -n^{1/2}m^{-1/2}. \tag{12}$$

Together with condition (a), it can be deduced that

$$\delta_2 < |\delta_{n,m}| < \delta_1, \tag{13}$$

with $\delta_1 = \{c_2/(1 - c_2)\}^{1/2} > 0$ and $\delta_2 = \{c_1/(1 - c_1)\}^{1/2} > 0$. Moreover, by combining (12), (13) with condition (b), we have

$$\min_{1\le j\le p} E\big[(S_{nj}^X - n^{1/2}\mu_j^X + \delta_{n,m}S_{mj}^Y - \delta_{n,m}m^{1/2}\mu_j^Y)^2\big] \ge \min\{1, \delta_2^2\}\, b. \tag{14}$$

In addition, based on condition (a) and condition (e), one has

$$B_{n,m}^2\log^7(pm)/m \sim B_{n,m}^2\log^7(pn)/n \to 0. \tag{15}$$

To this end, by combining (12), (13), (14), (15), condition (c), condition (d) with Theorem 1, it can be shown that

$$\begin{aligned}
\sup_{t\ge 0}\Big|\, & P\big(\|S_n^X - n^{1/2}m^{-1/2}S_m^Y - n^{1/2}(\mu^X - \mu^Y)\|_\infty \le t\big) \\
& - P\big(\|S_n^F - n^{1/2}m^{-1/2}S_m^G - n^{1/2}(\mu^X - \mu^Y)\|_\infty \le t\big)\Big| \\
& \le \rho^{**}_{n,m} \lesssim \big\{B_{n,m}^2\log^7(pn)/n\big\}^{1/6}.
\end{aligned} \tag{16}$$

Next, we denote a sequence of constants $\alpha_{n,m}$ by

$$\alpha_{n,m} = (pn)^{-1}, \tag{17}$$

and it is obvious that

$$\alpha_{n,m} \in (0, e^{-1}). \tag{18}$$

Moreover, by combining condition (a), condition (e) with (17), we conclude that

$$B_{n,m}^2\log^5(pm)\log^2(1/\alpha_{n,m})/m \sim B_{n,m}^2\log^5(pn)\log^2(1/\alpha_{n,m})/n \to 0. \tag{19}$$

To this end, by combining (12), (13), (14), (17), (18), (19), condition (c), condition (d) with Theorem 2, it follows that there exists a universal constant $c^* > 0$ such that with probability at least $1 - \gamma_{n,m}$, we have $\rho^{MB}_{n,m} \lesssim \{B_{n,m}^2\log^7(pn)/n\}^{1/6}$, where

$$\begin{aligned}
\gamma_{n,m} ={} & (\alpha_{n,m})^{\log(pn)/3} + 3(\alpha_{n,m})^{\log^{1/2}(pn)/c^*} + (\alpha_{n,m})^{\log(pm)/3} + 3(\alpha_{n,m})^{\log^{1/2}(pm)/c^*} \\
& + (\alpha_{n,m})^{\log^3(pn)/6} + 3(\alpha_{n,m})^{\log^3(pn)/c^*} + (\alpha_{n,m})^{\log^3(pm)/6} + 3(\alpha_{n,m})^{\log^3(pm)/c^*}.
\end{aligned}$$

Together with (a), (17) and (18), it is not hard to prove that

$$\sum_{n}\sum_{m}\gamma_{n,m} < \infty. \tag{20}$$

Henceforth, by combining (12), (13), (14), (17), (18), (19), (20), condition (c), condition (d) with Corollary 1, we reach a conclusion that with probability one,

$$\begin{aligned}
\sup_{t\ge 0}\Big|\, & P_e\big(\|S_n^{eX} - n^{1/2}m^{-1/2}S_m^{eY}\|_\infty \le t\big) \\
& - P\big(\|S_n^F - n^{1/2}m^{-1/2}S_m^G - n^{1/2}(\mu^X - \mu^Y)\|_\infty \le t\big)\Big| \\
& \le \rho^{MB}_{n,m} \lesssim \big\{B_{n,m}^2\log^7(pn)/n\big\}^{1/6}.
\end{aligned} \tag{21}$$

Finally, according to (16) and (21), the assertion holds trivially.
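Two small steps in the proof above may be worth spelling out. The first is why the choice of $\delta_{n,m}$ in (12) turns the generic quantity of the lemmas into the centered statistic appearing in (16); this is a direct substitution. The second is how (13) would follow if condition (a) has the form $c_1 < n/(n+m) < c_2$ with $0 < c_1 \le c_2 < 1$; that form is an assumption here, since condition (a) is stated in the main text and not in this section.

```latex
% Step 1: substitute \delta_{n,m} = -n^{1/2} m^{-1/2}, so that
% -\delta_{n,m} m^{1/2} \mu^Y = n^{1/2} \mu^Y.
\begin{aligned}
S_n^X - n^{1/2}\mu^X + \delta_{n,m} S_m^Y - \delta_{n,m} m^{1/2}\mu^Y
  &= S_n^X - n^{1/2}\mu^X - n^{1/2}m^{-1/2} S_m^Y + n^{1/2}\mu^Y \\
  &= S_n^X - n^{1/2}m^{-1/2} S_m^Y - n^{1/2}(\mu^X - \mu^Y).
\end{aligned}

% Step 2 (assuming c_1 < r < c_2 with r = n/(n+m)):
% |\delta_{n,m}|^2 = n/m = r/(1-r), and r \mapsto r/(1-r) is increasing on (0,1), hence
\frac{c_1}{1-c_1} < |\delta_{n,m}|^2 < \frac{c_2}{1-c_2}
\quad\Longleftrightarrow\quad
\delta_2 < |\delta_{n,m}| < \delta_1 .
```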


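PROOF OF THEOREM 4. Given any $(\mu^X - \mu^Y)$, we have

$$\begin{aligned}
\mathrm{Power}^*(\mu^X - \mu^Y)
&= P_{e^*}\big(\|S_n^{e^*X} - n^{1/2}m^{-1/2}S_m^{e^*Y} + n^{1/2}(\mu^X - \mu^Y)\|_\infty \ge c_B(\alpha)\big) \\
&= 1 - P_{e^*}\big(\|S_n^{e^*X} - n^{1/2}m^{-1/2}S_m^{e^*Y} + n^{1/2}(\mu^X - \mu^Y)\|_\infty < c_B(\alpha)\big) \\
&= 1 - P_{e^*}\big(-n^{1/2}(\mu^X - \mu^Y) - c_B(\alpha) < S_n^{e^*X} - n^{1/2}m^{-1/2}S_m^{e^*Y} < -n^{1/2}(\mu^X - \mu^Y) + c_B(\alpha)\big)
\end{aligned} \tag{22}$$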

= 1 − P

e

∗

−n

1/2

μ

X

− μ

Y

− c

B

(α) < S

e

∗

X

n

− n

1/2

m

−1/2

S

e

∗

Y

m

<