Multi-Relational Hyperbolic Word Embeddings from Natural Language Definitions

Marco Valentino (1), Danilo S. Carvalho (2, 3), André Freitas (1, 2, 3)

(1) Idiap Research Institute, Switzerland

(2) Department of Computer Science, University of Manchester, UK

(3) National Biomarker Centre, CRUK-MI, University of Manchester, UK

(1) {firstname.lastname}@idiap.ch

(2) {firstname.lastname}@manchester.ac.uk

Abstract

Natural language definitions possess a recursive, self-explanatory semantic structure that can support representation learning methods able to preserve explicit conceptual relations and constraints in the latent space. This paper presents a multi-relational model that explicitly leverages such a structure to derive word embeddings from definitions. By automatically extracting the relations linking defined and defining terms from dictionaries, we demonstrate how the problem of learning word embeddings can be formalised via a translational framework in Hyperbolic space and used as a proxy to capture the global semantic structure of definitions. An extensive empirical analysis demonstrates that the framework can help impose the desired structural constraints while preserving the semantic mapping required for controllable and interpretable traversal. Moreover, the experiments reveal the superiority of the Hyperbolic word embeddings over their Euclidean counterparts and demonstrate that the multi-relational approach can obtain competitive results when compared to state-of-the-art neural models, with the advantage of being intrinsically more efficient and interpretable¹.

1 Introduction

A natural language definition is a statement whose core function is to describe the essential meaning of a word or a concept. As such, extensive collections of definitions (Miller, 1995; Zesch et al., 2008), such as the ones found in dictionaries or technical discourse, are often regarded as rich and reliable sources of information from which to derive textual embeddings (Tsukagoshi et al., 2021; Bosc and Vincent, 2018; Tissier et al., 2017; Noraset et al., 2017; Hill et al., 2016).

A fundamental characteristic of natural language

definitions is that they are widely abundant, pos-

1

Code and data available at:

https://github.

com/neuro-symbolic-ai/multi_relational_

hyperbolic_word_embeddings

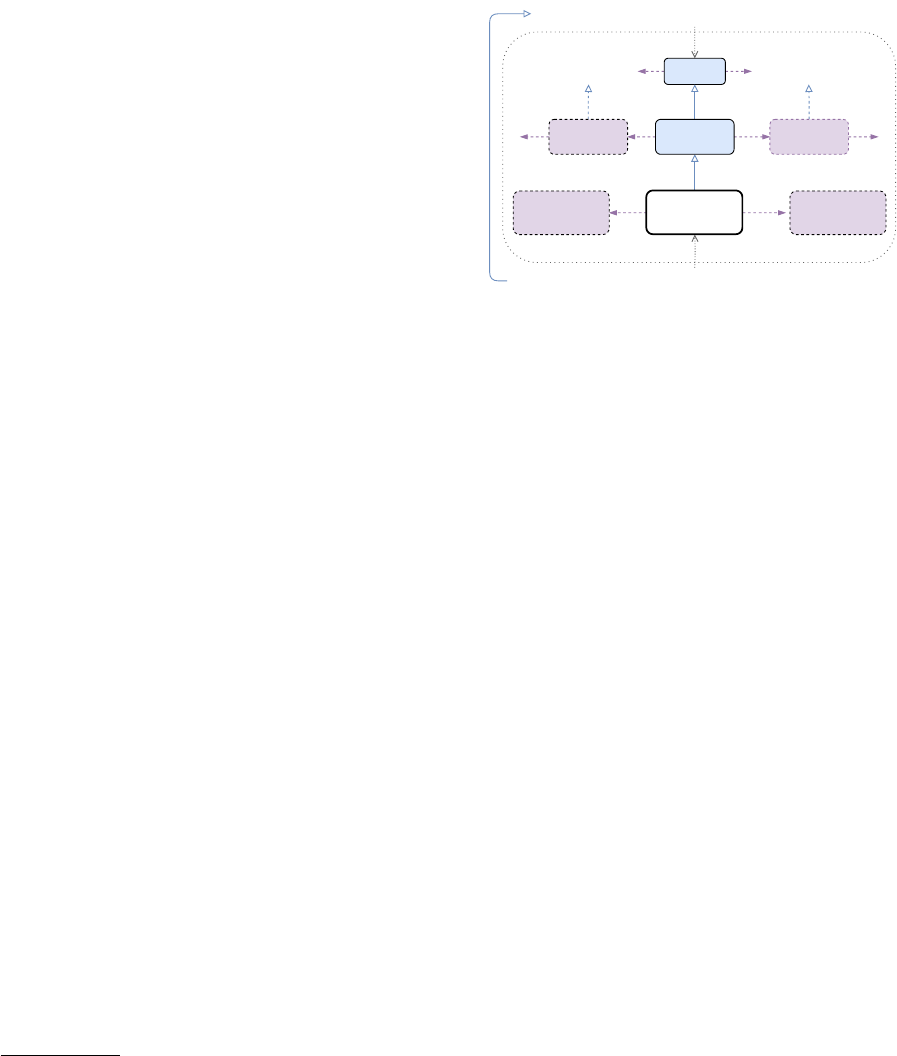

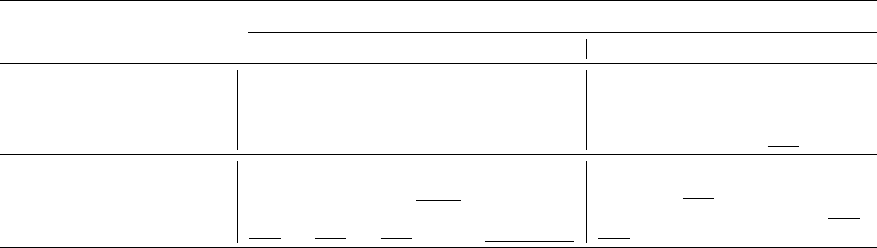

Definition Graph

Line: a set of products or services sold by a business

Set: a matching collection of similar things

Line

Collection

Set

ServiceProduct

Matching Thing

Differentia-Quality Supertype

Figure 1: How can we inject the recursive, hierarchi-

cal structure of natural language definitions into word

embeddings? This paper investigates Hyperbolic man-

ifolds to learn multi-relational representations exclu-

sively from definitions, formalising the problem via a

translational framework to preserve the semantic map-

ping between concepts in latent space.

sessing a recursive, self-explanatory semantic struc-

ture which typically connects the meaning of terms

composing the definition (definiens) to the mean-

ing of the terms being defined (definiendum). This

structure is characterised by a well-defined set of

semantic roles linking the terms through explicit

relations such as subsumption and differentiation

(Silva et al., 2016) (see Figure 1). However, ex-

isting paradigms for extracting embeddings from

natural language definitions rarely rely on such a

structure, often resulting in poor interpretability

and semantic control (Mikolov et al., 2013; Pen-

nington et al., 2014; Reimers and Gurevych, 2019).

This paper investigates new paradigms to overcome these limitations. Specifically, we pose the following research question: “How can we leverage and preserve the explicit semantic structure of natural language definitions for neural-based embeddings?” To answer the question, we explore multi-relational models that can learn to explicitly map definienda, definiens, and their corresponding semantic relations within a continuous vector space. Our aim, in particular, is to build an embedding space that can encode the structural properties of the relevant semantic relations, such as concept hierarchy and differentiation, as a product of geometric constraints and transformations. The multi-relational nature of such embeddings should be intrinsically interpretable and should define the movement within the space in terms of mapped relations and entities. Since Hyperbolic manifolds have been demonstrated to correspond to continuous approximations of recursive and hierarchical structures (Nickel and Kiela, 2017), we hypothesise them to be key to achieving such a goal.

arXiv:2305.07303v5 [cs.CL] 16 Feb 2024

Following these motivations and research hypotheses, we present a multi-relational framework for learning word embeddings exclusively from natural language definitions. Our methodology consists of two main phases. First, we build a specialised semantic role labeller to automatically extract multi-relational triples connecting definienda and definiens. This explicit mapping allows casting the learning problem into a link prediction task, which we formalise via a translational objective (Balazevic et al., 2019; Feng et al., 2016; Bordes et al., 2013). By specialising the translational framework in Hyperbolic space through Poincaré manifolds, we are able to jointly embed entities and semantic relations, imposing the desired structural constraints while preserving the explicit mapping for a controllable traversal of the space.

An extensive empirical evaluation led to the following conclusions:

1. Instantiating the multi-relational framework in Euclidean and Hyperbolic spaces reveals the explicit gains of Hyperbolic manifolds in capturing the global semantic structure of definitions. The Hyperbolic embeddings, in fact, outperform their Euclidean counterparts on the majority of the benchmarks, and are also superior on one-shot generalisation experiments designed to assess the structural organisation and interpretability of the embedding space.

2. A comparison with distributional approaches and previous work based on autoencoders demonstrates the impact of the semantic relations on the quality of the embeddings. The multi-relational model, in fact, outperforms previous approaches with the same dimensions on most benchmarks, while being intrinsically more interpretable and controllable.

3. The multi-relational framework is competitive with state-of-the-art Sentence-Transformers, having the advantage of requiring fewer computational and training resources, and possessing a significantly lower number of dimensions.

4. We conclude by performing a set of qualitative analyses to visualise the interpretable nature of the traversal for such vector spaces. We found that the multi-relational framework enables robust semantic control, clustering the closely defined terms according to the target semantic transformations.

To the best of our knowledge, we are the first to conceptualise and instantiate a multi-relational Hyperbolic framework for representation learning from natural language definitions, opening new research directions for improving the interpretability and structural control of neural embeddings.

2 Background

2.1 Natural Language Definitions

Natural language definitions possess a recursive, self-explanatory semantic structure. Such structure connects the meaning of terms composing the definition (definiens) to the meaning of the terms being defined (definiendum) through a set of semantic roles (see Table 1). These roles describe particular semantic relations between the concepts, such as subsumption and differentiation (Silva et al., 2016). Previous work has shown the possibility of automated categorisation of these semantic roles (Silva et al., 2018a), and leveraging those can lead to models with higher interpretability and better navigation control over the semantic space (Carvalho et al., 2023; Silva et al., 2019, 2018b).

It is important to note that while the definitions are lexically indexed by their respective definienda, the terms they define are concepts, and thus a single lexical item (definiendum) can have multiple definitions. For example, the word “line” has the following two definitions, among others:

“An infinitely extending one-dimensional figure that has no curvature.”

“A set of products or services sold by a business, or by extension, the business itself.”

from which, upon analysis, we can identify the roles of supertype and differentia quality, as follows:

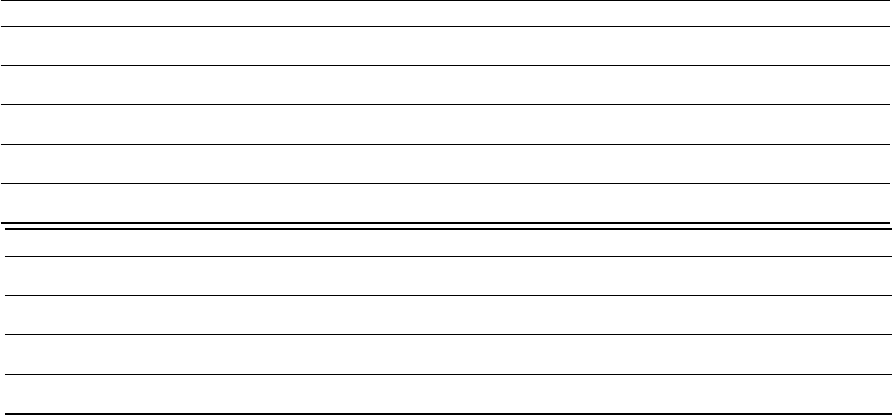

Multi-Relational Word EmbeddingsRelation Extraction

Line: a set of products or services

sold by a business

...

Set: a matching collection of similar

things

line; supertype; set

line; differentia-quality; business

...

set; supertype; collection

set; differentia-quality; things

Definition Semantic Role Labeling (DSRL)

Multi-Relational TriplesCorpus (Dictionary)

(A) (B)

Figure 2: An overview of the multi-relational framework for learning word embeddings from definitions. The

methodology consists of two main phases: (A) building a specialised semantic role labeller (DSRL) for the

annotation of natural language definitions and the extraction of relations from large dictionaries; (B) formalising

the learning problem as a link prediction task via a translational framework. The translational formulation acts as

a proxy for minimising the distance between words that are connected in the definitions (e.g., line and set) while

preserving the semantic relations for interpretable and controllable traversal of the space.

“An

infinitely extending one-dimensional

figure

that has no curvature.”

“A set

of products or services sold by a business

,

or by extension, the business itself.”

A definiendum can then be identified by the interpretation of its associated terms, categorised according to their semantic roles within the definition. A line which has “figure” as supertype is thus a different concept from a line which has “set” as supertype. The same applies to the other aforementioned roles: a line with supertype “set” distinguished by the differentia quality “product” is different from a line distinguished by “point” in the same role. This is a recursive process, as each term in a definition also represents a concept, which may itself be defined in the dictionary. This entails a hierarchical and multi-relational structure linking the terms in the definiendum and in the definiens.
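The recursive structure described above can be pictured as a graph in which each sense of a definiendum points to role-labelled terms that may themselves be defined. The sketch below is only an illustration, assuming a hypothetical toy dictionary built from the “line” and “set” examples; the data structure and the helper `supertype_chain` are ours, not part of the paper's code. It shows that the two senses of “line” lead to different supertype hierarchies.

```python
from typing import Dict, List, Tuple

# A sense is the list of (semantic role, term) pairs found in one definition.
Sense = List[Tuple[str, str]]

# Hypothetical toy dictionary: a definiendum maps to one entry per definition.
TOY_DICTIONARY: Dict[str, List[Sense]] = {
    "line": [
        [("supertype", "figure")],  # "An infinitely extending one-dimensional figure ..."
        [("supertype", "set"), ("differentia-quality", "products")],  # "A set of products ..."
    ],
    "set": [[("supertype", "collection"), ("differentia-quality", "things")]],
}


def supertype_chain(word: str, sense: int = 0) -> List[str]:
    """Follow supertype links recursively, starting from the chosen sense of `word`.

    Assumption for illustration: after the first hop, the first sense of each
    term is used, and the walk stops at undefined terms or on cycles.
    """
    chain = [word]
    senses = TOY_DICTIONARY.get(word, [])
    current = senses[sense] if sense < len(senses) else []
    while True:
        parents = [term for role, term in current if role == "supertype"]
        if not parents or parents[0] in chain:
            return chain
        chain.append(parents[0])
        next_senses = TOY_DICTIONARY.get(parents[0], [])
        current = next_senses[0] if next_senses else []


print(supertype_chain("line", sense=0))  # ['line', 'figure']
print(supertype_chain("line", sense=1))  # ['line', 'set', 'collection']
```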

2.2 Hyperbolic Embeddings

As the semantic roles induce multiple hierarchical and recursive structures (e.g., the supertype and differentia quality relations), we hypothesise that Hyperbolic geometry can play a crucial role in learning word embeddings from definitions. Previous work, in fact, has demonstrated that recursive and hierarchical structures such as trees can be represented in a continuous space via a d-dimensional Poincaré ball (Nickel and Kiela, 2017; Balazevic et al., 2019).

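A Poincaré ball $(\mathbb{B}^d_c, g^{\mathbb{B}})$ of radius $1/\sqrt{c}$, $c > 0$, is a $d$-dimensional manifold equipped with the Riemannian metric $g^{\mathbb{B}}$. In such a $d$-dimensional space, the distance between two vectors $x, y \in \mathbb{B}^d_c$ can be computed along a geodesic as follows:

$$d_{\mathbb{B}}(x, y) = \frac{2}{\sqrt{c}} \tanh^{-1}\left(\sqrt{c}\,\lVert -x \oplus_c y \rVert\right), \tag{1}$$

where $\lVert\cdot\rVert$ denotes the Euclidean norm and $\oplus_c$ represents Möbius addition (Ungar, 2001):

$$x \oplus_c y = \frac{(1 + 2c\langle x, y\rangle + c\lVert y\rVert^2)\,x + (1 - c\lVert x\rVert^2)\,y}{1 + 2c\langle x, y\rangle + c^2\lVert x\rVert^2\lVert y\rVert^2}, \tag{2}$$

with $\langle\cdot,\cdot\rangle$ representing the Euclidean inner product.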

A crucial feature of Equation 1 is that it allows determining the organisation of hierarchical structures locally, simultaneously capturing the hierarchy of entities (via the norms) and their similarity (via the distances) (Nickel and Kiela, 2017).
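Equations 1 and 2 can be checked numerically. The following is a minimal NumPy sketch (assuming NumPy is available; the function names are ours) of Möbius addition and the Poincaré distance. It also illustrates the remark above that norms encode hierarchy: two pairs of points with the same Euclidean gap are much farther apart near the boundary of the ball than near its origin.

```python
import numpy as np


def mobius_add(x: np.ndarray, y: np.ndarray, c: float = 1.0) -> np.ndarray:
    """Möbius addition x ⊕_c y (Equation 2)."""
    xy = np.dot(x, y)
    x2 = np.dot(x, x)
    y2 = np.dot(y, y)
    numerator = (1 + 2 * c * xy + c * y2) * x + (1 - c * x2) * y
    denominator = 1 + 2 * c * xy + c ** 2 * x2 * y2
    return numerator / denominator


def poincare_distance(x: np.ndarray, y: np.ndarray, c: float = 1.0) -> float:
    """Geodesic distance on the Poincaré ball of radius 1/sqrt(c) (Equation 1)."""
    sqrt_c = np.sqrt(c)
    norm = np.linalg.norm(mobius_add(-x, y, c))
    return float(2 / sqrt_c * np.arctanh(sqrt_c * norm))


# Same Euclidean gap (0.1), different distance from the origin.
near_origin = poincare_distance(np.array([0.0, 0.0]), np.array([0.1, 0.0]))
near_boundary = poincare_distance(np.array([0.85, 0.0]), np.array([0.95, 0.0]))
print(round(near_origin, 3), round(near_boundary, 3))  # 0.201 1.151
```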

Remarkably, subsequent work has shown that this formalism can be extended to multi-relational graph embeddings via a translational framework (Balazevic et al., 2019), parametrising multiple Poincaré balls within the same embedding space (Section 3.2).

3 Methodology

We present a multi-relational model to learn word embeddings exclusively from natural language definitions that can leverage and preserve the semantic relations linking definiendum and definiens.

The methodology consists of two main phases:

(1) building a specialised semantic role labeller

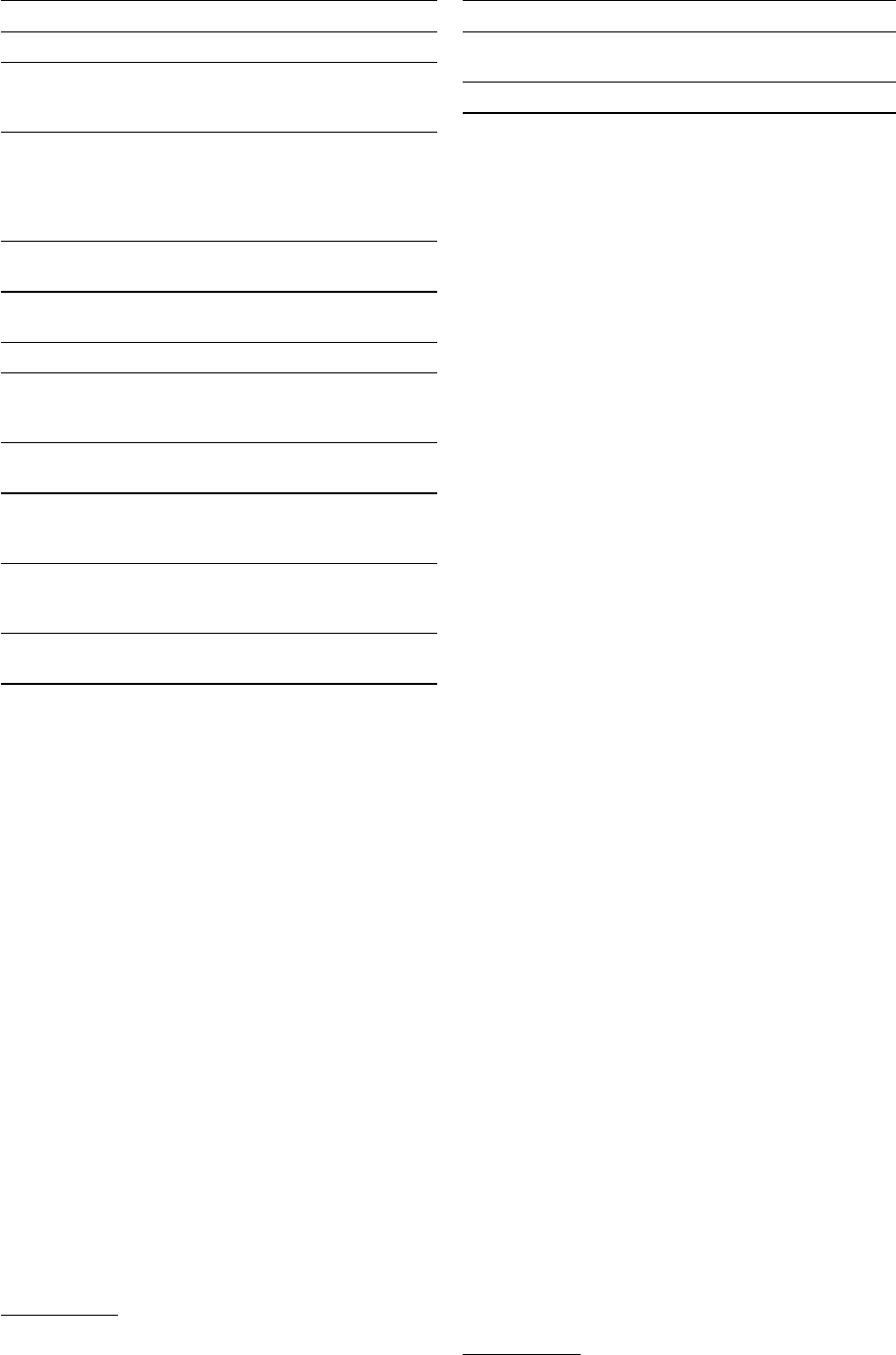

Role Description

Supertype An hypernym for the definiendum.

Differentia Quality

A quality that distinguishes the

definiendum from other concepts un-

der the same supertype.

Differentia Event

an event (action, state or process) in

which the definiendum participates

and is essential to distinguish it from

other concepts under the same super-

type.

Event Location

the location (spatial or abstract) of a

differentia event.

Event Time

the time in which a differentia event

happens.

Origin Location

the definiendum’s location of origin.

Quality Modifier

degree, frequency or manner modi-

fiers that constrain a differentia qual-

ity.

Purpose

the main goal of the definiendum’s

existence or occurrence.

Associated Fact

a fact whose occurrence is/was

linked to the definiendum’s exis-

tence or occurrence.

Accessory Deter-

miner

a determiner expression that doesn’t

constrain the supertype / differentia

scope.

Accessory Quality

a quality that is not essential to char-

acterize the definiendum.

Table 1: The complete set of Definition Semantic Roles

(DSRs) considered in this work.

(DSRL) for the automatic annotation of natural

language definitions from large dictionaries; (2)

formalising the task of learning multi-relational

word embeddings as a link prediction problem via

a translational framework.

3.1 Definition Semantic Roles (DSRs)

Given a natural language definition

D =

{w

1

, . . . , w

n

}

including terms

w

1

, . . . , w

n

and se-

mantic roles

SR = {r

1

, . . . , r

m

}

, we aim to build

a DSRL that assigns one of the semantic roles in

SR

to each term in

D

. To this end, we explore the

fine-tuning of different versions of BERT framing

the task as a token classification problem (Devlin

et al., 2019). To fine-tune the models, we adopt

a publicly available dataset of

≈

4000 definitions

extracted from Wordnet, each manually annotated

with the respective semantic roles

2

(Silva et al.,

2016). Specifically, we annotate the definition sen-

2

https://drive.google.com/drive/

folders/12nJJHo7ryS6gVT-ukE-BsuHvAqPLUh3S?

usp=sharing

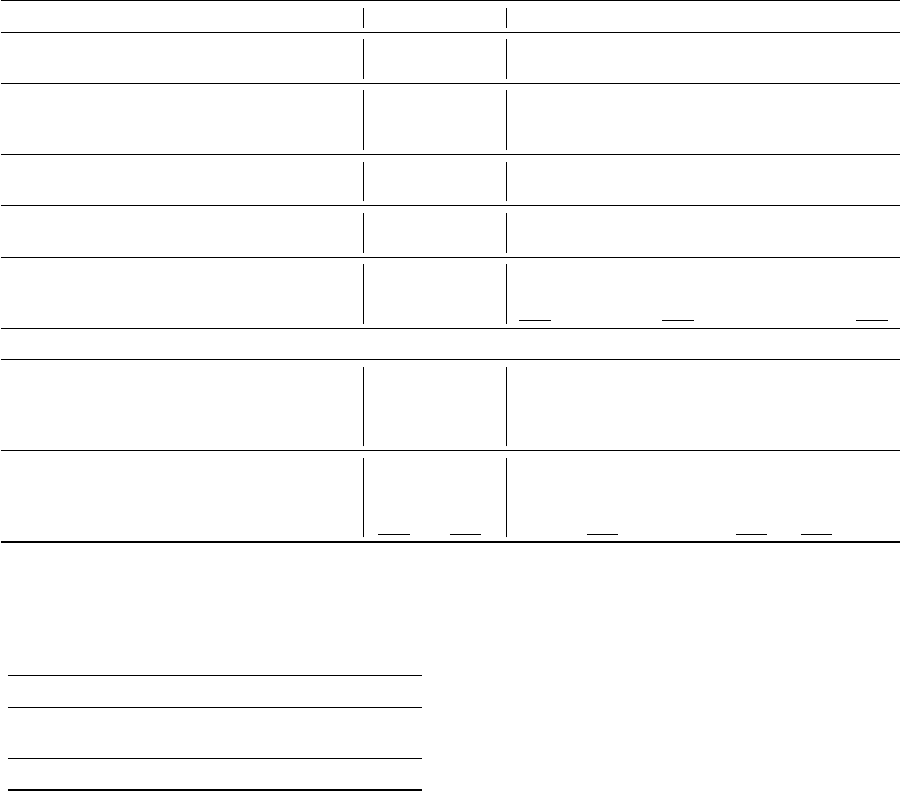

P R F1 Acc.

bert-base-uncased 0.76 0.80 0.78 0.86

bert-large-uncased 0.69 0.77 0.73 0.85

distilbert 0.76 0.79 0.77 0.86

Table 2: Micro-average results for the Definition Seman-

tic Role Labeling (DSRL) task using different versions

of BERT (Devlin et al., 2019).

tences using BERT to annotate each token with a

semantic role (i.e., supertype, differentia-quality,

etc.). After the annotation, as we aim to learn word

embeddings, we map back the tokens to the origi-

nal words and use the associated semantic roles to

construct multi-relational triples (Section 3.2).
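The step that maps token-level predictions back to words can be sketched as follows. This is a minimal illustration, assuming a first-sub-token labelling strategy (a common convention for token classification, not necessarily the one used in the paper) and a `word_ids` list such as the one returned by the `word_ids()` method of Hugging Face fast tokenizers, where `None` marks special tokens. The `"O"` tag for tokens without a role, the tokens and the labels below are invented for the example.

```python
from typing import List, Optional, Tuple


def tokens_to_words(
    words: List[str],
    word_ids: List[Optional[int]],
    token_labels: List[str],
) -> List[Tuple[str, str]]:
    """Assign each word the role predicted for its first sub-token."""
    word_labels: List[Optional[str]] = [None] * len(words)
    for word_id, label in zip(word_ids, token_labels):
        # Skip special tokens ([CLS], [SEP]) and non-initial sub-tokens.
        if word_id is None or word_labels[word_id] is not None:
            continue
        word_labels[word_id] = label
    # Words that received no prediction fall back to the hypothetical "O" tag.
    return [(word, label or "O") for word, label in zip(words, word_labels)]


# Hypothetical example: "services" is split into two sub-tokens.
words = ["a", "set", "of", "products", "or", "services"]
word_ids = [None, 0, 1, 2, 3, 4, 5, 5, None]
token_labels = ["O", "accessory-determiner", "supertype", "O",
                "differentia-quality", "O", "differentia-quality",
                "differentia-quality", "O"]
print(tokens_to_words(words, word_ids, token_labels))
```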

Overall, we found distilbert (Sanh et al., 2019) to achieve the best trade-off between efficiency and accuracy (86%), obtaining performance comparable to bert-base-uncased while containing 40% fewer parameters. Therefore, we decided to employ distilbert for subsequent experiments. While more accurate DSRLs could be built via the fine-tuning of more recent Transformers, we regard this trade-off as satisfactory for current purposes.

Table 2 reports the detailed results achieved by different versions of BERT in terms of precision, recall, F1 score, and accuracy. To train the models, we adopted a k-fold cross-validation technique with $k = 5$, fine-tuning the models for 3 epochs in total via Hugging Face³.

3.2 Multi-Relational Word Embeddings

Thanks to the semantic annotation, it is possible to leverage the relational structure of natural language definitions for training word embeddings. Specifically, we rely on the semantic roles to cast the task into a link prediction problem. Given a set of definiendum-definition pairs, we first employ the DSRL to automatically annotate the definitions, and subsequently extract a set of subject-relation-object triples of the form $(w_s, r, w_o)$, where $w_s$ represents a defined term, $r$ a semantic role, and $w_o$ a term appearing in the definition of $w_s$ with semantic role $r$. To derive the final set of triples for training, we remove the instances in which $w_o$ represents a stop-word.
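The triple-extraction step can be sketched in a few lines. The snippet below is a hypothetical illustration: the role labels assigned to each word, the `"O"` tag for unlabelled words and the deliberately tiny stop-word list are assumptions made for the example, not the paper's actual annotations or resources.

```python
from typing import Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]

# Hypothetical, deliberately tiny stop-word list for illustration.
STOP_WORDS: Set[str] = {"a", "an", "the", "of", "or", "by", "and"}


def extract_triples(
    definiendum: str,
    labelled_words: Iterable[Tuple[str, str]],
    stop_words: Set[str] = STOP_WORDS,
) -> List[Triple]:
    """Build (w_s, r, w_o) triples from a role-annotated definition.

    Words tagged "O" (no role) and stop-words are discarded; each remaining
    word becomes an object linked to the definiendum by its semantic role.
    """
    triples = []
    for word, role in labelled_words:
        w_o = word.lower()
        if role == "O" or w_o in stop_words:
            continue
        triples.append((definiendum, role, w_o))
    return triples


annotated = [("a", "accessory-determiner"), ("set", "supertype"), ("of", "O"),
             ("products", "differentia-quality"), ("or", "O"),
             ("services", "differentia-quality"), ("sold", "differentia-event"),
             ("by", "O"), ("a", "O"), ("business", "differentia-quality")]
for triple in extract_triples("line", annotated):
    print(triple)
```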

In order to train the word embeddings, the link prediction problem is formalised via a translational objective function $\phi(\cdot)$:

$$\phi(w_s, r, w_o) = -d\big(\mathbf{e}_s^{(r)}, \mathbf{e}_o^{(r)}\big)^2 + b_s + b_o = -d\big(\mathbf{R}\mathbf{e}_s, \mathbf{e}_o + \mathbf{r}\big)^2 + b_s + b_o, \tag{3}$$

where $d(\cdot)$ is a generic distance function, $\mathbf{e}_s, \mathbf{e}_o \in \mathbb{R}^d$ represent the embeddings of $w_s$ and $w_o$ respectively, and $b_s, b_o \in \mathbb{R}$ act as scalar biases for the subject and object word. On the other hand, $\mathbf{r} \in \mathbb{R}^d$ is a translation vector encoding the semantic role $r$, while $\mathbf{R} \in \mathbb{R}^{d \times d}$ is a diagonal relation matrix. Therefore, the output of the objective function $\phi(w_s, r, w_o)$ decreases monotonically with the distance between $\mathbf{e}_s^{(r)} = \mathbf{R}\mathbf{e}_s$ and $\mathbf{e}_o^{(r)} = \mathbf{e}_o + \mathbf{r}$, which represent the subject and object word embeddings after applying a relation-adjusted transformation, and can thus be read as a similarity score between them.

³ https://huggingface.co/
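For the Euclidean case, Equation 3 reduces to a few vector operations. The sketch below assumes that $d(\cdot)$ is the Euclidean distance and stores the diagonal matrix $\mathbf{R}$ as a vector of its diagonal entries; it illustrates the scoring function only and is not the authors' implementation.

```python
import numpy as np


def euclidean_score(e_s, e_o, r_vec, r_diag, b_s, b_o):
    """phi(w_s, r, w_o) = -||R e_s - (e_o + r)||^2 + b_s + b_o  (Equation 3).

    r_diag holds the diagonal of the relation matrix R, so R e_s is an
    element-wise product.
    """
    subject = r_diag * e_s      # relation-adjusted subject, R e_s
    obj = e_o + r_vec           # translated object, e_o + r
    return float(-np.sum((subject - obj) ** 2) + b_s + b_o)


e_s = np.array([1.0, 2.0])
e_o = np.array([1.0, 1.0])
r_vec = np.array([1.0, 1.0])
r_diag = np.array([2.0, 1.0])
# R e_s = [2, 2] and e_o + r = [2, 2], so the distance term vanishes
# and the score reduces to the sum of the biases.
print(euclidean_score(e_s, e_o, r_vec, r_diag, b_s=0.2, b_o=0.3))
```

Because the distance enters squared and negated, moving the object away from the translated position can only lower the score, which is what makes $\phi$ usable as a link-prediction logit.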

The choice behind the translational formulation is dictated by a set of goals and research hypotheses. First, we hypothesise that the global multi-relational structure of dictionary definitions can be optimised locally via the extracted semantic relations (i.e., by making words that are semantically connected in the definitions closer in the latent space). Second, the translational formulation allows for the joint embedding of words and semantic roles. This plays a crucial role, as it enables the explicit parametrisation of multiple relational structures within the same vector space (i.e., with each semantic role vector acting as a geometrical transformation), and it allows for the explicit use of the semantic roles after training. By preserving the embeddings of the semantic relations, in fact, we aim to make the vector space intrinsically more interpretable and controllable.

Hyperbolic Model. Following previous work on multi-relational Poincaré embeddings (Balazevic et al., 2019), we specialise the general translational objective function in Hyperbolic space:

$$\phi_{\mathbb{B}}(w_s, r, w_o) = -d_{\mathbb{B}}\big(\mathbf{h}_s^{(r)}, \mathbf{h}_o^{(r)}\big)^2 + b_s + b_o = -d_{\mathbb{B}}\big(\mathbf{R} \otimes_c \mathbf{h}_s, \mathbf{h}_o \oplus_c \mathbf{r}\big)^2 + b_s + b_o, \tag{4}$$

where $d_{\mathbb{B}}(\cdot)$ is the Poincaré distance, $\mathbf{h}_s, \mathbf{h}_o, \mathbf{r} \in \mathbb{B}^d_c$ are the hyperbolic embeddings of words and semantic roles, $\mathbf{R} \in \mathbb{R}^{d \times d}$ is a diagonal relation matrix, and $\oplus_c$ and $\otimes_c$ represent Möbius addition (Equation 2) and Möbius matrix-vector multiplication (Ganea et al., 2018), respectively:

$$\mathbf{R} \otimes_c \mathbf{h} = \exp_0^c\big(\mathbf{R}\,\log_0^c(\mathbf{h})\big), \tag{5}$$

with $\log_0^c(\cdot)$ and $\exp_0^c(\cdot)$ representing the logarithmic and exponential maps for projecting a point into the Euclidean tangent space at the origin and back to the Poincaré ball.
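Equations 4 and 5 can likewise be sketched with NumPy. The snippet below assumes a diagonal $\mathbf{R}$ stored as a vector, uses the standard closed forms of the exponential and logarithmic maps at the origin of the Poincaré ball (Ganea et al., 2018), and omits the numerical safeguards (e.g., clipping points to the inside of the ball) that a training implementation would need; the function names are ours. Note that with $\mathbf{R}$ equal to the identity, the Möbius matrix-vector product leaves a point unchanged.

```python
import numpy as np

EPS = 1e-15  # guards divisions by zero for vectors at the origin


def mobius_add(x, y, c=1.0):
    """Möbius addition x ⊕_c y (Equation 2)."""
    xy, x2, y2 = np.dot(x, y), np.dot(x, x), np.dot(y, y)
    num = (1 + 2 * c * xy + c * y2) * x + (1 - c * x2) * y
    return num / (1 + 2 * c * xy + c ** 2 * x2 * y2)


def expmap0(v, c=1.0):
    """Exponential map at the origin: tangent space -> Poincaré ball."""
    sqrt_c, norm = np.sqrt(c), max(np.linalg.norm(v), EPS)
    return np.tanh(sqrt_c * norm) * v / (sqrt_c * norm)


def logmap0(y, c=1.0):
    """Logarithmic map at the origin: Poincaré ball -> tangent space."""
    sqrt_c, norm = np.sqrt(c), max(np.linalg.norm(y), EPS)
    return np.arctanh(sqrt_c * norm) * y / (sqrt_c * norm)


def mobius_matvec_diag(r_diag, h, c=1.0):
    """R ⊗_c h = exp_0(R log_0(h)) for a diagonal R (Equation 5)."""
    return expmap0(r_diag * logmap0(h, c), c)


def poincare_distance(x, y, c=1.0):
    """Geodesic distance on the Poincaré ball (Equation 1)."""
    sqrt_c = np.sqrt(c)
    return 2 / sqrt_c * np.arctanh(sqrt_c * np.linalg.norm(mobius_add(-x, y, c)))


def hyperbolic_score(h_s, h_o, r_vec, r_diag, b_s, b_o, c=1.0):
    """phi_B(w_s, r, w_o) = -d_B(R ⊗_c h_s, h_o ⊕_c r)^2 + b_s + b_o (Equation 4)."""
    subject = mobius_matvec_diag(r_diag, h_s, c)
    obj = mobius_add(h_o, r_vec, c)
    return float(-poincare_distance(subject, obj, c) ** 2 + b_s + b_o)


h = np.array([0.3, -0.4])
identity = np.ones(2)
# With R = I and r = 0, subject and object coincide, so the score is b_s + b_o.
print(hyperbolic_score(h, h, np.zeros(2), identity, 0.1, 0.1))
```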

Training & Optimization. The multi-relational model is optimised for link prediction via the Bernoulli negative log-likelihood loss (details in Appendix A). We employ Riemannian optimisation to train the Hyperbolic embeddings, enriching the set of extracted triples with random negative sampling. We found that the best results are obtained with 50 negative examples for each positive instance. In line with previous work on Hyperbolic embeddings (Balazevic et al., 2019), we set $c = 1$. Following guidelines for the development of word embedding models (Bosc and Vincent, 2018; Faruqui et al., 2016), we perform model selection on the dev-set of word relatedness and similarity benchmarks (i.e., SimVerb (Gerz et al., 2016) and MEN (Bruni et al., 2014)).
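A minimal sketch of the training ingredients described above, assuming NumPy: (i) corrupting the object of each positive triple with uniformly sampled word ids to obtain negatives (50 per positive, as reported above), (ii) a Bernoulli negative log-likelihood over positive and negative scores, and (iii) a Riemannian SGD step on the Poincaré ball with $c = 1$, following the update rule of Nickel and Kiela (2017). Whether the paper's optimiser uses this exact update, averaging scheme and projection is an assumption, since Appendix A is not reproduced here.

```python
import numpy as np


def sample_negatives(triple, num_words, k=50, rng=None):
    """Corrupt the object of (s, r, o) with k uniformly sampled word ids."""
    rng = rng if rng is not None else np.random.default_rng(0)
    s, r, _ = triple
    objects = rng.integers(0, num_words, size=k)
    return np.stack([np.full(k, s), np.full(k, r), objects], axis=1)


def bernoulli_nll(pos_scores, neg_scores):
    """Mean of -log sigmoid(phi) on positives and -log(1 - sigmoid(phi)) on negatives."""
    pos_loss = np.logaddexp(0.0, -pos_scores)   # -log sigmoid(x), computed stably
    neg_loss = np.logaddexp(0.0, neg_scores)    # -log(1 - sigmoid(x)), computed stably
    return float(np.concatenate([pos_loss, neg_loss]).mean())


def rsgd_step(theta, euclidean_grad, lr=0.01, eps=1e-5):
    """One Riemannian SGD step on the unit Poincaré ball (c = 1)."""
    # Rescale the Euclidean gradient by the inverse of the Poincaré metric factor.
    scale = (1.0 - np.dot(theta, theta)) ** 2 / 4.0
    updated = theta - lr * scale * euclidean_grad
    # Project back inside the ball if the step left it.
    norm = np.linalg.norm(updated)
    if norm >= 1.0:
        updated = updated / norm * (1.0 - eps)
    return updated


negatives = sample_negatives((3, 1, 7), num_words=1000)
print(negatives.shape)  # (50, 3)
print(round(bernoulli_nll(np.array([0.0]), np.array([0.0])), 4))  # 0.6931
```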

4 Empirical Evaluation

4.1 Empirical Setup

To assess the quality of the word embeddings, we performed an extensive evaluation on word similarity and relatedness benchmarks in English: SimVerb (Gerz et al., 2016), MEN (Bruni et al., 2014), SimLex-999 (Hill et al., 2015), SCWS (Huang et al., 2012), WordSim-353 (Finkelstein et al., 2001) and RG-65 (Rubenstein and Goodenough, 1965), using WordNet (Fellbaum, 2010) as the main source of definitions⁴. In particular, we leverage the glosses in WordNet to extract the semantic roles via the methodology described in Section 3.1 and train the multi-relational word embeddings. While WordNet also provides a knowledge graph of linguistic relations, our goal is to test methods that are trained and evaluated exclusively on natural language definitions and that can more easily generalise to different dictionaries and definitions in a broader setting.

The multi-relational word embeddings are trained on a total of ≈400k definitions, from which we are able to extract ≈2 million triples. In order to compare Euclidean and Hyperbolic spaces, we train two different versions of the model by specialising the objective function accordingly (Equations 3 and 4). We experiment with varying dimensions for both Euclidean and Hyperbolic embeddings (i.e., 40, 80, 200, and 300), training the models for a total of 300 iterations. In line with previous work (Bosc and Vincent, 2018; Faruqui et al., 2016), we evaluate the models on downstream benchmarks by comparing the predicted similarity between each pair of words to the ground truth via Spearman’s correlation coefficient.

⁴ https://github.com/tombosc/cpae/blob/master/data/dict_wn.json

| Model | Dim | FT | PT | SV-d | MEN-d | SV-t | MEN-t | SL999 | SCWS | 353 | RG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Glove | 300 | yes | no | 12.0 | 54.8 | 7.8 | 57.0 | 19.8 | 46.8 | 44.4 | 57.5 |
| Word2Vec | 300 | yes | no | 35.2 | 62.3 | 36.4 | 59.9 | 34.5 | 54.5 | 61.9 | 65.7 |
| AE | 300 | yes | no | 34.9 | 42.7 | 32.5 | 42.2 | 35.6 | 50.2 | 41.4 | 64.8 |
| CPAE | 300 | yes | no | 42.8 | 48.5 | 34.8 | 49.2 | 39.5 | 54.3 | 48.7 | 67.1 |
| CPAE-P | 300 | yes | yes | 44.1 | 65.1 | 42.3 | 63.8 | 45.8 | 60.4 | 61.3 | 72.0 |
| bert-base | 768 | no | yes | 13.5 | 27.8 | 13.3 | 30.6 | 15.1 | 37.8 | 20.0 | 68.1 |
| bert-large | 1024 | no | yes | 16.1 | 23.4 | 14.4 | 26.8 | 13.4 | 35.7 | 19.8 | 60.7 |
| defsent-bert | 768 | yes | yes | 40.0 | 60.2 | 40.0 | 60.0 | 42.0 | 56.8 | 46.6 | 82.4 |
| defsent-roberta | 768 | yes | yes | 43.0 | 55.0 | 44.0 | 52.6 | 47.7 | 54.3 | 44.9 | 80.6 |
| distilroberta-v1 | 768 | no | yes | 35.8 | 61.2 | 36.7 | 62.2 | 43.4 | 57.1 | 52.0 | 77.4 |
| mpnet-base-v2 | 768 | no | yes | 45.9 | 64.9 | 42.5 | 67.5 | 49.5 | 58.6 | 56.5 | 81.3 |
| sentence-t5-large | 768 | no | yes | 49.4 | 63.1 | 50.2 | 66.3 | 57.3 | 56.1 | 51.8 | 85.3 |
| *Multi-Relational* | | | | | | | | | | | |
| Euclidean | 40 | yes | no | 39.1 | 62.9 | 35.7 | 65.4 | 36.3 | 58.2 | 52.1 | 80.9 |
| Euclidean | 80 | yes | no | 44.1 | 65.6 | 39.5 | 66.2 | 41.2 | 58.4 | 55.8 | 78.0 |
| Euclidean | 200 | yes | no | 47.3 | 67.0 | 41.0 | 67.6 | 43.4 | 60.6 | 55.4 | 78.1 |
| Euclidean | 300 | yes | no | 47.9 | 68.3 | 43.1 | 69.1 | 44.7 | 61.0 | 54.4 | 79.0 |
| Hyperbolic | 40 | yes | no | 36.7 | 66.2 | 34.3 | 66.4 | 31.8 | 57.7 | 49.9 | 75.5 |
| Hyperbolic | 80 | yes | no | 42.7 | 68.2 | 40.7 | 68.6 | 38.3 | 60.5 | 57.3 | 81.0 |
| Hyperbolic | 200 | yes | no | 48.8 | 71.9 | 44.7 | 73.2 | 40.7 | 62.5 | 62.5 | 81.6 |
| Hyperbolic | 300 | yes | no | 50.6 | 72.6 | 45.4 | 74.2 | 42.3 | 63.0 | 63.3 | 80.5 |

Table 3: Results on word similarity and relatedness benchmarks (Spearman’s correlation). The column FT indicates whether the model is explicitly fine-tuned on natural language definitions, while PT indicates the adoption of a pre-training phase on external corpora. SV-d/MEN-d and SV-t/MEN-t refer to the dev and test splits of SimVerb and MEN; SL999 = SimLex-999, 353 = WordSim-353, RG = RG-65.

| Model | SV | MEN | SL999 | 353 | RG |
|---|---|---|---|---|---|
| Glove | 18.9 | - | 32.1 | 62.1 | 75.8 |
| Word2Vec | - | 72.2 | 28.3 | 68.4 | - |
| Ours | 45.4 | 74.2 | 42.3 | 63.3 | 80.5 |

Table 4: Comparison with Hyperbolic word embeddings in the literature. The results for Glove and Word2Vec are taken from (Tifrea et al., 2018) and (Leimeister and Wilson, 2018), considering their best model.
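The evaluation protocol compares model similarities with human judgements via Spearman's rank correlation. The pure-Python sketch below computes it as the Pearson correlation of average ranks, so tied scores are handled. How the model similarity is obtained (e.g., cosine similarity for Euclidean vectors or negative Poincaré distance for Hyperbolic ones) is an assumption not specified in this section, and the scores below are invented.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values receiving the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(predicted: Sequence[float], gold: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation between average ranks."""
    rx, ry = average_ranks(predicted), average_ranks(gold)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)


# Hypothetical model similarities and gold scores for four word pairs.
model_sim = [0.9, 0.2, 0.6, 0.4]
gold_scores = [9.5, 1.0, 5.0, 6.0]
print(round(spearman(model_sim, gold_scores), 2))  # 0.8
```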

4.2 Baselines

We evaluate a range of word embedding models on the same set of definitions (Bosc and Vincent, 2018). Specifically, we compare the proposed multi-relational embeddings against different paradigms adopted in previous work and state-of-the-art approaches. Here, we provide a characterisation of the models adopted for evaluation:

Distributional. We compare the multi-relational approach against distributional word embeddings (Mikolov et al., 2013; Pennington et al., 2014). Both Glove and Word2Vec have the same dimensionality as the multi-relational approach but are not designed to leverage or preserve explicit semantic relations during training.

Autoencoders. This paradigm employs encoder-decoder architectures to learn word representations from natural language definitions. In particular, we compare our approach to an autoencoder-based model specialised for natural language definitions known as CPAE (Bosc and Vincent, 2018), which adopts LSTMs paired with a consistency penalty. Differently from our approach, CPAE requires initialisation with pre-trained word vectors to achieve the best results (i.e., CPAE-P).

Sentence-Transformers. Finally, we compare our model against Sentence-Transformers (Reimers and Gurevych, 2019). Here, we use Sentence-Transformers to derive embeddings for the target definienda via the encoding of the corresponding definition sentences in the corpus. As the main function of definitions is to describe the meaning of words, semantically similar words tend to possess similar definitions; therefore, we expect Sentence-Transformers to organise the latent space in a semantically coherent manner when using definition sentences as a proxy for the word embeddings. We experiment with a diverse set of models ranging from BERT (Devlin et al., 2019) to the current state-of-the-art on semantic similarity benchmarks⁵ (Ni et al., 2022; Song et al., 2020; Liu et al., 2019) and models trained directly on definition sentences (e.g., Defsent (Tsukagoshi et al., 2021)). While the evaluated Transformers do not require fine-tuning on the word similarity benchmarks, they are employed after being extensively pre-trained on large corpora and specialised in sentence-level semantic tasks. Moreover, the overall size of the resulting embeddings is significantly larger than that of the proposed multi-relational approach.

4.3 Word Embeddings Benchmarks

In this section, we discuss and analyse the quantitative results obtained on the word similarity and relatedness benchmarks (see Table 3).

Firstly, an internal comparison between Euclidean and Hyperbolic embeddings supports the central hypothesis that Hyperbolic manifolds are particularly suitable for encoding the recursive and hierarchical structure of definitions. The quantitative analysis shows that, as the dimensionality of the embeddings increases, the Hyperbolic model achieves the best performance on the majority of the benchmarks: at 200 and 300 dimensions, for instance, it outperforms its Euclidean counterpart on every benchmark except SL999.

When compared to the distributional baselines, the multi-relational Hyperbolic embeddings clearly outperform both Glove and Word2Vec trained on the same set of definitions. Similar results can be observed when considering the autoencoder paradigm (apart from CPAE-P on SL999). Since the size of the embeddings produced by the models is comparable (i.e., 300 dimensions), we attribute the observed results to the encoded semantic relations, which might play a crucial role in imposing structural constraints during training.

Finally, the multi-relational model produces embeddings that are competitive with state-of-the-art Transformers. While the Hyperbolic approach clearly outperforms BERT on all the downstream tasks, we observe that Sentence-Transformers become increasingly competitive when considering larger models that are fine-tuned on semantic similarity tasks and definitions (e.g., sentence-t5-large (Ni et al., 2022) and defsent (Tsukagoshi et al., 2021)). However, it is important to note that the multi-relational embeddings not only require a small fraction of the Transformers’ computational cost (e.g., T5-large (Raffel et al., 2020) is pre-trained on the C4 corpus (≈750GB), while the multi-relational embeddings are only trained on WordNet glosses (≈19MB), a difference of more than four orders of magnitude) but are also intrinsically more interpretable thanks to the explicit encoding of the semantic relations (see Sections 4.5 and 5).

⁵ https://www.sbert.net/docs/pretrained_models.html
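The size gap quoted above can be checked with a quick back-of-the-envelope calculation, assuming decimal units (1 GB = 10^9 bytes, 1 MB = 10^6 bytes):

$$\frac{750\ \text{GB}}{19\ \text{MB}} = \frac{750 \times 10^{9}}{19 \times 10^{6}} \approx 3.9 \times 10^{4}, \qquad \log_{10}\!\left(3.9 \times 10^{4}\right) \approx 4.6,$$

i.e., a ratio of roughly $10^{4.6}$ between the two training corpora.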

4.4 Hyperbolic Word Embeddings

In addition to the previous analysis, we performed a comparison with existing Hyperbolic word embeddings in the literature (Table 4). In particular, we compare the proposed multi-relational model with Poincaré GloVe (Tifrea et al., 2018) and Hyperbolic Word2Vec (Leimeister and Wilson, 2018). The results show that our approach outperforms both models on the majority of the benchmarks, highlighting the impact of the multi-relational approach and definitional model on the quality of the representation.

4.5 Multi-Relational Representation

To contrast the capacity of different geometric spaces to learn multi-relational representations, we design an additional experiment that tests the ability to encode out-of-vocabulary definienda (i.e., words never seen during training). In particular, we aim to quantitatively measure the precision in encoding the semantic relations by approximating new word embeddings in one shot, and use it as a proxy for assessing the structural organisation of Euclidean and Hyperbolic spaces. Our hypothesis is that a vector space organised according to the multi-relational structure induced by the definitions should allow for a more precise approximation of out-of-vocabulary word embeddings via relation-specific transformations.

In order to perform this experiment, we adopt

the dev-set of SimVerb (Gerz et al., 2016) and MEN (Bruni et al., 2014), removing from our training set all the triples that contain a subject or an object word occurring in the benchmarks. We then use the pruned training set to re-train the models. After training, we derive the embeddings of the out-of-vocabulary words via geometric transformations applied to the in-vocabulary words. Specifically, given a target word (e.g., "dog") and its definition (e.g., "a domesticated carnivorous mammal that typically has a long snout"), we jointly use the in-vocabulary definiens and their semantic relations (e.g., ["carnivorous", "supertype"], ["snout", "differentia-quality"]) to approximate a new embedding e(·) for the definiendum via mean pooling and translation (i.e., e("dog") = mean(e("carnivorous"), e("snout")) + mean(e("supertype"), e("differentia-quality"))). We compare this approximation against a mean pooling baseline that does not have access to the semantic relations (i.e., e("dog") = mean(e("carnivorous"), e("snout"))).

The results reported in Table 5 demonstrate the impact of the multi-relational framework: adding the relation embeddings improves over mean pooling in every configuration (by 6.1 to 9.8 points on SimVerb and by 11.1 to 14.6 points on MEN), and the multi-relational Hyperbolic embeddings outperform their Euclidean counterparts at every dimension. This confirms that the Hyperbolic embeddings better encode the global semantic structure of natural language definitions.

                  Mean-Pooling    Multi-Relational              Differentia Quality    Supertype
Model       Dim   SV      MEN     SV            MEN             SV      MEN            SV      MEN
Euclidean   40    17.6    20.6    23.7 (+6.1)   31.7 (+11.1)    22.6    26.0           17.2    19.2
Euclidean   80    15.9    18.1    24.6 (+8.7)   29.4 (+11.3)    23.4    23.3           18.4    18.8
Euclidean   200   14.5    18.4    23.7 (+9.2)   30.7 (+12.3)    24.1    22.2           18.7    19.1
Euclidean   300   15.1    18.8    24.3 (+9.2)   30.3 (+11.5)    23.8    22.7           19.3    20.3
Hyperbolic  40    15.9    22.8    25.4 (+9.5)   35.2 (+12.4)    22.7    25.5           14.0    20.2
Hyperbolic  80    17.9    25.1    27.7 (+9.8)   37.8 (+12.7)    25.9    26.6           15.4    20.1
Hyperbolic  200   19.2    24.9    28.4 (+9.2)   38.2 (+13.3)    27.9    25.5           17.3    21.3
Hyperbolic  300   19.6    25.1    28.6 (+9.0)   39.7 (+14.6)    28.5    26.0           18.1    20.4

Table 5: Results on the one-shot approximation of out-of-vocabulary word embeddings. The numbers in the table represent the Spearman correlation computed over the out-of-vocabulary set after the approximation (SV = SimVerb). (Left) Impact of the multi-relational embeddings on the one-shot encoding of out-of-vocabulary words. (Right) Ablations using the two most common semantic roles for one-shot approximation. The results demonstrate the superior capacity of the multi-relational Hyperbolic embeddings to capture the global semantic structure of definitions.
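The pruning step and the one-shot approximation described above can be illustrated with a short Python sketch. It is a minimal illustration under stated assumptions, not the authors' implementation: it works in Euclidean space (plain vector addition), stores embeddings in ordinary dictionaries, averages one role vector per in-vocabulary definiens, and uses hypothetical helper names (`prune_triples`, `mean_pooling`, `multi_relational`) and toy two-dimensional vectors. A Hyperbolic variant would presumably need Möbius operations in place of vector addition.

```python
from statistics import fmean


def prune_triples(triples, benchmark_words):
    """Drop every (subject, relation, object) triple whose subject or object
    occurs in the evaluation benchmarks, making those words out-of-vocabulary."""
    return [
        (s, r, o)
        for (s, r, o) in triples
        if s not in benchmark_words and o not in benchmark_words
    ]


def mean_vec(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    return [fmean(component) for component in zip(*vectors)]


def mean_pooling(definiens, emb):
    """Baseline: average the embeddings of the in-vocabulary definiens only."""
    return mean_vec([emb[w] for w in definiens if w in emb])


def multi_relational(definiens_roles, emb, rel):
    """Average the in-vocabulary definiens, then translate the result by the
    average of their role embeddings (assumption: one role per definiens)."""
    pairs = [(w, role) for (w, role) in definiens_roles if w in emb]
    words = mean_vec([emb[w] for w, _ in pairs])
    roles = mean_vec([rel[role] for _, role in pairs])
    return [a + b for a, b in zip(words, roles)]


# Hypothetical toy embeddings for the "dog" example.
emb = {"carnivorous": [1.0, 0.0], "snout": [0.0, 1.0]}
rel = {"supertype": [0.5, 0.5], "differentia-quality": [-0.5, 0.5]}
definition = [("carnivorous", "supertype"), ("snout", "differentia-quality")]

baseline = mean_pooling([w for w, _ in definition], emb)  # [0.5, 0.5]
approx = multi_relational(definition, emb, rel)           # [0.5, 1.0]
```

The only difference between the two estimates is the translation term: the baseline places "dog" at the centroid of its definiens, while the multi-relational estimate shifts that centroid by the averaged role vectors.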

5 Qualitative Analysis

In addition to the quantitative evaluation, we perform a qualitative analysis of the embeddings in two different ways: traversal of the latent space and relation-adjusted transformations.

5.1 Latent Space Traversal

We perform traversal experiments to visualise the organisation of the latent space. This is done by sampling points at fixed intervals along the arc (i.e., geodesic) connecting the embeddings of a pair of predefined words (seeds), i.e., by interpolating along the shortest path between the two embeddings. The word pairs were chosen from a group of semantic categories for which intermediate concepts can be understood to lie semantically in between the pair, for example (car, bicycle) → motorcycle. Given the latent space structure that should result from the proposed approach, we expect the traversal process to capture such intermediate concepts, while the concepts become more general towards the midpoint of the arc. In fact, in a latent space organised according to the semantic structure and concept hierarchy of definitions, we expect the midpoint to be close to concepts relating to both seed words.

The categories, sampled words and results for the midpoint of the arcs can be found in Table 6 (top). The traversal analysis shows that the intermediate concepts are indeed captured for all the categories, with a noticeable degree of generalisation in the Hyperbolic models. This indicates that the navigation of the latent space is consistently interpretable, and enables more robust semantic control, in which the desired embedded concept is set in terms of a symbolic conjunction of its vicinity. We can also observe that the space between the pair of embeddings is populated mostly by concepts related to either one of the entities of the pair in the Euclidean models, while it is populated by concepts relating to both entities in the Hyperbolic models.
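The traversal can be illustrated with the closed-form geodesic of the Poincaré ball, $\gamma(t) = x \oplus (t \otimes ((-x) \oplus y))$, built from the Möbius operations described by Ganea et al. (2018). The sketch below is an illustration under assumptions rather than the authors' code: it fixes the curvature to c = 1, represents embeddings as plain Python lists, and uses hypothetical helper names and toy 2-d vectors. In the Euclidean models the same procedure reduces to linear interpolation between the two seeds.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def norm(x):
    return math.sqrt(dot(x, x))


def mobius_add(x, y):
    """Möbius addition x ⊕ y in the Poincaré ball with curvature c = 1."""
    xy, x2, y2 = dot(x, y), dot(x, x), dot(y, y)
    den = 1 + 2 * xy + x2 * y2
    return [((1 + 2 * xy + y2) * a + (1 - x2) * b) / den for a, b in zip(x, y)]


def mobius_scale(t, x):
    """Möbius scalar multiplication t ⊗ x."""
    n = norm(x)
    if n == 0:
        return [0.0] * len(x)
    factor = math.tanh(t * math.atanh(n)) / n
    return [factor * a for a in x]


def poincare_dist(x, y):
    """Hyperbolic distance d_B(x, y) = 2 artanh(||(-x) ⊕ y||)."""
    return 2 * math.atanh(norm(mobius_add([-a for a in x], y)))


def geodesic_point(x, y, t):
    """Point at fraction t of the geodesic from x (t = 0) to y (t = 1)."""
    return mobius_add(x, mobius_scale(t, mobius_add([-a for a in x], y)))


def traverse(x, y, steps):
    """Sample steps + 1 points at fixed intervals along the arc."""
    return [geodesic_point(x, y, k / steps) for k in range(steps + 1)]


def nearest(point, vocab, k):
    """The k words whose embeddings are closest to point in hyperbolic distance."""
    return sorted(vocab, key=lambda w: poincare_dist(point, vocab[w]))[:k]


# Hypothetical toy embeddings (all strictly inside the unit ball).
vocab = {
    "car": [0.6, 0.1],
    "bicycle": [0.1, 0.6],
    "motorcycle": [0.3, 0.3],
    "star": [-0.7, -0.2],
}
midpoint = geodesic_point(vocab["car"], vocab["bicycle"], 0.5)
print(nearest(midpoint, vocab, 1))  # ['motorcycle']
```

Because the geodesic bends towards the origin of the ball, the hyperbolic midpoint of the two seeds lies closer to the origin than their Euclidean average, which is one way to read the stronger generalisation observed at the midpoint of the Hyperbolic arcs.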

5.2 Relation-Adjusted Transformations

We analyse the organisation of the latent space before and after the application of a translational operation. As discussed in Section 2.1, such an operation should transform the embedding space according to the corresponding semantic role. For example, the operation $\phi_{\mathbb{B}}(\text{dog}, \text{supertype}, w_o)$ should cluster the space around the taxonomical branch related to “dog”. It is important to note that this operation does not correspond to link prediction, as we do not consider the scalar biases $b_s, b_o$. The goal here is to disentangle the impact of the semantic transformations on the latent space. We consider the supertype role for this analysis as it induces a global hierarchical structure that is easily inspectable.

The results can be found in Table 6 (bottom). We observe that the transformation leads to a projection locus near the terms whose definitions are closely tied to the seed word (e.g., the types of dogs or stars), abstracting the subject words in terms of their conceptual extension (things that are dogs / stars). This displays a particular form of generalisation that is likely related to the arrangement of the roles and how they connect the concepts.

Category | Word Pair
  Euclidean: midpoint nearest neighbours
  Hyperbolic: midpoint nearest neighbours

Concrete concepts | car - bicycle
  Euclidean: bicycle, car, pedal_driven, motorcycle, banked, multiplying, swiveling, four_wheel, rented, no_parking
  Hyperbolic: railcar, bicycle, car, pedal_driven, driving_axle, motorized_wheelchair, tricycle, bike, banked, live_axle

Gender, Role | man - woman
  Euclidean: woman, man, procreation, men, non_jewish, three_cornered, middle_aged, bodice, boskop, soloensis
  Hyperbolic: adulterer, boyfriend, ex-boyfriend, adult_female, manful, cuckold, virile, stateswoman, womanlike, wardress

Animal Hybrids | horse - donkey
  Euclidean: donkey, horse, burro, hock_joint, neighing, dog_sized, tapirs, feathered_legged, racehorse, gasterophilidae
  Hyperbolic: burro, cow_pony, unbridle, hackney, unbridled, equitation, sidesaddle, palfrey, roughrider, trotter

Process, Time | birth - death
  Euclidean: death, birth, lifetime, childless, childhood, adityas, parturition, condemned, carta, liveborn
  Hyperbolic: lifespan, life-time, firstborn, multiparous, full_term, teens, nonpregnant, childless, widowhood, gestational

Location | sea - land
  Euclidean: land, sea, enderby, weddell, arafura, littoral, tyrrhenian, andaman, maud, toads
  Hyperbolic: tellurian, litoral, seabed, high_sea, body_of_water, littoral_zone, international_waters, benthic_division, naval_forces, lake_michigan

w_s
  No Transformation: $-d_{\mathbb{B}}(h_s, h_o)^2$
  Relation-Adjusted (r = supertype): $-d_{\mathbb{B}}(R \otimes h_s, h_o \oplus r)^2$

dog
  No Transformation: dog, heavy_coated, smooth_coated, malamute, canidae, wolves, light_footed, long-established, whippet, greyhound
  Relation-Adjusted: huntsman, hunting_dog, sledge_dog, coondog, sled_dog, working_dog, russian_wolfhound, guard_dog, tibetan_mastiff, housedog

car
  No Transformation: car, railcar, telpherage, telferage, subcompact, cable_car, car_transporter, re_start, auto, railroad_car, driving_axle
  Relation-Adjusted: railcar, marksman, subcompact, smoking_carriage, handcar, electric_automobile, limousine, taxicab, freight_car, slip_coach

star
  No Transformation: star, armillary_sphere, charles’s_wain, starlight, altair, drummer, northern_cross, photosphere, sterope, rigel
  Relation-Adjusted: rigel, betelgeuse, film_star, movie_star, television_star, tv_star, starlight, supergiant, photosphere, starlet

king
  No Transformation: louis_i, sultan, sir_gawain, uriah, camelot, dethrone, poitiers, excalibur, empress, divorcee
  Relation-Adjusted: chessman, gustavus_vi, grandchild, alfred_the_great, jr, rajah, knights, louis_the_far, egbert, plantagenet, st._olav

Table 6: (Top) qualitative results for the latent space traversal, with midpoint nearest neighbours listed in descending order of similarity. (Bottom) nearest neighbours of seed words before and after applying a supertype-adjusted transformation.
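The two rankings compared in Table 6 (bottom) can be sketched as follows. The sketch assumes, in the style of MuRP (Balazevic et al., 2019), curvature c = 1, a diagonal relation matrix R applied through Möbius matrix-vector multiplication $R \otimes h = \exp_0(R \log_0(h))$, and a relation vector r applied with Möbius addition; these choices, the helper names and the toy vectors are illustrative assumptions, not the authors' code. As in the analysis above, the biases $b_s, b_o$ are left out, so the score is not a link-prediction score.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def norm(x):
    return math.sqrt(dot(x, x))


def mobius_add(x, y):
    """Möbius addition x ⊕ y in the Poincaré ball (c = 1)."""
    xy, x2, y2 = dot(x, y), dot(x, x), dot(y, y)
    den = 1 + 2 * xy + x2 * y2
    return [((1 + 2 * xy + y2) * a + (1 - x2) * b) / den for a, b in zip(x, y)]


def log0(x):
    """Logarithmic map at the origin: ball -> tangent space."""
    n = norm(x)
    return [0.0] * len(x) if n == 0 else [math.atanh(n) / n * a for a in x]


def exp0(v):
    """Exponential map at the origin: tangent space -> ball."""
    n = norm(v)
    return [0.0] * len(v) if n == 0 else [math.tanh(n) / n * a for a in v]


def mobius_diag_matvec(R, x):
    """R ⊗ x = exp0(R log0(x)), with R a diagonal matrix given by its diagonal."""
    return exp0([r * a for r, a in zip(R, log0(x))])


def poincare_dist(x, y):
    return 2 * math.atanh(norm(mobius_add([-a for a in x], y)))


def score(h_s, h_o, R=None, r=None):
    """-d_B(h_s, h_o)^2, or -d_B(R ⊗ h_s, h_o ⊕ r)^2 when a relation is given.
    The biases b_s and b_o are deliberately omitted."""
    if R is not None:
        h_s, h_o = mobius_diag_matvec(R, h_s), mobius_add(h_o, r)
    return -poincare_dist(h_s, h_o) ** 2


def neighbours(seed, vocab, k, R=None, r=None):
    """Rank candidate objects w_o for the seed w_s by decreasing score."""
    h_s = vocab[seed]
    return sorted(vocab, key=lambda w: -score(h_s, vocab[w], R, r))[:k]


# Hypothetical toy embeddings and supertype parameters.
vocab = {"dog": [0.1, 0.0], "wolf": [0.15, 0.0], "hunting_dog": [0.1, -0.3]}
R_super, r_super = [0.9, 1.1], [0.0, 0.3]

print(neighbours("dog", vocab, 2))                    # ['dog', 'wolf']
print(neighbours("dog", vocab, 1, R_super, r_super))  # ['hunting_dog']
```

Without the transformation the seed is its own nearest neighbour, mirroring the first entries of the "No Transformation" lists; after the supertype adjustment, the toy ranking favours a word that would have "dog" as its supertype.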

6 Related Work

Given the characteristics of natural language definitions discussed here, efforts to leverage dictionary definitions for distributional models were proposed as a more efficient alternative to large unlabelled corpora, following the rising popularity of the latter (Tsukagoshi et al., 2021; Hill et al., 2016; Tissier et al., 2017; Bosc and Vincent, 2018). At the same time, efforts to improve the compositionality (Chen et al., 2015; Scheepers et al., 2018) and interpretability (de Carvalho and Le Nguyen, 2017; Silva et al., 2019) of word representations led to different approaches for incorporating definition resources into language modelling, with definition modelling becoming an established task (Noraset et al., 2017).

More recently, the research focus has shifted towards fine-tuning large language models and contextual embeddings for definition generation and classification (Gadetsky et al., 2018; Bosc and Vincent, 2018; Loureiro and Jorge, 2019; Mickus et al., 2022), while the structural properties of definitions have also been gaining attention (Shu et al., 2020; Wang and Zaki, 2022).

Finally, research on Hyperbolic representation spaces has provided evidence that they capture hierarchical linguistic features better than traditional (Euclidean) ones (Balazevic et al., 2019; Nickel and Kiela, 2017; Tifrea et al., 2018; Zhao et al., 2020). This work builds upon the aforementioned developments and proposes a novel approach to incorporating structural information extracted from natural language definitions, by means of a translational objective guided by explicit semantic roles (Silva et al., 2016), combined with a Hyperbolic representation able to embed multi-relational structures.

7 Conclusion

This paper explored the semantic structure of definitions as a means to support novel learning paradigms able to preserve semantic interpretability and control. We proposed a multi-relational framework that can explicitly map terms and their corresponding semantic relations into a vector space. By automatically extracting the relations from external dictionaries and specialising the framework in Hyperbolic space, we demonstrated that it is possible to capture the hierarchical and multi-relational structure induced by dictionary definitions while preserving, at the same time, the explicit mapping required for controllable semantic navigation.

8 Limitations

While the study presented here supports its findings with all the evidence compiled to the best of our knowledge, there are factors that limit the scope of the current state of the work, of which we consider the following to be the most important:

1. The automatic semantic role labeling process is not 100% accurate, and is thus a limiting factor in analysing the impact of this information on the models. While we do not explore DSRLs with varying accuracy, future work can explicitly investigate the impact of the automatic annotation on the robustness of the multi-relational embeddings.

2. The embeddings obtained in this work are contextualisable (by means of a relation-adjusted transformation), but are not contextualised, i.e., they do not depend on the surrounding text. Therefore, they are not comparable on tasks that depend on contextualised embeddings.

3. The current version of the embeddings coalesces all senses of a definiendum into a single representation. This is a general limitation of models learning embeddings from dictionaries. Addressing this limitation is possible in future work, but it will require the non-trivial ability to disambiguate the terms appearing in the definitions (i.e., definiens).

4. The multi-relational embeddings presented in the paper were initialised from scratch in order to test their efficiency in capturing the semantic structure of dictionary definitions. Therefore, it remains an open question whether initialising the models with pre-trained distributional embeddings such as Word2Vec and GloVe would be beneficial.

Acknowledgements

This work was partially funded by the Swiss National Science Foundation (SNSF) project NeuMath (200021_204617), by the EPSRC grant EP/T026995/1 entitled “EnnCore: End-to-End Conceptual Guarding of Neural Architectures” under Security for all in an AI enabled society, by the CRUK National Biomarker Centre, and supported by the Manchester Experimental Cancer Medicine Centre and the NIHR Manchester Biomedical Research Centre.

References

Ivana Balazevic, Carl Allen, and Timothy Hospedales. 2019. Multi-relational Poincaré graph embeddings. Advances in Neural Information Processing Systems, 32.

Antoine Bordes, Nicolas Usunier, Alberto Garcia-Duran, Jason Weston, and Oksana Yakhnenko. 2013. Translating embeddings for modeling multi-relational data. Advances in Neural Information Processing Systems, 26.

Tom Bosc and Pascal Vincent. 2018. Auto-encoding dictionary definitions into consistent word embeddings. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 1522–1532.

Elia Bruni, Nam-Khanh Tran, and Marco Baroni. 2014. Multimodal distributional semantics. Journal of Artificial Intelligence Research, 49:1–47.

Danilo S Carvalho, Giangiacomo Mercatali, Yingji Zhang, and André Freitas. 2023. Learning disentangled representations for natural language definitions. Findings of the European Chapter of the Association for Computational Linguistics (Findings of EACL).

Tao Chen, Ruifeng Xu, Yulan He, and Xuan Wang. 2015. Improving distributed representation of word sense via WordNet gloss composition and context clustering. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 2: Short Papers), pages 15–20.

Danilo Silva de Carvalho and Minh Le Nguyen. 2017. Building lexical vector representations from concept definitions. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers, pages 905–915.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186.

Manaal Faruqui, Yulia Tsvetkov, Pushpendre Rastogi, and Chris Dyer. 2016. Problems with evaluation of word embeddings using word similarity tasks. In Proceedings of the 1st Workshop on Evaluating Vector-Space Representations for NLP, pages 30–35.

Christiane Fellbaum. 2010. WordNet. In Theory and Applications of Ontology: Computer Applications, pages 231–243. Springer.

Jun Feng, Minlie Huang, Mingdong Wang, Mantong Zhou, Yu Hao, and Xiaoyan Zhu. 2016. Knowledge graph embedding by flexible translation. In Fifteenth International Conference on the Principles of Knowledge Representation and Reasoning.

Lev Finkelstein, Evgeniy Gabrilovich, Yossi Matias, Ehud Rivlin, Zach Solan, Gadi Wolfman, and Eytan Ruppin. 2001. Placing search in context: The concept revisited. In Proceedings of the 10th International Conference on World Wide Web, pages 406–414.

Artyom Gadetsky, Ilya Yakubovskiy, and Dmitry Vetrov. 2018. Conditional generators of words definitions. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 266–271.

Octavian Ganea, Gary Bécigneul, and Thomas Hofmann. 2018. Hyperbolic neural networks. Advances in Neural Information Processing Systems, 31.

Daniela Gerz, Ivan Vulić, Felix Hill, Roi Reichart, and Anna Korhonen. 2016. SimVerb-3500: A large-scale evaluation set of verb similarity. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 2173–2182.

Felix Hill, Kyunghyun Cho, Anna Korhonen, and Yoshua Bengio. 2016. Learning to understand phrases by embedding the dictionary. Transactions of the Association for Computational Linguistics, 4:17–30.

Felix Hill, Roi Reichart, and Anna Korhonen. 2015. SimLex-999: Evaluating semantic models with (genuine) similarity estimation. Computational Linguistics, 41(4):665–695.

Eric H Huang, Richard Socher, Christopher D Manning, and Andrew Y Ng. 2012. Improving word representations via global context and multiple word prototypes. In Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 873–882.

Matthias Leimeister and Benjamin J Wilson. 2018. Skip-gram word embeddings in hyperbolic space. arXiv preprint arXiv:1809.01498.

Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. RoBERTa: A robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692.

Daniel Loureiro and Alipio Jorge. 2019. Language modelling makes sense: Propagating representations through WordNet for full-coverage word sense disambiguation. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 5682–5691.

Timothee Mickus, Kees van Deemter, Mathieu Constant, and Denis Paperno. 2022. SemEval-2022 Task 1: CODWOE – comparing dictionaries and word embeddings. arXiv preprint arXiv:2205.13858.

Tomas Mikolov, Kai Chen, Greg Corrado, and Jeffrey Dean. 2013. Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781.

George A Miller. 1995. WordNet: a lexical database for English. Communications of the ACM, 38(11):39–41.

Jianmo Ni, Gustavo Hernandez Abrego, Noah Constant, Ji Ma, Keith Hall, Daniel Cer, and Yinfei Yang. 2022. Sentence-T5: Scalable sentence encoders from pre-trained text-to-text models. In Findings of the Association for Computational Linguistics: ACL 2022, pages 1864–1874.

Maximillian Nickel and Douwe Kiela. 2017. Poincaré embeddings for learning hierarchical representations. Advances in Neural Information Processing Systems, 30.

Thanapon Noraset, Chen Liang, Larry Birnbaum, and Doug Downey. 2017. Definition modeling: Learning to define word embeddings in natural language. In Thirty-First AAAI Conference on Artificial Intelligence.

Jeffrey Pennington, Richard Socher, and Christopher D Manning. 2014. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1532–1543.

Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J Liu. 2020. Exploring the limits of transfer learning with a unified text-to-text transformer. Journal of Machine Learning Research, 21:1–67.

Nils Reimers and Iryna Gurevych. 2019. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 3982–3992.

Herbert Rubenstein and John B Goodenough. 1965. Contextual correlates of synonymy. Communications of the ACM, 8(10):627–633.

Victor Sanh, Lysandre Debut, Julien Chaumond, and Thomas Wolf. 2019. DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter. arXiv preprint arXiv:1910.01108.

Thijs Scheepers, Evangelos Kanoulas, and Efstratios Gavves. 2018. Improving word embedding compositionality using lexicographic definitions. In Proceedings of the 2018 World Wide Web Conference, pages 1083–1093.

Xiaobo Shu, Bowen Yu, Zhenyu Zhang, and Tingwen Liu. 2020. Drg2vec: Learning word representations from definition relational graph. In 2020 International Joint Conference on Neural Networks (IJCNN), pages 1–9. IEEE.

Vivian Silva, André Freitas, and Siegfried Handschuh. 2018a. Building a knowledge graph from natural language definitions for interpretable text entailment recognition. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018).

Vivian Silva, Siegfried Handschuh, and André Freitas. 2016. Categorization of semantic roles for dictionary definitions. In Proceedings of the 5th Workshop on Cognitive Aspects of the Lexicon (CogALex-V), pages 176–184.

Vivian S Silva, André Freitas, and Siegfried Handschuh. 2019. Exploring knowledge graphs in an interpretable composite approach for text entailment. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, pages 7023–7030.

Vivian S Silva, Siegfried Handschuh, and André Freitas. 2018b. Recognizing and justifying text entailment through distributional navigation on definition graphs. In Thirty-Second AAAI Conference on Artificial Intelligence.

Kaitao Song, Xu Tan, Tao Qin, Jianfeng Lu, and Tie-Yan Liu. 2020. MPNet: Masked and permuted pre-training for language understanding. Advances in Neural Information Processing Systems, 33:16857–16867.

Alexandru Tifrea, Gary Bécigneul, and Octavian-Eugen Ganea. 2018. Poincaré GloVe: Hyperbolic word embeddings. In International Conference on Learning Representations.

Julien Tissier, Christophe Gravier, and Amaury Habrard. 2017. Dict2vec: Learning word embeddings using lexical dictionaries. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 254–263.

Hayato Tsukagoshi, Ryohei Sasano, and Koichi Takeda. 2021. DefSent: Sentence embeddings using definition sentences. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 2: Short Papers), pages 411–418.

Abraham A Ungar. 2001. Hyperbolic trigonometry and its application in the Poincaré ball model of hyperbolic geometry. Computers & Mathematics with Applications, 41(1-2):135–147.

Qitong Wang and Mohammed J Zaki. 2022. HG2Vec: Improved word embeddings from dictionary and thesaurus based heterogeneous graph. In Proceedings of the 29th International Conference on Computational Linguistics, pages 3154–3163.

Torsten Zesch, Christof Müller, and Iryna Gurevych. 2008. Using Wiktionary for computing semantic relatedness. In AAAI, volume 8, pages 861–866.

Wenyu Zhao, Dong Zhou, Lin Li, and Jinjun Chen. 2020. Manifold learning-based word representation refinement incorporating global and local information. In Proceedings of the 28th International Conference on Computational Linguistics, pages 3401–3412.

A Multi-Relational Embeddings

The multi-relational embeddings are trained on a total of ≈400k definitions, from which we are able to extract ≈2 million triples. We experiment with varying dimensions for both Euclidean and Hyperbolic embeddings (i.e., 40, 80, 200, and 300), training the models for a total of 300 iterations with batch size 128 and learning rate 50 on a 16GB Nvidia Tesla V100 GPU. The multi-relational models are optimised via a Bernoulli negative log-likelihood loss:

$$\mathcal{L}(y, p) = -\frac{1}{N} \sum_{i=1}^{N} \left( y^{(i)} \log\left(p^{(i)}\right) + \left(1 - y^{(i)}\right) \log\left(1 - p^{(i)}\right) \right) \tag{6}$$

where $p^{(i)}$ represents the prediction made by the model for the $i$-th example, $y^{(i)}$ represents the actual label, and $N$ is the number of examples.
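Eq. (6) is the standard binary cross-entropy averaged over the N training examples. The following Python sketch computes it under assumptions not stated in this section: each prediction $p^{(i)}$ is taken to be the logistic sigmoid of the model score for a triple (as in MuRP), positive triples are labelled 1 and sampled negatives 0, and the function names and the clipping constant `eps` are illustrative choices of ours.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bernoulli_nll(labels, probs, eps=1e-12):
    """Mean Bernoulli negative log-likelihood, Eq. (6).

    labels: 0/1 targets y^(i); probs: predicted probabilities p^(i).
    Probabilities are clipped to [eps, 1 - eps] so that log(0) never occurs.
    """
    if not labels or len(labels) != len(probs):
        raise ValueError("labels and probs must be non-empty and equally long")
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(labels)


# Hypothetical model scores for one positive and one negative triple.
scores = [2.0, -1.5]
labels = [1, 0]
loss = bernoulli_nll(labels, [sigmoid(s) for s in scores])
```

With uninformative predictions ($p^{(i)} = 0.5$ for every example) the loss equals $\log 2$, which gives a convenient sanity check for an implementation of Eq. (6).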