Wisconsin – ACT® Technical Manual

Prepared by: WDPI & ACT

Date: December 2022

ACT Technical Manual 2

Preface

The purpose of this manual is to provide the state of Wisconsin and its stakeholders with technical information regarding the ACT assessment. Additional information can be found in the ACT® Technical Manual, which provides technical information about the ACT assessment, including national-level reliability, scaling and equating, and validity evidence. This technical report provides Wisconsin-specific information based on the 2021–2022 academic year.

Students and educators continue to address unfinished learning resulting from the COVID-19 pandemic, and disruptions in earlier academic years may still have affected the 2021–2022 academic year. Despite these lingering effects, the Wisconsin Department of Public Instruction (WDPI) and local school districts enabled approximately 62,000 Wisconsin 11th graders to participate in the state-funded administration of the ACT in their classrooms during normal school hours.

The principal purpose of The ACT® Technical Manual is to document technical characteristics of the ACT® test in light of its intended uses and interpretations. The ACT Technical Manual documents the collection of validity evidence that supports appropriate interpretations of test scores and describes various content-related and psychometric aspects of the ACT. It articulates multiple test design and development processes, documenting how ACT builds the assessment in line with the validity argument and how concepts like construct validity, fairness, and accessibility are attended to throughout the process. Also described are routine analyses designed to support continuous improvement and research intended to ensure that the program remains both psychometrically and educationally sound.

We encourage individuals who want more detailed information on a topic discussed in this

manual, or on a related topic, to contact WDPI or ACT.

Commitment to Fair Testing

ACT endorses and is committed to complying with The Standards for Educational and

Psychological Testing (AERA, APA, & NCME, 2014). ACT also endorses the Code of Fair

Testing Practices in Education (Joint Committee on Testing Practices, 2004), which is a

statement of the obligations to test takers of those who develop, administer, or use educational

tests and test data in the following four areas: developing and selecting appropriate tests,

administering and scoring tests, reporting and interpreting test results, and informing test takers.

ACT endorses and is committed to complying with the Code of Professional Responsibilities in

Educational Measurement (NCME Ad Hoc Committee on the Development of a Code of Ethics,

1995), which is a statement of professional responsibilities for those involved with various

aspects of assessments, including development, marketing, interpretation, and use.

Table of Contents

PREFACE ..................................................................................................................................................... 2

COMMITMENT TO FAIR TESTING .................................................................................................................... 3

CHAPTER 1 THE ACT® ............................................................................................................................. 1-1

ACT’s Mission ..................................................................................................................................... 1-1

1.1 Philosophical Basis for the ACT ............................................................................................. 1-1

1.2 Overview of the ACT .............................................................................................................. 1-2

1.3 Purposes, Claims, Interpretations, and Uses of the ACT ....................................................... 1-3

1.4 Evidence-Based Design of the ACT Test ............................................................................... 1-5

1.5 ACT’s Commitment to Fair Testing ........................................................................................ 1-7

1.6 The Population Served by the ACT ........................................................................................ 1-8

1.7 Test Preparation ..................................................................................................................... 1-9

CHAPTER 2 THE ACT TEST DEVELOPMENT ............................................................................................... 2-1

2.1 Overview ................................................................................................................................. 2-1

2.2 Description of the ACT Tests .................................................................................................. 2-1

2.3 The ACT National Curriculum Survey .................................................................................... 2-2

2.4 Test Development Procedures ............................................................................................... 2-4

2.5 Test Development Procedures for the Writing Test ............................................................. 2-11

2.6 ACT Scores .......................................................................................................................... 2-13

CHAPTER 3 CONTENT SPECIFICATIONS ..................................................................................................... 3-1

3.1 Overview ................................................................................................................................. 3-1

3.2 English Test ............................................................................................................................ 3-1

3.3 Mathematics Test ................................................................................................................... 3-3

3.4 Reading Test .......................................................................................................................... 3-8

3.5 Science Test ......................................................................................................................... 3-11

3.6 Writing Test........................................................................................................................... 3-14

CHAPTER 4 TEST ADMINISTRATION, TEST SECURITY, AND ACCESSIBILITY AND ACCOMMODATIONS .............. 4-1

4.1 Test Administration Overview ................................................................................................. 4-1

4.2 Test Security ........................................................................................................................... 4-3

4.3 Test Administration and Accessibility Levels of Support ........................................................ 4-7

CHAPTER 5 SCORING AND REPORTING...................................................................................................... 5-1

5.1 Overview ................................................................................................................................. 5-1

5.2 Test Section, Composite, STEM, and ELA Scores ................................................................ 5-1

5.3 Detailed Performance Description ........................................................................................ 5-14

5.4 Progress Toward the ACT WorkKeys National Career Readiness Certificate Indicator ...... 5-18

5.5 ACT College and Career Readiness Standards................................................................... 5-19

5.6 ACT College Readiness Benchmarks .................................................................................. 5-26

CHAPTER 6 SCALING, EQUATING, AND TECHNICAL CHARACTERISTICS ........................................................ 6-1

6.1 Scaling and Equating of the ACT English, Mathematics, Reading, and Science Tests ......... 6-1

6.2 Scaling and Equating of the ACT Writing Test for ACT ELA Score Calculation .................... 6-4

6.3 Reliability and Measurement Error ......................................................................................... 6-5

6.4 Mode Comparability for Online Testing ................................................................................ 6-16

CHAPTER 7 VALIDITY EVIDENCE FOR THE ACT TESTS ............................................................................... 7-1

7.1 Using ACT Scores to Measure Educational Achievement ..................................................... 7-1

REFERENCES ........................................................................................................................ REFERENCES-1

APPENDIX A WISCONSIN STATE SUPPLEMENT: 2021‒2022 .......................................................... A-1

List of Tables

Table 1.1. Demographic Characteristics of Wisconsin State Contract Spring 2022 ACT Testers ............ 1-8

Table 2.1. ACT National Curriculum Survey 2020 Respondents............................................................... 2-4

Table 2.2. Difficultyᵃ Distributions and Mean Discriminationᵇ Indices for ACT Test Items, 2021–2022 .... 2-9

Table 2.3. ACT Test Items Exhibiting DIF Based on 2022 Wisconsin Student Data ................................ 2-11

Table 3.1. DOK Level Descriptions for English .......................................................................................... 3-2

Table 3.2. Specification Ranges by Reporting Category for English ......................................................... 3-3

Table 3.3. DOK Level Descriptions for Mathematics ................................................................................. 3-4

Table 3.4. Specification Ranges by Reporting Category for Mathematics ................................................ 3-8

Table 3.5. DOK Level Descriptions for Reading ........................................................................................ 3-9

Table 3.6. Specification Ranges by Reporting Category for Reading ..................................................... 3-10

Table 3.7. DOK Level Descriptions for Science ....................................................................................... 3-12

Table 3.8. Specification Ranges by Reporting Category for Science ...................................................... 3-13

Table 3.9. Specification Ranges by Science Content Area ..................................................................... 3-14

Table 3.10. DOK Level Description for Writing ........................................................................................ 3-14

Table 5.1. Writing Test Analytic Scoring Rubric......................................................................................... 5-5

Table 5.2. Sample of Quality Reports ........................................................................................................ 5-9

Table 5.3. Summary Statistics of the ACT Test Score Distributions for the Primary Form Administered in Wisconsin in Spring 2022 ....................................................................................... 5-12

Table 5.4. Summary Statistics of the ACT Test Score Distributions for the Accommodated Form Administered in Wisconsin in Spring 2022 ....................................................................................... 5-12

Table 5.5. Scale Score Covariances for Multiple-Choice Tests from the Primary ACT Test Form Administered in Wisconsin in Spring 2022 ....................................................................................... 5-14

Table 5.6. Range of Effective Weights of the ACT Tests Administered in Wisconsin in Spring 2022..... 5-14

Table 5.7. Correlations among the ACT Test Scores Administered in Wisconsin in Spring 2022 .......... 5-14

Table 5.8. Summary Statistics of the ACT Writing and Writing Domain Score Distributions for the Wisconsin Spring 2022 Administration ................................................................................. 5-17

Table 5.10. Illustrative Listing of Mathematics Item Difficulties by Score Range .................................... 5-22

Table 5.11. Number of ACT Items reviewed during the 1997 National Review ...................................... 5-22

Table 5.12. Percentage of Agreement of 1997 National Expert Review ................................................. 5-25

Table 5.13. ACT College Readiness Benchmarks .................................................................................. 5-27

Table 6.1. Scale Score Reliability and SEM for the Wisconsin Spring 2022 ACT Test Scores ................ 6-6

Table 6.3. Agreement Rates for the ACT Writing Domain Scores for the Wisconsin Spring 2022 Administration ............................................................................................ 6-10

Table 6.4. Classification Consistency for the Wisconsin Spring 2022 Performance Level Cut Scores... 6-14

Table 6.5. Classification Consistency for the Wisconsin Spring 2022 ACT Readiness Ranges ............. 6-15

Table 6.6. Composite Score Ranges for the ACT NCRC Levels............................................................. 6-16

List of Figures

Figure 1.1. The Full Picture: Evidence and Validity ................................................................................... 1-7

Figure 5.1. Overall Score and Percentile Rank on a Sample Interactive Score Report on MyACT .......... 5-2

Figure 5.2. Overall Score and Percentile Rank on a Sample ACT High School Score Report ................. 5-3

Figure 5.3. Detailed Results on a Sample Interactive Score Report on MyACT ..................................... 5-15

Figure 5.4. Detailed Results on a Sample ACT High School Score Report ............................................ 5-16

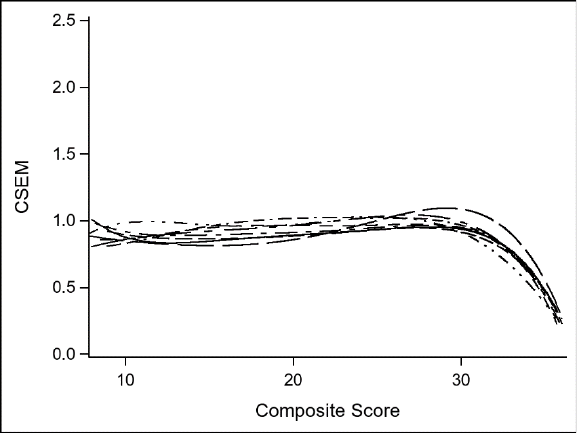

Figure 6.1. CSEM for Multiple-Choice Test Scores ................................................................................... 6-8

Figure 6.2. Average and Fitted CSEMs for ACT Writing Test Scale Scores ............................................. 6-9

Figure 6.3. CSEM for Composite Scores ................................................................................................. 6-11

Figure 6.4. CSEM for STEM Scores .......................................................................................................... 6-12

Figure 6.5. CSEM for ELA Scores ........................................................................................................... 6-13

Chapter 1

The ACT®

ACT’s Mission

ACT has been dedicated to improving college and career readiness for all students since its inception in 1959. ACT’s renowned longitudinal system of assessments, with the ACT® test as a capstone, has provided students, educators, and policymakers with unparalleled measures of college and career readiness. ACT’s mission is helping people achieve education and workplace success.

1.1 Philosophical Basis for the ACT

Underlying the ACT is the belief that students’ preparation for college and the workplace is best

assessed by measuring, as directly as possible, the skills learned in high school that are

required for success in college-level courses. The required academic skills can be assessed

most directly by reproducing, as faithfully as possible, the complexity of the work students do in

the classroom. Therefore, ACT’s tests of educational achievement are designed to determine

how skillfully students solve problems, grasp implied meanings, draw inferences, evaluate

ideas, and make judgments in subject-matter areas important to success in college.

The ACT is oriented toward the general content areas of college and high school instructional

programs. The test questions require students to integrate the knowledge and skills they

possess in major curriculum areas with the information provided by the test. Thus, scores on the

test are directly related to the students’ educational progress in curriculum-related areas and

possess meaning that is readily grasped by students, parents, and educators.

The constructs measured by the ACT section tests are supported by multiple sources of validity

evidence (see Chapter 7). For example, ACT has, for many years, collected longitudinal

statistical evidence backing the strong relationship between student performance on the section

tests and student performance in entry-level courses in the corresponding subjects. More recent

methodologies such as cognitive labs have served to further confirm this evidence.

Because tests of educational achievement measure many of the skills taught in high school, the

best preparation for achievement tests is rigorous high school coursework. Long-term learning

in school, rather than short-term cramming and coaching, becomes the obvious best form of test

preparation. Thus, educational achievement tests serve as motivators by sending students a

clear message that high test scores reflect not simply innate ability but a level of achievement

that has been reached as a result of hard work.

The ACT requires students to apply critical thinking skills when comprehending complex texts,

analyzing data displays showing the results of scientific experiments, producing effective

argumentative writing, and solving sophisticated mathematics problems. Therefore, in order to

acquire such skills and achieve high scores on the ACT, students may be influenced to choose

challenging coursework in high school. In this way, the ACT may help high schools develop their

students’ critical thinking skills, which will be important for success in college and later life. Thus,

the ACT is designed not only to accurately reflect educational goals that are widely considered

important by educators, but also to emphasize the importance of a student’s educational

decisions.

1.2 Overview of the ACT

The ACT emphasizes students’ academic preparedness by directly addressing the content

domains students must master to achieve college and career readiness. The main component

of the ACT is a standardized battery of four tests of educational achievement—English,

mathematics, reading, and science—along with an optional writing test. Through ACT’s online

registration and data collection system (MyACT), ACT also collects information about students’

high school courses and grades, educational and career aspirations, extracurricular activities,

and educational needs.

The ACT provides information about how well a student performs compared to other students. It

also provides standards-based interpretations through ACT’s College and Career Readiness

Standards (CCRS)—empirically derived descriptions of the essential skills and knowledge

students need in order to become ready for college and career success. Using the CCRS,

secondary educators can pinpoint the skills students have and those they are ready to learn

next. The CCRS clarify college expectations in terms that high school teachers understand. The

CCRS also offer teachers guidance for improving instruction to help correct student deficiencies

in specific areas. ACT’s College Readiness Benchmarks are the minimum scores associated

with a high likelihood of postsecondary success in each content area. Together, the CCRS and

the Benchmarks provide students specific insights to support success in college and career.

Chapter 5 gives details about the CCRS and Benchmarks.

1.3 Purposes, Claims, Interpretations, and Uses of the ACT

The purposes, claims, interpretations, and uses of the ACT are reflected in a theory of action

that integrates evidence supporting content validity (academic research, curriculum information,

and academic standards) with predictive validity (empirical data). The theory of action begins by

answering fundamental questions about the purpose, users, uses, benefits, claims,

interpretations, and outcomes of the test.

Intended Purpose. The primary purpose of the ACT is to measure students’ level of college

and career readiness in core academic areas. ACT testing is intended to help high school

students develop postsecondary educational plans and to help postsecondary educational

institutions meet the needs of their students.

In service of the intended purpose, the ACT provides an overall Composite score and scores for

each of the section tests and the optional writing test. The test also provides a measure of

students’ STEM (science, technology, engineering, and math) skills (by combining mathematics

and science scores), an Understanding of Complex Texts (UCT) indicator, and an ELA (English

language arts) score (by combining English, reading, and writing scores; only students who take

the writing test can receive an ELA score). The test also provides information about student

achievement at a more detailed level through the reporting category scores on each test

section.
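
The paragraph above does not spell out the arithmetic behind the combined scores. As a minimal sketch, assuming the commonly published rule that the Composite is the average of the four section scores and the STEM score is the average of the mathematics and science scores, each rounded to the nearest whole number with halves rounded up, the calculation could look like the following. The function names and sample scores are hypothetical; Chapter 5 remains the authoritative description, and the ELA score is omitted because it also depends on how the writing score is scaled.

```python
import math


def round_half_up(value: float) -> int:
    """Round to the nearest whole number, rounding halves up (assumed rule)."""
    return math.floor(value + 0.5)


def composite_score(english: int, mathematics: int, reading: int, science: int) -> int:
    """Assumed rule: average of the four section scale scores, rounded half up."""
    return round_half_up((english + mathematics + reading + science) / 4)


def stem_score(mathematics: int, science: int) -> int:
    """Assumed rule: average of mathematics and science scale scores, rounded half up."""
    return round_half_up((mathematics + science) / 2)


# Hypothetical student with section scores on the 1-36 scale.
scores = {"english": 24, "mathematics": 27, "reading": 22, "science": 25}

print(composite_score(**scores))                              # (24+27+22+25)/4 = 24.5 -> 25
print(stem_score(scores["mathematics"], scores["science"]))   # (27+25)/2 = 26
```

Python's built-in `round` rounds halves to the nearest even number, so the sketch uses an explicit half-up helper instead.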

Intended Users. Primary intended users of the ACT test include high school students

(typically in Grades 11 and 12), the educational agencies or organizations supporting the

academic preparation of these students (i.e., schools, districts, and states), postsecondary

institutions, and talent recognition and scholarship agencies.

Intended Uses. ACT test data, test scores, and score interpretations have several intended

uses. Students use their results to plan for further education and explore careers based on their

skills, interests, and aspirations. High schools use ACT data in academic advising and

counseling, evaluation studies, accreditation documentation, and public relations.

Postsecondary institutions use ACT results to support admission and course placement

decisions. States use the ACT as part of their statewide assessment systems to measure

students’ educational achievement, to monitor educational improvement and achievement gaps

over time, and to meet federal accountability requirements. Many private, state, and national

agencies that provide scholarships, loans, and other types of financial assistance use ACT test

scores to help assess students’ academic qualifications. Agencies also use ACT data to identify

academically talented students as early as middle school.

Intended Benefits. The ACT test benefits its users by

• allowing students to demonstrate the knowledge and skills gained throughout

educational coursework in English, mathematics, reading, science, and writing;

• providing students with a profile of their relative strengths and weaknesses in the

subject areas assessed by the test, thereby informing students about what they know

and can do (based on the College and Career Readiness Standards);

• providing parents with insights about their students’ knowledge and skills;

• providing educators (in schools, districts, and states) with information about their

students’ knowledge and skills;

• encouraging students to better prepare for college and careers by taking courses

linked to positive postsecondary outcomes;

• indicating whether a student is likely ready for college-level coursework or a work training program (based on the College and Career Readiness Benchmarks and the Progress Toward the ACT® WorkKeys® National Career Readiness Certificate® (NCRC®) indicator); and

• providing colleges and talent identification and scholarship agencies with information

about students’ level of achievement in the subject areas assessed by the test.

Interpretations and Claims. The interpretations and claims of the ACT include the following:

• The ACT measures academic knowledge and skills that are acquired in high school

and are important for college-level coursework in English, mathematics, reading,

science, and writing.

• ACT scores can be used in combination with other relevant measures to estimate

students’ likelihood of success in college during the first year and beyond and to help

inform college admission, course placement, and remediation decisions.

• ACT scores can be used in aggregate for monitoring educational improvement and

achievement gaps over time, as well as assisting with evaluating the effectiveness of

school and district programs when a school administers the ACT to all its students.

• MyACT includes the ACT Interest Inventory (ACT, 2009b), which is based on

research about career planning, to point students toward a range of good-fit options

to consider. In the process of exploration, students can focus on educational and

occupational options that are relevant to future satisfaction and success. The ACT

Interest Inventory results, when used in conjunction with ACT test scores, provide a

more holistic picture of the student’s educational development and career-relevant

motivations.

Intended Outcomes. Using the results of the ACT in conjunction with other academic and

non-academic measures can help

• students, parents, and educators to identify academic knowledge and skills in which

students might benefit from additional instruction and supports while still in high

school to better prepare for college and career and avoid taking remedial or

developmental courses in their first year of college;

• students to expand their educational and occupational exploration beyond options

initially considered based on students’ academic strengths and weaknesses and

interests measured by the ACT Interest Inventory (ACT, 2009b) or through ACT’s

Educational Opportunity Service (Moore & Cruce, 2017);

• schools and districts to raise college awareness and exposure when all students take

the ACT through state or district testing;

• schools and districts to evaluate student growth and identify gaps in educational

achievement in order to better understand which school programs are effective in

preparing all students for college and career;

• postsecondary institutions to select students for admission who are likely to enroll at

the institution and, once enrolled, likely to succeed in their college courses and

complete a college degree at the institution;

• postsecondary institutions to place students in first-year college courses in which

they are most likely to be successful; and

• postsecondary institutions to identify students early on who are most likely to

struggle academically, who may be at risk of dropping out of college, and who may

benefit from institutional academic services and supports in order to successfully

transition from high school to college.

1.4 Evidence-Based Design of the ACT Test

The design of the ACT test emerges from an evidence-based research and data collection

process that ensures that items and test forms elicit the evidence necessary to support the

claims of the ACT. For example, content and item specifications and test blueprints influence

the technical quality of test items and forms. The ACT design is informed by several factors,

including the following:

• Subject-matter experts (SMEs)

• Academic research on skill targets, sequencing of skills, and grade placement

• Data and evidence of student understanding collected from the ACT test

• The ACT® National Curriculum Survey®

• A survey of standards frameworks—including, but not limited to, the ACT College

and Career Readiness Standards, the Next Generation Science Standards, and

other college and career readiness standards

The validity argument is further supported with criterion-related longitudinal evidence from

students who complete the ACT and then go on to colleges (two-year and four-year) and

career-training programs.

While SMEs can identify copious skills covered by a typical high school curriculum, not all skills

and knowledge are essential for postsecondary success, nor will measuring every skill help

identify lower- and higher-achieving students. For example, some skills essential for success

may be attained by more than 95 percent of students continuing on to postsecondary education,

and including items that measure such skills on a test only increases test length without

contributing to predicting postsecondary success.
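
One way to see why such items add little: a right/wrong item answered correctly by a proportion p of examinees has score variance p(1 − p), which shrinks toward zero as p approaches 1. The sketch below illustrates this with the standard formula for a 0/1 variable; it is not ACT's item-selection procedure, and the function name is hypothetical.

```python
def item_variance(p_correct: float) -> float:
    """Variance of a 0/1 item score when a proportion p_correct answers correctly."""
    return p_correct * (1.0 - p_correct)


# Compare a mid-difficulty item with items that nearly everyone answers correctly.
for p in (0.50, 0.75, 0.95):
    print(f"p = {p:.2f}  variance = {item_variance(p):.4f}")
```

An item at p = 0.95 carries less than one fifth of the score variance of an item at p = 0.50, so it does little to separate lower- and higher-achieving students.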

Similarly, ACT research demonstrates that there are often discrepancies between skills high

school educators see as relevant to success and the expectations and experience of college

faculty. Again, ACT uses data from a national sample of institutions, academic programs, and

college majors to prioritize the skills and knowledge clearly linked to student success.

ACT supplements these other sources of data with subject-matter expertise. ACT’s test

development staff has extensive classroom experience in the subjects tested by the ACT.

The first step in developing the ACT was to synthesize research on high-value skill targets—the

skill targets that can be shown to offer the most useful evidence of college and career

readiness. This evidence was obtained by organizing the knowledge and skills identified by

educators and contained in educational standards into the assessment content framework.

The next step was to use this research to develop content specifications and task models that

articulated the evidence needed to monitor student progress. Tasks were then generated from

these specifications and assembled into test forms based on test blueprints.

The test blueprints specify constraints on various factors, including, but not limited to, content

coverage, item difficulty, cognitive complexity, reading load, and the time required for an item.

Test forms are then administered, and student performance data are collected.

Figure 1.1 helps illustrate how a validity argument is composed of multiple sources of research, empirical data, and other forms of evidence. Content validity is shown to be based in research. Predictive validity information flows in primarily from the ACT and, to a lesser extent, the ACT® WorkKeys® assessments. Both channels supply information about which knowledge and skills are needed to perform well on the ACT, thus supporting an iterative model of refinement that serves the common goal of determining whether a student is college and career ready.

Figure 1.1. The Full Picture: Evidence and Validity

1.5 ACT’s Commitment to Fair Testing

Fairness is an essential quality of testing related to issues such as testing experience, possible

measurement bias, equitable score interpretations, and students’ ability to accurately

demonstrate the extent of their knowledge and skills (i.e., accessibility). Since publication of the

original edition in 1988, ACT has endorsed the Code of Fair Testing Practices in Education

(Code; Joint Committee on Testing Practices, 2004), a statement of the obligations to test

takers of those who develop, administer, or use educational tests and test data. The

development of the Code was sponsored by a joint committee including the American

Counseling Association, the American Educational Research Association, the American

Psychological Association, the American Speech-Language-Hearing Association, the National

Association of School Psychologists, the National Association of Test Directors, and the

National Council on Measurement in Education, to advance, in the public interest, the quality of

testing practices.

The Code sets forth fairness criteria in four areas: developing and selecting appropriate tests,

administering and scoring tests, reporting and interpreting test results, and informing test takers.

Separate standards are provided for test developers and for test users in each of these four

areas. According to the Code, for example, test developers should provide “tests that are fair to

all test takers regardless of age, gender, disability, race, ethnicity, national origin, religion,

sexual orientation, linguistic background, or other personal characteristics” (p. 2). Test

developers should “avoid potentially offensive content or language” (p. 4) and “evaluate the

evidence to ensure that differences in performance are related to the skills being assessed” (p.

4). ACT’s endorsement of the Code represents a commitment to vigorously safeguarding the

rights of individuals participating in its testing programs.

Similarly, ACT endorses, and is committed to complying with, the Code of Professional

Responsibilities in Educational Measurement (NCME Ad Hoc Committee on the Development of

a Code of Ethics, 1995), a statement of professional responsibilities for those who develop

assessments; market and sell assessments; select assessments; administer assessments;

interpret, use, and communicate assessment results; educate about assessments; and evaluate

programs and conduct research on assessments. One of those responsibilities is to “develop

assessment products and services that are as free as possible from bias due to characteristics

irrelevant to the construct being measured” (Section 1.2).

Ensuring fairness in a test is a critically important goal. Lack of fairness must be detected,

eliminated, and prevented at all stages of test development, test administration, and scoring.

The work of ensuring fairness starts with the design of the test and test specifications. It then

continues through every stage of the test development process, including item writing and

review, item pretesting, item selection and forms construction, and forms review. ACT makes

every effort to see that ACT tests are fair to the populations for which the tests are intended and

is committed to participating in ongoing dialogues about assessment fairness.

1.6 The Population Served by the ACT

During the spring of 2022, WDPI contracted with ACT to provide the ACT with writing to public

school and parental choice 11th-grade students during regular school hours at schools certified

as ACT state testing sites. The analyses reported in this technical manual are based on 61,666

student records from the spring 2022 administration of the ACT in the state-sponsored school-day

testing in Wisconsin.

Table 1.1. Demographic Characteristics of Wisconsin State Contract Spring 2022 ACT Testers

Demographic                               Percentage         N
Gender
  Female                                          46    28,555
  Male                                            48    29,837
  Other Gender                                     1       669
  No Response                                      2     1,034
  Prefer Not to Respond                            3     1,571
Racial/Ethnic Background
  American Indian/Alaska Native                    1       500
  Asian                                            4     2,270
  Black/African American                           6     3,612
  Hawaii Native/Other Pacific Islander            <1        60
  Hispanic/Latino                                 12     7,389
  White                                           66    40,651
  Two or More Races                                4     2,640
  Prefer Not to Respond                            7     4,544

Notes: Due to rounding, some values may not add to exactly 100%. Information in this table can also be

found in Appendix Table 1.
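
The rounding behind Table 1.1 can be reproduced with a short calculation. The sketch below uses the racial/ethnic counts from the table; the function name `display_percentage` and the rule of printing "<1" for a nonzero share that rounds to zero are illustrative assumptions, not a description of how the table was actually produced.

```python
# Counts copied from the Racial/Ethnic Background rows of Table 1.1.
race_counts = {
    "American Indian/Alaska Native": 500,
    "Asian": 2_270,
    "Black/African American": 3_612,
    "Hawaii Native/Other Pacific Islander": 60,
    "Hispanic/Latino": 7_389,
    "White": 40_651,
    "Two or More Races": 2_640,
    "Prefer Not to Respond": 4_544,
}

total = sum(race_counts.values())  # should equal the 61,666 records cited in Section 1.6


def display_percentage(n, total):
    """Whole-number percentage, shown as '<1' when a nonzero share rounds to zero (assumed rule)."""
    pct = round(100 * n / total)
    return "<1" if pct == 0 and n > 0 else str(pct)


percentages = {group: display_percentage(n, total) for group, n in race_counts.items()}

for group, pct in percentages.items():
    print(f"{group:<40}{pct:>4}{race_counts[group]:>10,}")
```

Because each share is rounded independently, the displayed percentages are not guaranteed to sum to exactly 100, which is the caveat stated in the table notes.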

1.7 Test Preparation

Awareness of and exposure to an assessment prior to taking it is important in order for students

to feel comfortable and confident. ACT offers a variety of free and affordable test preparation

solutions for students, parents, and educators.

• Preparing for the ACT Test. Includes a full-length practice test, test-taking

strategies, and information about what to expect on test day. This publication is

available in English and Spanish as a free download for teachers, students, parents,

and others.

o English: www.act.org/content/dam/act/unsecured/documents/Preparing-for-the-ACT.pdf

o Spanish: https://www.act.org/content/dam/act/unsecured/documents/Preparing-for-the-ACT-Spanish.pdf

• ACT Official Online Practice Test. ACT provides free access to a full-length

practice test that simulates an online testing experience. Students may access both

timed and untimed practice tests for each test section. Students may sign into each

of the section tests as often as they wish in order to become comfortable with the

testing.

• Alternate Assessment Format Samples. Students who will test with alternate

formats of the assessment can prepare by practicing with one of our alternate format

samples. Braille, large print, audio, and reader’s script formats are available at no

cost and contain a full-length practice test.

• ACT Online Prep. Provides students with an interactive test preparation

experience that can be accessed anytime online and includes both structured and

adaptive paths. It includes personalized learning paths, practice tests with real ACT

test questions, and comprehensive content review.

• ACT Question of the Day. We post a daily test question to MyACT to provide

students with an opportunity for quick daily practice. Students and teachers can opt

to receive a weekly email reviewing the questions posted the week before.

• Powered by Kaplan. ACT has partnered with Kaplan to publish three official test

preparation products:

o Self-Paced Course: Delivers bite-sized video lessons on demand so students

can learn anywhere, anytime.

o Live Online Classes: Our top-rated teachers show students what to study and

how to study during a series of engaging live classes.

o Tutoring: Led by expert tutors, students learn test content and strategies in these

one-on-one online tutoring sessions. Our instructors adapt to the student’s needs

and provide each student with personalized attention and recommendations.

Chapter 2

The ACT Test Development

2.1 Overview

This chapter describes ACT’s test development process—including item and form development

procedures. The following principles have shaped and will continue to drive ACT’s development

agenda:

1. Report results in instructionally relevant ways that support clear interpretation within

content areas.

2. Maintain reasonable testing times by assessing what research and evidence show to be

the most critical factors for success after high school.

3. Leverage technology to enhance student engagement, produce more meaningful

results, and share results in a timely fashion.

4. Increase the emphasis on evidence-centered design, implement best practices as they

mature, and improve ACT’s capabilities to enact the highest-quality design and

development processes.

5. Include science as a core academic domain in ACT’s assessment batteries.

6. Reflect the research-validated reality that there are multiple dimensions of readiness and

success.

As a nonprofit educational research organization, ACT uses these principles to drive the

development and continuous improvement of ACT’s education and workplace solutions, as well

as the research agenda associated with them, thereby enabling ACT to fulfill its mission of

helping all individuals achieve education and workplace success.

This chapter provides brief overviews of the ACT® National Curriculum Survey®, the content and

bias review process, and the statistical criteria for selecting operational items and assembling

forms. This chapter concludes with a high-level explanation of the ACT scoring procedures,

including descriptions of additional scores and indicators.

2.2 Description of the ACT Tests

The ACT® test contains four sections (English, mathematics, reading, and science) and an

optional writing test. These tests measure important content, skills, and concepts taught in high

school and needed for success in college and career. The content specifications describing the

knowledge and skills to be measured by the ACT were determined through a detailed analysis

of relevant information. ACT uses direct feedback from current high school and postsecondary

teachers (via the ACT National Curriculum Survey, as well as through external review of test

items) and student data from the ACT and from grades earned in postsecondary courses.

These data are used to verify that the ACT measures knowledge and skills empirically linked to

postsecondary and career success. The ACT National Curriculum Survey is described in the

subsequent section of this chapter. Information about the specific knowledge and skills

measured by each test is provided in Chapter 3. Chapter 7 describes sources of validity

evidence supporting the interpretation of ACT scores.

2.3 The ACT National Curriculum Survey

The ACT National Curriculum Survey is a one-of-a-kind nationwide survey, conducted by ACT

every few years, of educational practices and college and career readiness expectations (ACT,

2007, 2009a, 2013a, 2016a, 2020). The ACT National Curriculum Survey embodies ACT’s

commitment to ensuring not only that the assessments are consistently valid and relevant but

also that they provide information enabling students and workers to be fully ready to embark

successfully on rewarding college and career journeys.

ACT surveys thousands of K–12 teachers and college instructors in English and writing,

mathematics, reading, and science, as well as a national cross section of workforce supervisors

and employees, for the purpose of determining which skills and knowledge in these subjects are

currently being taught at each grade level and which skills and knowledge are currently

considered essential aspects of college and career readiness.

Questions are also included about which skills from the ACT® Holistic Framework®, a research-based

framework that integrates behavioral skills, education and career navigation skills, core

academic skills, and cross-cutting capabilities (such as teamwork and critical thinking), are

most integral to college and career success.

ACT uses the results of the ACT National Curriculum Survey to guide the development of ACT

assessment solutions, including the ACT test, the PreACT®, and ACT® WorkKeys®. ACT

conducts the survey to ensure that its assessments are measuring the knowledge and skills that

instructors of credit-bearing, first-year college courses identify as important for success in each

content area or that workforce supervisors identify as important for readiness for targeted

workforce training and for success on the job.

ACT makes the results of each ACT National Curriculum Survey public to help education and

workforce stakeholders make more informed decisions about the skills needed to be successful

in postsecondary education and the workplace.

2.3.1 The Purpose of the ACT National Curriculum Survey

The ACT National Curriculum Survey is a crucial step in the process of building and regularly

updating a suite of ACT assessments that is empirically aligned to college readiness standards.

Survey results help address a critical question: Does the test measure knowledge and skills

currently relevant to college and career success? Ultimately, the survey data inform the

blueprints for the assessments. Subsequently, results from the assessments are used to

validate ACT’s College and Career Readiness Standards as well as its College and Career

Readiness Benchmarks.

Equally important is predictive validity. Using postsecondary course performance data, ACT

answers a second critical question: Does the test accurately predict postsecondary

performance? Constant monitoring allows ACT to ensure that the answer to both questions is

“yes.”

ACT uses the findings from the ACT National Curriculum Survey to monitor the test blueprints.

This process ensures that the assessments measure not only what is being taught in schools

around the country but also what demonstrably matters most for college and career readiness.

To maintain relevancy and currency, it is important that assessments be built upon up-to-date

evidence of what knowledge and skills matter most according to the assessment context and

purpose.

The science behind ACT assessments—that is, the evidence base and ongoing research—is

critical to answering the key question of what matters most for college and career readiness.

The ACT National Curriculum Survey represents ACT’s commitment to

• use evidence and research to develop and validate ACT standards, assessments, and

benchmarks;

• maintain a robust research agenda to report on key educational metrics; and

• develop assessments, reports, and interventions that will help individuals navigate their

personal path to success along the kindergarten-through-career continuum.

2.3.2 Survey Sample and Process

For the 2020 ACT National Curriculum Survey, ACT recruited participants via various print and

electronic methods (e.g., advertisements, email, social media) and invited participation from

educators at the early elementary school, late elementary school, middle school, high school,

and college levels who teach courses in English and writing, mathematics, reading (including

English language arts and social studies), and science (including biology, chemistry, physics,

and earth and space science) in public and private institutions across the United States. ACT

also invited participation from supervisors and employees at a large variety of businesses. Table

2.1 gives the number of survey respondents in each area.

Table 2.1. ACT National Curriculum Survey 2020 Respondents

Area                        Number of Respondents
Early Elementary School                     1,214
Late Elementary School                      1,213
Middle School                               1,623
High School                                 1,619
K–12 Administrators                           405
College Instructors                         2,883
Workforce Supervisors                         405
Workforce Employees                           406
Total                                       9,768

Education participants were asked to rate discrete content knowledge and skills with respect to

how important each is to student success in the content area. Specifically, K–12 teachers were

asked to rate the importance of content knowledge and skills in a given class they teach, while

college instructors were asked to rate the importance of content knowledge and skills as

prerequisites to success in a given class they teach.

ACT also asked the K–12 teachers to indicate whether they teach particular content knowledge

or skills and, if so, whether those knowledge or skills are taught as standard parts of their

courses or as part of a review of materials that should have been learned earlier. Some

education participants were also asked other content-related questions depending on the grade

level they taught.

Workforce participants were asked to rate discrete skills with respect to how important each is to

success in entry-level positions. ACT also asked workforce participants to indicate how often

employees in their workplace use each of these skills on the job.

Finally, ACT asked all participants questions relevant to current education policy issues (e.g.,

assessments, technology, standards, student characteristics, and obstacles to success). All

results are discussed in the report for the ACT National Curriculum Survey 2020 (ACT, 2020).

To ensure that no single content area would have more influence than another on results, the

educational-level totals were averaged across English language arts, mathematics, and

science.

2.4 Test Development Procedures

2.4.1 Test Specifications Overview

As described below, two major types of test specifications are used in developing the ACT tests:

content specifications and statistical specifications. Several other considerations are made

when new test forms are created, such as meeting passage and item word count requirements,

avoiding very long strings of the same response option, and preventing extreme imbalance in

the distribution of response options.

Content specifications. Content specifications for the ACT tests were developed through the

curricular analysis discussed above. Those specifications define the approximate number of

items from each reporting category and cognitive complexity level on a test form. They also set

expectations for diverse representation in passages in terms of gender, ethnicity, region, and

community type (urban or rural). To support validity and fairness, ACT ensures that the content

specifications include only knowledge and skills aligned to the intended purposes of the test. To

include anything else in the content specifications would invite construct-irrelevant variance that

could unfairly impact students’ scores. While care is taken to ensure that the basic structure of

each ACT test remains the same from year to year, the specific characteristics of the test items

used in each specification category are reviewed regularly. While the general content of the test

remains constant, the particular kinds of items in a specification category may change slightly.

The basic content structure of each ACT test is provided in Chapter 3.

Statistical specifications. Statistical specifications for the tests indicate the average level of

item difficulty (proportion correct), the distribution of item difficulties, and the minimum

acceptable level of discrimination (biserial correlation) of the test items to be used.

The tests are constructed with a certain target mean item difficulty for the ACT population in

each subject area. Individual item difficulty must fall within a range from about 0.15 to 0.89 for

mathematics and about 0.20 to 0.85 for English, reading, and science. The difference mainly

reflects the fact that mathematics items have five answer options, but other items offer only four

answer options. The statistical specifications also prescribe approximate numbers of items with

difficulties falling in certain ranges (0.10–0.19, 0.20–0.29, and so forth), which ensures that each

test form includes a mix of low-, moderate-, and high-difficulty items. This specification helps

ensure that test scores are reliable for students across the spectrum of achievement levels.
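
As an illustration of the difficulty specification, the sketch below computes item difficulty as the proportion of examinees answering correctly and checks it against the ranges quoted above. The names `DIFFICULTY_RANGE`, `item_difficulty`, and `within_spec` are hypothetical, the response matrix is invented, and treating the "about" bounds as inclusive cutoffs is an assumption made for the example.

```python
import numpy as np

# Approximate acceptable ranges quoted in the text (treated here as inclusive bounds).
DIFFICULTY_RANGE = {
    "mathematics": (0.15, 0.89),
    "english": (0.20, 0.85),
    "reading": (0.20, 0.85),
    "science": (0.20, 0.85),
}


def item_difficulty(scored_responses):
    """Proportion correct per item; rows are examinees, columns are items (1 = correct)."""
    return np.asarray(scored_responses, dtype=float).mean(axis=0)


def within_spec(p_values, subject):
    """Boolean flag per item: does its difficulty fall inside the subject's range?"""
    low, high = DIFFICULTY_RANGE[subject]
    p = np.asarray(p_values)
    return (p >= low) & (p <= high)


# Invented scored responses: 5 examinees by 3 items.
scored = [
    [1, 0, 1],
    [1, 1, 1],
    [1, 1, 1],
    [0, 0, 1],
    [1, 0, 1],
]

p_values = item_difficulty(scored)        # proportions 0.8, 0.4, 1.0
flags = within_spec(p_values, "english")  # third item is too easy for the range
```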

With respect to discrimination indices, items should have a biserial correlation of 0.20 or higher

with test scores measuring comparable content. Thus, for example, performance on

mathematics items should correlate 0.20 or higher with overall performance on the mathematics

test. Such items help identify students with lower and higher levels of achievement, thereby

contributing to the reliability of test scores.
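
The discrimination criterion can be sketched with the textbook formulas for the point-biserial and biserial correlations. This is a minimal illustration using only the Python standard library; the function names and the ten-examinee data set are invented, and the sketch does not claim to reproduce ACT's operational computation.

```python
from math import sqrt
from statistics import NormalDist, fmean, pstdev


def point_biserial(item_scores, total_scores):
    """Correlation between a 0/1 item score and the total test score."""
    p = fmean(item_scores)  # proportion answering the item correctly
    mean_correct = fmean([t for i, t in zip(item_scores, total_scores) if i == 1])
    mean_incorrect = fmean([t for i, t in zip(item_scores, total_scores) if i == 0])
    return (mean_correct - mean_incorrect) / pstdev(total_scores) * sqrt(p * (1 - p))


def biserial(item_scores, total_scores):
    """Biserial correlation: treats the 0/1 score as a cut on a latent normal variable."""
    p = fmean(item_scores)
    ordinate = NormalDist().pdf(NormalDist().inv_cdf(p))  # normal density at the cut point
    return point_biserial(item_scores, total_scores) * sqrt(p * (1 - p)) / ordinate


# Invented data: one item's 0/1 scores and the matching total scores.
item = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
total = [34, 30, 28, 25, 27, 22, 18, 20, 31, 15]

r_pb = point_biserial(item, total)
r_b = biserial(item, total)
meets_spec = r_b >= 0.20  # threshold quoted in the statistical specifications
```

For a positively discriminating item, the biserial value is larger in magnitude than the point-biserial value computed from the same data, so the two indices should not be compared against the same threshold without care.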

2.4.2 Item Writers

ACT relies primarily on internal content specialists to develop items. Content specialists are

subject matter experts, trained in the disciplines for which they write items. Most have

experience in teaching at various levels, from high school to university, and at a variety of

institutions, from small private schools to large public institutions. ACT makes every attempt to

include item writers who represent the diversity of the population of the United States with

respect to ethnic background, gender, and geographic location.

Each content specialist is familiar with an item writer’s guide that is specific to the content area.

The guides include example items, test specifications, and ACT’s requirements for content and

style. Also included are specifications for the fair portrayal of all groups, which includes

avoidance of subject matter that may be unfamiliar to members of certain groups within society,

a balanced representation of race/ethnicity, and gender-neutral language. Item development

assignments are balanced among content specialists to ensure a diversity of material.

Depending on development needs, ACT may contract with external item writers or make use of

automated item generation. Externally contracted item writers are also specialists in the content

areas measured by the test and typically have teaching experience. Each potential item writer is

required to submit a sample set of materials (written using the item writer’s guide) for ACT’s

evaluation. Item writers contracted with ACT are held to the same high-quality standards as

internal content specialists, and the same attempts to maintain diversity of material and security

of the testing program are made. Automated item generation makes use of models with

interchangeable elements based on items that were administered in the past and exhibited

desirable statistical properties (e.g., difficulty and discrimination).
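
The idea of an item model with interchangeable elements can be illustrated with a toy template. Everything in this sketch is hypothetical: the stem, the slot values, and the distractor rules are invented for illustration and are not drawn from any ACT item model. Five options are generated because mathematics items, as noted earlier, have five answer options.

```python
from itertools import product

# Hypothetical item model: a fixed stem with two interchangeable numeric slots.
STEM = (
    "A rectangle has a length of {length} cm and a width of {width} cm. "
    "What is its area, in square centimeters?"
)


def generate_items(lengths, widths):
    """Fill the template with every slot combination that yields five distinct options."""
    items = []
    for length, width in product(lengths, widths):
        if length <= width:
            continue  # keep only variants where the length exceeds the width
        key = length * width
        # Distractors modeled on plausible errors: perimeter, sum of sides, squared sides.
        distractors = {2 * (length + width), length + width, length ** 2, width ** 2}
        options = sorted(distractors | {key})
        if len(options) != 5:
            continue  # a distractor collided with the key or with another distractor
        items.append(
            {"stem": STEM.format(length=length, width=width), "options": options, "key": key}
        )
    return items


items = generate_items(lengths=[6, 8], widths=[3, 4])
```

In this example the 6-by-3 variant is discarded because its perimeter equals its area, which shows why generated variants still need screening and review before use.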

2.4.3 Item Writing

Item-writing assignments are driven by the test blueprint and item pool analyses, with the goal

of attaining a wide range of high-quality items to elicit evidence of the knowledge, skills, and

abilities measured in each test. A typical assignment is tied to an evidence-based item

template and focuses on a skill statement that the item needs to assess. Included in each

template is a set of statements describing what evidence of students’ knowledge and skills

should be elicited by the item.

Assignments are constructed through ACT’s item authoring system. This system also contains

item metadata, information about the item flow through the stages of development, comments

from reviewers, and item quality metrics.

All items must be educationally important and psychometrically sound. Many items must be

constructed because, even with good writers, many pretested items fail to meet ACT’s

standards.

Each item writer submits a set of items in a given content area. All mathematics items

developed recently are discrete (not passage based); some older items belong to a set (i.e.,

several items based on the same paragraph or chart). All items on the English and reading tests

are related to prose passages. Some reading items may be related to visual or quantitative

information, such as graphs and tables, attached to a passage. All items on the science test are

related to passages that contain data presentations such as graphs and tables.

2.4.4 Review of Items

Content Review

After an item (or set of items) is written, it is reviewed several times by numerous content

specialists to verify that it meets all of ACT’s standards. It is edited to meet ACT’s specifications

for content accuracy, word count, item classification, item format, and language. During the

review and editing process, all test materials are reviewed for fair portrayal and balanced

representation of groups within society and for gender-neutral language.

After internal item reviews are completed, ACT invites external reviewers with knowledge and

experience in those content areas, including practicing secondary and postsecondary

educators, to participate in refining items and verifying that they should elicit evidence of the

intended constructs. During external review, every item is independently reviewed by four to six

subject matter experts from across the United States, each of whom has extensive experience

with students at or around the grade levels at which the test content is typically taught. During

the external content review, items are evaluated for content accuracy, item format, and the

effectiveness of language in terms of leveling, precision, and fairness.

Fairness Reviews

Fairness reviews play an essential role in the development of ACT assessments. In order to

help ensure that content is fair, unbiased, and accessible, we conduct external fairness reviews

for all items prior to pretesting and for entire test forms before they become operational. In this

context, “accessible” means that examinees can access the construct measured by the

assessment and accurately demonstrate their construct-relevant knowledge and skills when

responding to test items. Avoiding content that is potentially biased is one important aspect of

accessibility. Chapter 4 describes ACT’s approach to another aspect of accessibility: designing

tests and providing testing accommodations for English learners and students with disabilities.

The external fairness review panel consists of experts in diverse areas of education who have

experience working with diverse populations. Passages and items are reviewed to help verify

that content is not unfair, biased, or insensitive. All comments are reviewed by ACT content

specialists, and appropriate changes are made. For both content reviews and fairness reviews,

we select reviewers so that no one state is overrepresented, because our stakeholders count on

national representation to maintain the comparability of test scores.

2.4.5 Item Tryouts

ACT pretests every item before it appears on an operational form to verify that the item

functions properly—that is, the item is not too easy or difficult, the item contributes to precise

measurement of the intended construct, and there are no problems with the correct response or

distractors. Items and passages that are judged to be acceptable in the review process are

assembled into tryout units (compilations of items and any associated passages). These tryout

units are then appended to paper test booklets administered during Saturday national testing

events. Each examinee is administered a tryout unit from one of the four academic areas

covered by the ACT tests, with the exception of the writing test, which is pretested in a separate

standalone tryout. The tryout unit is sometimes referred to as the fifth test in the ACT battery,

though performance on the tryout items does not affect examinees’ ACT scores. The tryout

units are spiraled so that each unit is administered to a random sample of examinees

participating in a given administration, which helps ensure that the psychometric properties of

the items—especially item difficulty—are comparable across items and that all item statistics

reflect performance from representative samples of examinees.
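
Spiraling is commonly implemented by handing out units in a fixed repeating order, so that consecutive examinees receive different units and each unit reaches a comparable cross section of the testing population. The sketch below illustrates that general idea only; the unit labels, examinee IDs, and the function `spiral_assign` are hypothetical, and the manual does not specify ACT's exact assignment procedure.

```python
from collections import Counter
from itertools import cycle


def spiral_assign(examinee_ids, unit_ids):
    """Assign tryout units in a repeating order (unit 1, 2, ..., k, 1, 2, ...)."""
    return dict(zip(examinee_ids, cycle(unit_ids)))


examinees = [f"E{n:03d}" for n in range(1, 11)]   # hypothetical examinee IDs
units = ["ENG-A", "MATH-A", "READ-A", "SCI-A"]    # hypothetical tryout unit labels

assignment = spiral_assign(examinees, units)
unit_counts = Counter(assignment.values())        # sample sizes differ by at most one
```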

Item Analysis of Tryout Units

Item analyses are performed on the tryout units. For a given unit, the sample is divided into

low-, medium-, and high-performing groups by the individuals’ scores on the ACT test in the

same content area (taken at the same time as the tryout unit). The cutoff scores for the three

groups are the 27th and the 73rd percentiles in the distribution of those scores. These

percentiles maximize the critical ratio of the difference between the mean scores of the upper

and lower groups, assuming that the standard error of measurement in each group is the same

and that the scores for the entire examinee population are normally distributed (Millman &

Greene, 1989).
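
The grouping step can be sketched as follows. The function name `split_groups` is hypothetical, and two details are assumptions made for the example: percentiles are computed with NumPy's default linear interpolation, and scores exactly at a cutoff are placed in the outer group.

```python
import numpy as np


def split_groups(scores, lower_pct=27, upper_pct=73):
    """Label each examinee low, medium, or high using the 27th and 73rd percentile cutoffs."""
    scores = np.asarray(scores)
    low_cut, high_cut = np.percentile(scores, [lower_pct, upper_pct])
    labels = np.where(
        scores <= low_cut, "low", np.where(scores >= high_cut, "high", "medium")
    )
    return labels, (low_cut, high_cut)


# Invented example: one examinee at each scale score from 1 to 36.
scores = np.arange(1, 37)
labels, cutoffs = split_groups(scores)
group_sizes = {name: int((labels == name).sum()) for name in ("low", "medium", "high")}
```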

Proportions of students in each of the groups correctly answering each tryout item are

tabulated, as are the proportions in each group who select each of the incorrect options. The

biserial and point-biserial correlation coefficients of each tryout item are also computed.

Item analyses identify statistically effective test items. Items that are either too difficult or too

easy are eliminated or revised for future item tryouts, as are items that fail to discriminate

between students of high and low educational achievement (as measured by their

corresponding ACT test scores). The biserial and point-biserial correlation coefficients, as well

as the differences between proportions of students answering the item correctly in each of the

three groups, are used as indices of the discriminating power of the tryout items.
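
A small sketch of the tabulation described above: the proportion of each performance group selecting each option, and the difference in proportion correct between the high and low groups. The data, the answer key, and the function `option_proportions` are invented for illustration.

```python
from collections import Counter


def option_proportions(selected, groups):
    """Proportion of each performance group choosing each response option."""
    options = sorted(set(selected))
    table = {}
    for name in ("low", "medium", "high"):
        chosen = [s for s, g in zip(selected, groups) if g == name]
        counts = Counter(chosen)
        table[name] = {opt: counts[opt] / len(chosen) for opt in options}
    return table


# Invented responses for one tryout item, four examinees per group.
groups = ["low"] * 4 + ["medium"] * 4 + ["high"] * 4
selected = ["A", "C", "B", "D", "B", "A", "B", "C", "B", "B", "B", "A"]
KEY = "B"  # hypothetical correct option

table = option_proportions(selected, groups)
upper_lower_difference = table["high"][KEY] - table["low"][KEY]
```

Here the proportion choosing the keyed option rises from 0.25 in the low group to 0.75 in the high group, the pattern expected of an item that discriminates well. A distractor chosen more often by the high group than by the low group would warrant a closer look.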

Additionally, differential item functioning (DIF) analysis is conducted on the tryout data. DIF can

be described as a statistically significant difference between the odds of a certain group (the

focal group) answering the item correctly and the odds of a comparison group (the reference

group) answering the item correctly when students in the two groups have similar levels of

achievement with respect to the content being tested. Items exhibiting DIF that is large in

magnitude and statistically significant are examined by a diverse panel of external fairness

reviewers, who evaluate whether there is a content-based explanation for the DIF.
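
The passage above describes DIF in terms of odds for matched groups but does not name a specific statistic. One widely used approach that fits this description is the Mantel-Haenszel procedure, sketched below as an assumption rather than as ACT's documented method. The function name and the counts are invented, and the significance test that would accompany the estimate is omitted.

```python
from math import log


def mantel_haenszel(strata):
    """Common odds ratio across matched score levels, plus the delta-scale transform.

    Each stratum is (ref_correct, ref_incorrect, focal_correct, focal_incorrect)
    for examinees at one level of the matching score.
    """
    numerator = denominator = 0.0
    for ref_right, ref_wrong, focal_right, focal_wrong in strata:
        n = ref_right + ref_wrong + focal_right + focal_wrong
        if n == 0:
            continue  # skip empty score levels
        numerator += ref_right * focal_wrong / n
        denominator += ref_wrong * focal_right / n
    alpha = numerator / denominator
    return alpha, -2.35 * log(alpha)  # negative delta: item is harder for the focal group


# Invented counts: equal odds in both groups at every score level (no DIF).
no_dif_alpha, no_dif_delta = mantel_haenszel([(20, 20, 10, 10), (30, 10, 15, 5)])

# Invented counts: reference-group odds are three times the focal-group odds.
dif_alpha, dif_delta = mantel_haenszel([(30, 10, 10, 10)])
```

An odds ratio near 1 (delta near 0) indicates that matched examinees in the two groups have similar odds of answering correctly; values far from 1 would be candidates for the fairness review described above.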

Each item is reviewed following the item analysis. ACT staff members scrutinize items flagged

for statistical reasons or DIF to identify possible problems. In some cases, items may be revised

and sent through the tryout process again. The review process also provides feedback that

helps to improve the quality of future items.

2.4.6 Assembly of New Forms

Items that are judged acceptable in the review process following item tryouts are placed in an

item pool. Preliminary forms of the ACT tests are constructed by selecting from this pool items

that match the content and statistical specifications (described in Chapter 3).

Table 2.2 displays the distributions of item difficulty levels on 8 forms administered during the

2021–2022 academic year. In addition, mean point-biserial correlations and completion rates

are reported. Table 2.2 indicates that the ACT forms included a small number of items with p-

values falling outside the desired range of 0.15–0.89 for mathematics and 0.20–0.85 for English,

reading, and science. Such items were slightly easier or slightly more difficult than expected

based on data from the item tryout stage.

The completion rate is an indication of whether a test is speeded for a group of students. A test

is considered speeded if many students do not have sufficient time to answer the items in the

time allotted. The completion rate reported in Table 2.2 for each test is the average completion

rate for 8 national test dates during the 2021–2022 academic year. The completion rate for each

test is computed as the average percentage of examinees who answered all of the last five

items.
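
The completion rate defined above can be sketched in a few lines. The response data are invented, `None` is an assumed marker for an omitted item, and the function name `completion_rate` is hypothetical.

```python
from statistics import fmean


def completion_rate(responses, last_n=5):
    """Percentage of examinees who answered every one of the last `last_n` items."""
    finished = sum(
        all(answer is not None for answer in row[-last_n:]) for row in responses
    )
    return 100 * finished / len(responses)


# Invented seven-item forms; None marks an omitted item.
form_a = [
    ["A", "C", "B", "D", "A", "B", "C"],
    ["B", "C", "B", "D", "A", None, None],   # ran out of time: not counted as complete
    ["A", None, "B", "D", "C", "B", "A"],    # early omit only: still counted as complete
    ["A", "C", "B", "A", "A", "B", "C"],
]
form_b = [
    ["D", "C", "B", "D", "A", "B", "C"],
    ["A", "A", "B", "C", "A", "D", "C"],
]

rates = [completion_rate(form_a), completion_rate(form_b)]
mean_completion_rate = fmean(rates)  # averaged across forms, as in Table 2.2
```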

Table 2.2. Difficultyᵃ Distributions and Mean Discriminationᵇ Indices for ACT Test Items, 2021–2022

Statistic                     English  Mathematics      Reading      Science
Difficulty Range
  .00–.09                        0.0%         0.0%         0.0%         0.0%
  .10–.19                        0.3%         4.0%         0.3%         0.3%
  .20–.29                        1.0%        10.2%         2.2%         4.4%
  .30–.39                        3.8%        12.7%         4.1%        10.3%
  .40–.49                        8.8%        16.0%         8.4%        14.7%
  .50–.59                       17.7%        18.1%        19.7%        20.3%
  .60–.69                       23.5%        15.0%        31.3%        22.8%
  .70–.79                       26.0%        16.0%        20.9%        15.6%
  .80–.89                       16.0%         7.7%        12.5%        11.3%
  .90–1.00                       2.8%         0.2%         0.6%         0.3%
No. Itemsᶜ                        600          480          320          320
Mean Difficulty                  0.66         0.53         0.63         0.58
Mean Discrimination              0.43         0.43         0.43         0.42
Mean Completion Rateᵈ             94%          94%          96%          97%

ᵃ Item difficulty is the proportion of examinees who correctly answered the item.

ᵇ Item discrimination is the point-biserial correlation coefficient, which is also known as the item-total correlation.

ᶜ Each test form consists of 75 items for English, 60 for mathematics, 40 for reading, and 40 for science.

ᵈ Completion rate is the percentage of examinees who answered all of the last five items (averaged across forms).

2.4.7 Content and Fairness Review of Test Forms

The preliminary versions of the test forms are subjected to several reviews to ensure item

quality and that the overall test forms meet content and statistical specifications and exemplify

best practices supporting fair and accessible testing. ACT staff performs the first review. Items

are checked for content accuracy and conformity to ACT style. The items are also reviewed to

ensure that they are free of clues that could allow test-wise students to answer the items

correctly even though they lack the required subject-area knowledge or skills. All ACT test forms

go through an external content review. Each form is reviewed by four to six educators from

around the United States, each of whom has extensive experience with students at or around

the grade levels at which the test content is typically taught. These reviews follow a process

similar to the item development external content review. In addition to focusing on individual

items, however, the reviewers also consider the quality of the form as a whole. They judge the

form’s distributions of content and cognitive complexity to make sure that there is no over- or

under-representation in any category. Reviewers also look for the presence of cluing between

items and other issues that could lessen the usefulness of the resulting scores.

Additionally, all newly developed ACT forms must go through external fairness reviews to

support fair, equitable, and inclusive assessments that are accessible to all regardless of

differences in background or perspective. As with the earlier fairness review, reviewers are

experts in diverse areas of education who have experience working with diverse populations. At

this stage, reviewers examine individual items and passages, but they also consider the

preliminary form as a whole. That form should be balanced in multicultural and gender

representation. While it is impossible, given the limited amount of material in each test form, to

represent every group in every form, a good-faith effort to represent diversity should be

discernible.

After the external reviews are complete, ACT summarizes the results. All comments from the

consultants are reviewed by ACT content specialists, and appropriate changes are made to the

test forms. Whenever significant changes are made, items and/or passages are replaced and

are again reviewed by the appropriate consultants and by ACT staff. If no further changes are

needed, the test forms are prepared for publishing.

2.4.8 Review Following Operational Administration

After each operational administration, item analysis results are reviewed for any anomalies,

such as substantial changes in item difficulty and discrimination indices between tryout and

operational administrations. Only after all anomalies have been thoroughly checked and the

final scoring key approved are score reports produced. Examinees may challenge any items

they feel are questionable. Once a challenge to an item is raised and reported, the item is

reviewed by content specialists in the content area assessed by the item. In the event that a

problem is found with an item, actions are taken to eliminate the influence of the problem item

as necessary and appropriate. In all cases, each person who challenges an item is sent a letter

indicating the results of the review.

Also, after each operational administration, differential item functioning (DIF) analysis is

conducted on the test data. The procedure currently used for the analysis is the Mantel-

Haenszel common odds ratio procedure (MH), which is also used during the pretest item

analysis. The examinees’ scores on each item are analyzed using the procedure to identify

evidence of potential item bias. Items with MH statistics exceeding certain tolerance levels—

determined based on pre-established criteria—are flagged. The flagged items can then be

reviewed by content specialists for possible explanations of the MH results. In the event that a

problem is found with an item, actions can be taken to eliminate the influence of the problem

item.
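
As a rough illustration of the statistic behind the MH procedure, the sketch below computes the Mantel-Haenszel common odds ratio from examinee-level records, matching examinees on total score. The function name, the record layout, and the use of each total score as its own matching stratum are assumptions for illustration. The flagging tolerance levels are not given in this manual and are not implemented here.

```python
import math
from collections import defaultdict

def mh_common_odds_ratio(records):
    """Mantel-Haenszel common odds ratio for one item.

    `records` is an iterable of (group, total_score, item_correct) tuples,
    where group is "ref" or "focal" (a hypothetical layout for this sketch).
    """
    # Build one 2x2 table per matching stratum: counts of [correct, incorrect].
    tables = defaultdict(lambda: {"ref": [0, 0], "focal": [0, 0]})
    for group, total, correct in records:
        tables[total][group][0 if correct else 1] += 1

    numerator = 0.0
    denominator = 0.0
    for table in tables.values():
        a, b = table["ref"]    # reference group: correct, incorrect
        c, d = table["focal"]  # focal group: correct, incorrect
        n = a + b + c + d
        numerator += a * d / n
        denominator += b * c / n

    # The ratio is undefined when no stratum contributes to the denominator.
    return numerator / denominator if denominator else math.nan
```

A value near 1 indicates similar odds of a correct answer for matched examinees in both groups. Values above 1 indicate that matched reference-group examinees had higher odds of answering correctly, and values below 1 indicate the reverse.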

Table 2.3 lists the number of ACT items that exhibited DIF according to the MH procedure for

forms administered during the 2021–2022 academic year based on Wisconsin students taking

the state-sponsored ACT administration. Analyses were conducted to compare item

performance for female and male students as well as racial/ethnic groups. Table 2.3 indicates

which group was favored by the DIF, which means that the group performed better than

expected on the item when controlling for performance on the test overall. Note that although

DIF is statistical evidence that an item may be biased, approximately 5% of items are expected

to be flagged even when there is truly no DIF. In general, observed DIF flagging rates are near or

below the 5% rate expected when no DIF is present.

Table 2.3. ACT Test Items Exhibiting DIF based on 2022 Wisconsin Student Data

Subject      Reference Group  Focal Group        N of Items  N (no DIF)  Y (DIF)
English      Male             Female             75          75          0
English      Never EL         English Learner    75          75          0
English      White            African-American   75          74          1
English      White            Asian              75          72          3
English      White            Hispanic           75          74          1
English      White            Two or More Races  75          75          0
Mathematics  Male             Female             60          60          0
Mathematics  Never EL         English Learner    60          60          0
Mathematics  White            African-American   60          59          1
Mathematics  White            Asian              60          60          0
Mathematics  White            Hispanic           60          60          0
Mathematics  White            Two or More Races  60          60          0
Reading      Male             Female             40          40          0
Reading      Never EL         English Learner    40          40          0
Reading      White            African-American   40          40          0
Reading      White            Asian              40          40          0
Reading      White            Hispanic           40          40          0
Reading      White            Two or More Races  40          40          0
Science      Male             Female             40          40          0
Science      Never EL         English Learner    40          40          0
Science      White            African-American   40          40          0
Science      White            Asian              40          40          0
Science      White            Hispanic           40          40          0
Science      White            Two or More Races  40          40          0

Note: Information in this table can also be found in Appendix Table 11.
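
The flag counts in Table 2.3 can be compared with the 5% rate expected when no DIF is present. The short Python sketch below transcribes the counts from the table, reading the Y column as the number of flagged items per comparison (an interpretation of the table, since N and Y sum to the number of items). The variable names are illustrative only.

```python
# Items per subject test and the six focal groups compared in Table 2.3.
ITEMS = {"English": 75, "Mathematics": 60, "Reading": 40, "Science": 40}
FOCAL_GROUPS = ["Female", "English Learner", "African-American",
                "Asian", "Hispanic", "Two or More Races"]

# Flagged item counts (Y column), in the same order as FOCAL_GROUPS.
FLAGGED = {
    "English":     [0, 0, 1, 3, 1, 0],
    "Mathematics": [0, 0, 1, 0, 0, 0],
    "Reading":     [0, 0, 0, 0, 0, 0],
    "Science":     [0, 0, 0, 0, 0, 0],
}

def flag_rate(subject):
    """Flagged items as a share of all item-by-comparison analyses for a subject."""
    return sum(FLAGGED[subject]) / (ITEMS[subject] * len(FOCAL_GROUPS))

total_flagged = sum(sum(counts) for counts in FLAGGED.values())
total_comparisons = sum(n * len(FOCAL_GROUPS) for n in ITEMS.values())
overall_rate = total_flagged / total_comparisons

for subject in ITEMS:
    print(f"{subject}: {flag_rate(subject):.2%}")
print(f"Overall: {total_flagged} of {total_comparisons} ({overall_rate:.2%})")
```

Under this reading, 6 of 1,290 item-by-comparison analyses were flagged, which is well below 5%.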

2.5 Test Development Procedures for the Writing Test

This section describes the procedures for developing essay prompts for the ACT writing test.

These include many of the same steps used to develop the multiple-choice tests.

2.5.1 Prompt Writers

ACT writing prompts are produced by internal content specialists. ACT writing specialists have

broad professional experience in secondary and postsecondary classrooms and in the field of

writing assessment.

2.5.2 Prompt Construction

Prompts developed for the writing test provide topics with enough complexity and depth that

examinees can write thoughtful and engaging essays. Topics are carefully chosen so that they

are neither too vast nor too simplistic and do not require specialized prior knowledge. In

constructing prompts, ACT writing specialists take into account that a student must be able to

respond within the 40-minute time constraint of the test.

2.5.3 Content and Fairness Review of Prompts

After writing test prompts are developed and refined by ACT writing specialists, the prompts go

through a rigorous review process with external experts. These fairness and bias experts

carefully review each prompt to ensure that neither the language nor the content of a prompt will

be offensive to a test taker and that no prompt will disadvantage any student from any

geographic, socioeconomic, or cultural background. Reviewers also help ensure that prompts

are accessible and engaging to students by evaluating prompt content in relation to student

knowledge, experience, and interests.

2.5.4 Field Testing of Prompts

ACT conducts a special field test study periodically to evaluate new ACT writing prompts and to

select those suitable for operational use. Students from across the United States—from rural

and urban settings, small and large schools, and public and private schools—write responses to

the new prompts, which are then read and scored by ACT-trained readers.

Prompts are evaluated from both content and statistical perspectives to ensure that scores

(reported on a scale of 2 to 12) are comparable across different test forms and different

administrations. In each field test study, anchor prompts and new prompts are administered to

randomly equivalent groups of approximately 1,000 students per prompt.

Each student takes two prompts, and the order in which the prompts are taken is

counterbalanced. Prompts are spiraled within classrooms so that, across all participating

students, randomly equivalent groups of students take each prompt, with about half of the

students taking a prompt first and the rest taking it second.
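
One simple way to picture a counterbalanced, spiraled assignment is sketched below in Python. It is a hypothetical illustration, not ACT's documented procedure: every ordered pair of prompts is listed, and the pairs are handed out in a repeating cycle down the classroom roster, so each prompt is taken first and second about equally often.

```python
from itertools import cycle, permutations

def spiral_assignments(students, prompts):
    """Assign each student an ordered pair of distinct prompts.

    Cycling through all ordered pairs means that (A, B) and (B, A) both occur,
    which counterbalances the order in which any two prompts are taken.
    """
    ordered_pairs = list(permutations(prompts, 2))
    return {student: pair for student, pair in zip(students, cycle(ordered_pairs))}

# Example with hypothetical student IDs and prompt labels.
roster = [f"student_{i}" for i in range(12)]
assignments = spiral_assignments(roster, ["A", "B", "C"])
```

With 12 students and 3 prompts, each prompt is taken first by 4 students and second by 4 students.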

2.5.5 Review of Field Tests and Operational Administration

Once scoring of the new writing test prompts has been completed, the prompts are statistically

analyzed to judge their acceptability. ACT applies the acceptability criteria after examining the

relationships among scores on newly field-tested prompts and older (anchor) prompts.

Specifically, the 2-to-12 score distributions should align, and there should be students scoring at

the top of the score scale. Also, equating results should show that equating errors are within

expected ranges at all score points, and the raw-to-scale score conversion tables, which are

used to generate scores (from 1 to 36) that contribute to the ACT ELA score, exhibit desirable

properties (see Chapter 6.2 for more information about writing equating).

2.6 ACT Scores

This section briefly introduces the scores generated from student responses to the ACT test.

Chapter 5 provides additional information about these scores and ACT score reports. This

section concludes with a summary of ACT policies concerning scoring appeals and inquiries.

2.6.1 ACT Scale Scores

For each test section on the ACT (English, mathematics, reading, and science), the raw scores

(number of correct multiple-choice responses) are converted to scale scores ranging from 1 to

36. The Composite score is the average of the four content test scale scores rounded to the

nearest whole number (fractions of 0.5 or greater round up). The minimum Composite score is

1; the maximum is 36. See Chapter 6 for more details about the creation and maintenance of

the 1-to-36 ACT scales.
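
The Composite rounding rule can be shown with a minimal Python sketch. The function name is illustrative. Note that Python's built-in round() rounds halves to the nearest even number, so the sketch uses floor(mean + 0.5) to round fractions of 0.5 upward as described above.

```python
import math

def composite_score(english, mathematics, reading, science):
    """Average of the four 1-to-36 scale scores, with fractions of 0.5 or more rounded up."""
    mean = (english + mathematics + reading + science) / 4
    return math.floor(mean + 0.5)

# Scale scores of 22, 23, 22, and 23 average 22.5, which rounds up to 23.
example = composite_score(22, 23, 22, 23)
```

The built-in round(22.5) would return 22 in this case, which would not match the stated rule.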

If the student took the writing test, the student’s essay is read and scored independently by two

trained raters. Essays are scored analytically—that is, on the basis of traits in the essay that

correspond to four domains of writing identified in the scoring rubric: Ideas and Analysis,

Development and Support, Organization, and Language Use and Conventions. Each reader

rates an essay on a scale ranging from 1 to 6 for each of the four domains. The sum of the

readers’ ratings for each domain is the domain score, reported on a scale ranging from 2 to 12.

The subject-level writing test score, also 2 to 12, is the rounded average of the four domain

scores. Writing scores are converted to a 1-to-36 scale only for the purpose of calculating the

ELA score; the 1-to-36 writing scores are not reported.
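
The writing score arithmetic described above can be sketched in Python as follows. The function name and dictionary layout are illustrative, and the sketch assumes that an average ending in 0.5 rounds up, as it does for the Composite score. This section does not state the rounding rule for writing explicitly.

```python
import math

DOMAINS = ("Ideas and Analysis", "Development and Support",
           "Organization", "Language Use and Conventions")

def writing_scores(reader1, reader2):
    """Return (domain scores, writing score) from two readers' 1-to-6 ratings.

    Each argument maps a domain name to that reader's rating.
    """
    for ratings in (reader1, reader2):
        if any(not 1 <= ratings[d] <= 6 for d in DOMAINS):
            raise ValueError("each rating must be between 1 and 6")
    # Domain score: sum of the two readers' ratings, on a 2-to-12 scale.
    domain_scores = {d: reader1[d] + reader2[d] for d in DOMAINS}
    # Writing score: rounded average of the four domain scores (assumed half-up).
    average = sum(domain_scores.values()) / len(DOMAINS)
    return domain_scores, math.floor(average + 0.5)

# Hypothetical ratings from two readers, in DOMAINS order.
r1 = dict(zip(DOMAINS, (4, 4, 3, 4)))
r2 = dict(zip(DOMAINS, (4, 3, 4, 4)))
domain_scores, writing = writing_scores(r1, r2)
```

Here the domain scores are 8, 7, 7, and 8, which average 7.5 and give a writing score of 8 under the assumed rounding.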

2.6.2 STEM and ELA Scores

Since fall 2015, ACT has reported a Science, Technology, Engineering, and Math (STEM)

score, which is calculated as the average of the 1-to-36 mathematics and science scale scores