Wisconsin Educator Effectiveness System

User Guide for Teachers, Teacher Supervisors, and Coaches

Developed by

Jennifer Kammerud

Director, Licensing, Educator Advancement and Development Team

Cynthia Hoffman

Licensing, Educator Advancement and Development

Jacob Hollnagel

Licensing, Educator Advancement and Development

Laura Ruckert

Licensing, Educator Advancement and Development

Courtney Spitz

Licensing, Educator Advancement and Development

This guide is adapted from the prior version developed by Katharine Rainey (formerly with DPI), Steven Kimball, Kris Joannes, Jessica Arrigoni, and Herbert G. Heneman, III (UW-Madison, Wisconsin Center for Education Research), Billie Finco (formerly with CESA 4), and Allen Betry (formerly with CESA 9).

Wisconsin Department of Public Instruction

Jill K. Underly, PhD, State Superintendent

Madison, Wisconsin


This document is available from:

Licensing, Educator Advancement and Development

Jennifer Kammerud, Director

Wisconsin Department of Public Instruction

201 West Washington Avenue

Madison, WI 53703

(608) 267-3750

https://dpi.wi.gov/ee

© August 2024 Wisconsin Department of Public Instruction

The Wisconsin Department of Public Instruction does not discriminate on the basis of sex, race, color, religion, creed, age, national origin, ancestry, pregnancy, marital status or parental status, sexual orientation, or ability and provides equal access to the Boy Scouts of America and other designated youth groups.

Table of Contents

Five Principles of the WI Learning-Centered Educator Effectiveness System

Teacher Evaluation Overview

The Educator Effectiveness (EE) Cycle

Appendix A: Research Informing Teacher Evaluation & the Framework for Teaching

Appendix B: Professional Conversations and Coaching

Appendix C: Observations and Evidence

Appendix D: SLO Resources

Appendix E: Strategic Assessments: Evidence to Support the SLO Process

Appendix F: Features of the 2022 Danielson Framework for Teaching

Appendix G: Continuous Improvement

Appendix H: Tips for Conducting Required Conferences

Appendix I: Sample 3-Year Cycle

Appendix J: Legal Reference

Introduction

This guide provides teachers, teacher supervisors, coaches, and peers with the information necessary to plan and conduct learning-centered teacher evaluations.

• Section one briefly describes the five principles of Wisconsin’s (WI) learning-centered Educator Effectiveness (EE) approach.

• Section two provides an overview of the Danielson Framework for Teaching (FfT), the evaluation process, and its elements.

• Section three illustrates the use of the evaluation process as a cycle of continuous improvement across the year.

• Section four summarizes how to use the end-of-cycle conversation to plan for the coming year and move learning forward.

• Optional appendices provide additional information.

WI EE User Guide for Teachers, Teacher Supervisors, and Coaches · AUGUST 2024 1

Five Principles of Wisconsin’s Learning-Centered Educator Effectiveness System

Evaluation must be meaningful to educators for the system to produce growth in professional practice and student learning. Evaluation systems have the greatest potential to improve both practice and student outcomes when the following learning-centered conditions are in place:

1. A foundation of trust that encourages educators to take risks and learn from mistakes;

2. A common, research-based framework of effective practice;

3. Implementation of and regular reflection on educator-developed, data-based goals;

4. Cycles of continuous improvement guided by timely and specific feedback through ongoing collaboration; and

5. Integration of evaluation processes with school and district improvement strategies.

Appendix A provides research references for the five principles and other aspects of the Wisconsin EE process.


Creating and maintaining these conditions helps move an evaluation system to a learning-centered, continuous improvement process. This section explains each principle of learning-centered evaluation and its purpose in the Wisconsin Educator Effectiveness (EE) System.

Foundation of Trust

Evaluators should be transparent by discussing all of the following with their teachers:

• The evaluation criteria and rubric the evaluator will use to evaluate the teacher;

• The evaluation process, or how and when the evaluator will observe the teacher’s practice;

• The use of evaluation results; and

• Any remaining questions or concerns.

The evaluator plays an essential role in building a foundation of trust. Evaluators should encourage teachers to stretch themselves in ways that foster professional growth and to set rigorous goals for both student learning and their own professional growth. The evaluator supports the continuous improvement process by reinforcing that learning happens through effort and mistakes as well as successes.

Training and regular calibration of evaluators on the accurate use of the practice rubric provide teachers with a basic assurance about the accuracy of evaluators’ observations and feedback.

Evaluators should cultivate a growth mindset through open conversations to help teachers build on strengths and learn from mistakes.

A foundation of trust is critical to the implementation of the EE system. Each of the following principles relies on and serves to reinforce the foundation of trust. More information: Building a Foundation of Trust.

A Common, Research-Based Framework

Wisconsin uses the 2022 Framework for Teaching by Charlotte Danielson as the common, research-based framework of teacher professional practice for the EE System. The Framework for Teaching is a performance rubric consisting of four performance levels that helps teachers and their observers identify current practice and map a path for growth based on reflection. It provides a common language for best teaching practices and allows for deep and transparent professional conversations about practice. The framework can be accessed from the Danielson Group website.


Data-Driven, Educator-Developed Goals

In the Wisconsin EE System, teachers are active participants in their own evaluations and professional growth. Teachers set goals, called student learning objectives (SLOs), based on analyses of classroom, school, and other data, as well as self-reviews of their own practice using the Framework for Teaching. These goals have the most impact when they connect and mutually reinforce teacher practice and student learning (e.g., “I will _____ so that students can _____.”). Information and feedback relevant to developing and strengthening goals can be solicited from evaluators, teachers’ peers, school staff, and parents. Teachers and their evaluators, peers, or coaches regularly check in on goals throughout the evaluation cycle to reflect on progress and adjust.

Educator-developed goals provide a common focus point for teachers and evaluators, aligning the professional growth needs of the teacher, the academic needs of students, and the priorities of the school, district, and community.

Continuous Improvement Supported by Professional Conversations

A learning-centered approach facilitates ongoing improvement through regularly repeated continuous improvement cycles. Continuous improvement cycles represent intentional instruction and involve goal setting, collection of evidence related to goals, reflection, and revision. People sometimes refer to this process as “Plan-Do-Study-Act” or “Plan-Do-Check-Act.” Each step in a continuous improvement cycle should seamlessly connect to the next step and be repeated as needed.

Professional conversations (i.e., coaching and timely feedback from evaluators, observers, coaches, or peers) strengthen continuous improvement cycles. With effective training, evaluators, coaches, and peers can establish a shared understanding and common language with teachers about best practices through the Framework for Teaching and help ensure consistent and accurate use of the rubric when selecting evidence, identifying levels of practice, and having professional conversations to facilitate professional growth. See Appendix B for additional information about professional conversations.


Integration with District and School Priorities

Self-identified goals based on rigorous data analysis help personalize the continuous improvement process and create ownership of the results. The improvement process becomes strategic when it aligns with identified school and district priorities.

Wisconsin designed the EE System to support principal, teacher, and school effectiveness by using measures, structures, and improvement cycles that are consistent and integrally connected with each other. For example, the Wisconsin Framework for Principal Leadership includes a focus on leadership components and critical attributes that relate to principals’ support of effective teaching through actions like school staffing decisions, professional development, teacher evaluation activities, and support of collaborative learning opportunities. In another example of this connectedness, the Student Learning Objective (SLO) processes for teachers and principals mirror each other.


Teacher Evaluation Overview

This section provides an overview of the various aspects of the Wisconsin Educator Effectiveness (EE) system for teachers. It covers 1) a brief overview of the Danielson Framework for Teaching, 2) the essential elements of the Wisconsin EE System evaluation process, and 3) the continuous improvement process of the EE system.

Overview of the Danielson Framework for Teaching

Wisconsin uses Charlotte Danielson’s 2022 Framework for Teaching (FfT). This framework is designed to support educator learning and growth and is supported by research.

Structure of the Framework for Teaching

The FfT organizes 22 components of teaching into four thematic domains. Five or six distinct skills (i.e., components) define each domain. Together, the domains represent all aspects of a teacher’s responsibilities and form a sequence that illustrates how teachers plan, teach, reflect, and apply their knowledge in the process of teaching and learning (see Appendix C).


Levels of Performance

Levels of performance exist for each of the 22 components and provide a roadmap to elevate teaching. Teachers, evaluators, and coaches should study the levels of performance for each component to gain a solid understanding of the evaluation rubric. Each component contains Elements of Success across each level of performance. Critical attributes define the Elements of Success at each level of performance and provide guidance for distinguishing among the components, levels of performance, and Elements of Success. Appendix C provides a list of suggested evidence sources to support assessments of levels of performance.

Overview of the Educator Effectiveness (EE) Process

Wisconsin designed its learning-centered educator effectiveness process as a cycle of continuous improvement. The EE System and its processes are ongoing and based on continuous improvement, with each year building on the last.

The EE System defines the elements, processes, and methods for completing a teacher’s evaluation, but Wisconsin law defines the timeframe for completing an evaluation. Wis. Stat. § 121.02(1)(q) requires that “all certified school personnel” be evaluated, in writing, “at the end of their first year and at least every 3rd year thereafter.” As a result, teachers typically complete an EE System evaluation on a regular cycle of one to three years.

The essential elements of a complete EE cycle, whether the cycle lasts just one year or up to three, are described below:

Evaluator Certification and Calibration

New evaluators of teachers (or those with expired certification) must certify in the use of the Danielson Framework for Teaching using the DPI-provided certification tool.

Certified evaluators must calibrate using the DPI-provided calibration tool at least once annually.

Evaluators must certify to demonstrate their competency in using the Framework for Teaching in evaluation, and they must calibrate to prevent their assessment of teacher practice from drifting in accuracy or fairness over time.

Orientation

Teachers must receive EE orientation training in their first year with the district. EE orientation ensures both evaluators and teachers have a basic understanding of the WI EE System and any variations in local EE policy.


Self-Review

Teachers complete a self-review using the Framework for Teaching to identify areas of strength and growth for the period of the evaluation. The self-review informs goal setting, observations and evidence collection, and professional conversations with evaluators and peers.

Observations

Observations provide evaluators with necessary evidence of practice to inform feedback, goal progress, and the overall evaluation of teacher practice.

• One formal, announced observation, including a pre-observation conference to establish expectations and a post-observation conference to provide feedback.

• And at least two mini-observations with post-observation feedback or 5-6 mini-observations with a pre-observation conference to establish expectations and feedback delivered regularly and promptly after each observation.

Conferences

Required conferences provide regular opportunities for professional conversations, feedback, and goal monitoring between teachers and their evaluators. Conferences should be conducted among peers or with coaches when a teacher is not being directly evaluated by their evaluator.

• Planning Session with the evaluator to discuss the self-review and any proposed Student Learning Objectives or Professional Practice Goals and establish focal points and expectations for the evaluation period. The evaluator must complete the Planning Session with the teacher in the year the EE cycle will close. In other years, teachers should meet with coaches or peers to conduct Planning Sessions.

• Mid-Year Review to discuss progress toward goals, feedback on evidence collected thus far on practice and student outcomes, and any adjustments to instructional strategies or the SLO. Like the Planning Session, the evaluator must complete the Mid-Year Review in the year that the EE cycle will be completed. Coaches or peers should support teachers in other years.

• End-of-Year (or Cycle) Conference to discuss progress toward goals, feedback on overall evidence of practice and student learning, and accomplishments and areas for growth moving forward.


Goals

Teachers write and complete at least one Student Learning Objective.

SLO goal writing and monitoring provide teacher agency in the evaluation process, alignment between evaluation and student learning needs, and alignment between student learning needs and teacher practice.

Elements like evaluator certification and calibration and EE orientation occur outside the regular evaluation cycle and must be completed before evaluation begins. Teachers and their evaluators complete the remaining elements (self-review, observations, conferences, and goal setting) during a typical EE cycle.

The table in Appendix I provides an example of the essential EE elements when conducting the process over a three-year cycle.

Evidence in the EE System

Both the teacher and evaluator collect evidence of practice and student growth throughout the year. Teachers and their evaluator or peer should have discussed, agreed upon, and planned for evidence collection at the Planning Session. See Appendix C for evidence collection suggestions. Evaluators also collect evidence during observations. More information about evidence collection during observations is included in the next section.

Artifacts

Artifacts provide evidence of professional practice that may not be apparent through observation alone. The evidence identified in artifacts demonstrates levels of professional practice related to the components of the Framework for Teaching (FfT) or quality indicators of the SLO rubric. Evaluators and teachers use evidence from individual artifacts to inform goal monitoring and feedback, as well as discussions about levels of performance for related FfT components. Table 2 in Appendix C provides example evidence sources and indicators related to an FfT component.

Student Learning Objective (SLO) Evidence

The teacher plans for and executes practices to accomplish the SLO by monitoring student progress and revising strategies as needed. Teachers collect data related to the SLO within mini-improvement cycles across the SLO interval through the assessment methods identified in the SLO. Critically, teachers, evaluators, and peers must set aside time to analyze and reflect on ongoing data and results and identify ways to appropriately adjust instruction to improve student learning. These conversations can help identify what is working and what is not.


The Educator Effectiveness (EE) Cycle

This section provides a step-by-step walkthrough of the Wisconsin EE System process for teachers, including steps taken by both teachers and their evaluators.

Orientation

Steps to complete the orientation:

1. Provide training on EE to new and new-to-district teachers.

2. Make available and regularly update local EE resources for teachers.

School districts must provide teachers (and evaluators) who are new to the district with an orientation to the local EE System. Orientation ensures teachers and their evaluators share a common understanding of these items:

• The evaluation criteria of the Framework for Teaching (FfT);

• The evaluation process and the ongoing continuous improvement cycles informed by evidence of teacher practice collected throughout;

• The use of evaluation results; and

• Any remaining questions or concerns.


During orientation, the school or district identifies resources available to teachers to answer questions about their evaluation process (e.g., process manuals, district handbooks, district training) and highlights key components of the evaluation process that support the teacher in continuous improvement (e.g., structures for regular data review, reflection, action planning, mentors, and coaches).

Orientation provides an opportunity for evaluators to build a foundation of trust. Administrators should encourage teachers to set goals that foster professional growth. Evaluators may want to communicate that learning often happens through struggle and error. Evaluators can communicate this effectively by modeling and sharing their own continuous learning processes and how they have learned from their own struggles and mistakes.

The Self-Review

Steps to complete the self-review:

1. Review the 22 Framework for Teaching components.

2. Identify levels of performance for each of the 22 components using reflection questions and the critical attributes of the rubric.

3. Document the self-review to share with the evaluator for future planning sessions, goal setting and monitoring, and identification of focus components.

Teachers reflect on their past performance on each of the 22 components, using the critical attributes to help identify and differentiate their practice. Teachers document their self-review to provide a foundation for the Planning Session with their evaluator, helping them identify areas of practice to focus on during observation and evidence collection, SLO goal writing, and professional development opportunities over the course of their evaluation cycle.

Experienced educators (not on plans of improvement) can use the self-review as evidence of practice for most FfT components, creating a core set of at least three components to focus on during observations and evidence collection throughout the evaluation cycle.

Evaluators and teachers should collaboratively decide 1) whether to use the self-review as evidence of practice, 2) which components to focus on during the EE cycle, and 3) how many components to focus on (no fewer than three).

Completing an annual self-review helps provide focus for the goal-setting processes, professional conversations, and evidence collection. Self-review is required as part of a teacher’s evaluation and should occur at least once per evaluation cycle, ideally at the beginning of each new cycle. The teacher’s self-review is based on the FfT and should focus on the critical attributes, rather than just the components’ performance level descriptors. Teachers who analyze and reflect on their own practice understand both their professional strengths and their areas in need of development. Such reflection provides an opportunity for the teacher to consider how the needs of the students in an individual classroom connect to the larger goals of the school.


Educator-Developed Goals: The SLO

Teachers create a student learning objective (SLO) annually, developing it at the beginning of the school year. The SLO contains two main components: 1) the data, rationale, and academic goal; and 2) the identification of instructional strategies that focus on the job duties of the teacher as outlined in the Framework for Teaching (FfT).

The teacher develops the goal after self-reflection and analyses of past student learning and professional practice data. The teacher should develop goals distinctive to their professional practice and relevant to school priorities. As with any continuous improvement or inquiry cycle, data analysis and goal development serve as the initial steps.

Prior to the 2022-23 school year, DPI required teachers and principals to also write a professional practice goal (PPG) to accompany the SLO. As of the 2022-23 school year, teachers and principals no longer need to write a separate PPG. Instead, they may pursue their professional practice goals through the instructional or leadership strategies in the SLO (identifying, implementing, and iterating on those strategies), or they may combine the goals. Districts wishing to implement a standalone PPG may continue to do so.

The Student Learning Objective (SLO)

Teachers write at least one SLO each year. Within the SLO process, the teacher works collaboratively with peers, coaches, and evaluators to:

• Determine an essential learning target for the year (or appropriate interval);

• Review student data to identify differentiated student starting points and growth targets associated with the learning target for the year;

• Review personal instructional practice data (i.e., self-reflection and feedback from prior years’ learning-centered evaluations) to identify strong instructional practices as well as practices to improve upon to support students in meeting the growth targets;

• Determine authentic and meaningful methods to assess students’ progress toward the targets, as well as how to document resulting data;

• Review evidence of student learning and progress, as well as evidence of the teacher’s own instructional practices;

• Reflect and determine whether evidence of instructional practices points to strengths that support students’ progress toward the targets or to instructional practices that need reconsideration;

• Adjust accordingly;

• Repeat regularly.


At the end of each year, teachers reflect on their students’ progress and their own practice across the year using the SLO rubric (see SLO rubric in Appendix D). Teachers draw upon this reflection, in addition to reflections on practice, to inform student and practice goals for the coming year.

At the end of an EE cycle, the teacher’s evaluator reviews all SLOs and the teacher’s continuous improvement practice across the EE cycle. The evaluator uses the SLO rubric to provide feedback at the critical attribute level, identifying areas of strength and creating a strategic plan for any areas needing growth.

Steps to Writing the Student Learning Objective (SLO)

The SLO writing process addresses the following key components:

• Baseline Data and Rationale

• Learning Content/Grade Level

• Student Population

• Evidence Sources

• Time Interval

• Targeted Growth

• Instructional Strategies and Supports

Teachers should reference the SLO Quality Indicator Checklist as they write and monitor the SLO (see SLO Quality Indicator Checklist in Appendix D). Teachers can also use the checklist to support collaborative conversations regarding the SLO. The Writing a Quality SLO resource on the DPI website includes how-to walkthroughs of each of these key SLO planning considerations, using a specific example.

Baseline Data and Rationale

Teachers explain their chosen SLO focus and justify their rationale through narrative and data. The rationale begins with a review of past school and student data to gain a clear understanding of the school and student learning reality and culminates with a review of previous years’ classroom student learning data.

Analysis of and reflection on prior classroom data help teachers identify their own strengths and challenges related to improving student learning. Reviewing trends allows the teacher to make connections between their own instructional practices and recurring trends in student progress.

Importantly, elementary and middle school teachers must include school-wide reading scores in their analysis, and high school teachers must include school graduation rates. Analysis of these required data may not reveal a specific need or warrant basing the SLO on them, but state statute requires teachers to at least include these data in their baseline analysis (see Appendix J: Legal Reference).

Learning Content/Grade Level

Teachers link the focus of the SLO to the appropriate academic content standards and confirm that the focus content is taught or reinforced throughout the interval of the SLO. SLOs should focus on high-level skills or processes rather than rote or discrete learning.

Student Population

A thorough data analysis will almost always point to more than one potential area of focus for the goal’s student population. Ultimately, the teacher has discretion in choosing the population and the appropriately responsive focus for the SLO.

A teacher’s ability to set and achieve goals for improved levels of student learning closely aligns with experience and instructional expertise, and teachers will be at varying degrees of readiness to engage in this process. Those newer to the work may find it helpful to focus on a subgroup of students as the population for the SLO.

Evidence Sources (Assessment)

Using grade-level and school-centered assessment practices, the teacher analyzes the progress the students make relative to the identified growth goals.

• Interim assessment. An interim assessment is designed to monitor progress by providing multiple data points across the instructional period. The interim assessment does not have to be a traditional test. Teachers can use rubrics to measure skills displayed through writing, performance, portfolios, etc. Teachers use interim assessments strategically (baseline, mid-point, and end of interval) across the SLO interval to measure student growth. Near the beginning of the interval, the teacher administers an interim assessment to the students identified as the population for the SLO.

Teacher-designed or teacher-team-designed assessments are appropriate for use within the SLO. Interim assessments can be performance-based as measured by a rubric and do not need to be traditional or standardized tests. Most importantly, the assessment must align with the content or skills being taught.

• Formative assessment. Teachers also build in methods to monitor student learning throughout the SLO interval. Effective teachers use informal, formative practices in an ongoing way to determine what their students know and can do.


Formative assessment practices serve two functions. They remind teachers to implement the strategies and action steps identified in the SLO, and they allow teachers to regularly monitor student progress and adjust instructional strategies to respond to student needs. Teachers can quickly identify and leverage successful instructional strategies and practices, as well as adjust or abandon less successful or unsuccessful practices. This real-time adjustment within mini-improvement cycles allows teachers to have a greater impact on student learning. Teachers may find it helpful to consult with peers to identify formative ways to monitor student learning throughout the interval.

For more information on strategic assessment systems, see Appendix E.

Targeted Growth

SLO goals reflect anticipated student academic growth over the time students spend with a teacher. To set appropriate, rigorous growth targets, teachers use data, including the baseline interim assessment and historical data, to set an end goal (target) for student learning. Growth is the improvement in, rather than the achievement of, specific knowledge or skills. The target identifies the amount of growth in specific knowledge or skills expected of students, as measured by an identified assessment.
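The difference between growth and achievement can be illustrated with a small calculation. The Python sketch below is a hypothetical example only: the student labels, the assumed 0-100 rubric scale, the scores, and the differentiated growth targets (larger targets for lower starting points) are invented for illustration and are not DPI requirements or part of the SLO rubric. Growth is computed as the end-of-interval interim score minus the baseline interim score and compared with each student’s target.

```python
# Hypothetical illustration of growth-based SLO targets.
# All names, scores, and targets are invented; the 0-100 scale is an assumption.
from dataclasses import dataclass


@dataclass
class StudentResult:
    name: str
    baseline: int       # score on the baseline interim assessment
    end: int            # score on the end-of-interval interim assessment
    target_growth: int  # expected improvement, set from the starting point


def growth(result: StudentResult) -> int:
    """Growth is the improvement from baseline, not the final score."""
    return result.end - result.baseline


def met_target(result: StudentResult) -> bool:
    """A student meets the target when growth reaches the expected amount."""
    return growth(result) >= result.target_growth


results = [
    StudentResult("Student A", baseline=35, end=58, target_growth=20),
    StudentResult("Student B", baseline=60, end=72, target_growth=15),
    StudentResult("Student C", baseline=80, end=90, target_growth=10),
]

for r in results:
    print(f"{r.name}: growth {growth(r)}, target {r.target_growth}, met: {met_target(r)}")

met = sum(met_target(r) for r in results)
print(f"{met} of {len(results)} students met their growth target")
```

In this illustration, Student B ends the interval with a higher score than Student A but does not meet the growth target, while Student A does. The target measures improvement from each student’s starting point rather than the final score alone.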

Time Interval

The duration of the SLO, referred to as the interval, extends across the entire time that the learning focus of the SLO occurs. For many teachers, the interval will span an entire school year (e.g., modeling in 3rd grade math, argumentative writing in U.S. history). For others, the interval might last a semester or cover multiple years.

Instructional Strategies

This section of the SLO provides the plan of action the teacher will use to meet their goal. Strategies and supports reflect new actions that will ultimately result in higher levels of learning for students. School leaders should support teachers’ effective implementation of identified instructional strategies to achieve successful student growth. District and school leaders can support strategies by aligning professional development, district and school improvement plans, and local policies to support, rather than hinder, successful implementation of the identified strategies.


SLO Goal Statement (SMART Criteria)

Teachers must focus on student academic learning and should use the SMART goal approach when

constructing an SLO. A SMART goal is simply a type of goal statement written to include the

following specific components:

• Specific - Identify the focus of the goal.

• Measurable - Identify the evidence source.

• Attainable - Determine whether a goal can reasonably be achieved considering all

relevant factors.

• Results-based - The goal statement should include the baseline and target for all

students/groups covered by the SLO.

• Time-bound - The goal has a clear beginning and end time.

Planning Session and Ongoing Conversations

Wisconsin’s learning-centered process provides multiple opportunities for collaborative,

professional conversations. Teachers meet with their evaluators formally at the beginning, middle,

and end of the year, but these conversations should also happen informally throughout the year

with the evaluator, peers, and/or team members.

The planning session serves as the first formal check-in and allows for conversations around goal

development and goal planning. At the planning session, teachers receive support and feedback

regarding their SLO and related processes. These collaborative conversations encourage reflection

and promote a culture of professional growth.

Teachers prepare for these collaborative conversations by sharing their SLO with their peer or

evaluator. When preparing for a planning session, teachers reflect on their self-review, SLO, and

professional goals, and identify where they need support. Evaluators or peers prepare by reviewing

the SLO in advance to develop feedback related to the goal and to identify questions that will foster

collaborative discussion and reflection. Peers and evaluators should use a coaching protocol to

structure these professional, collaborative conversations (see Appendix B: Professional

Conversations).

An effective coaching protocol has three key elements:

1) Validate: Identify strengths of the teacher. What makes sense about their self-

reflection and proposed SLO? What can be acknowledged?

2) Clarify: Paraphrase to check for and demonstrate understanding, and ask questions to

gather information, clarify reasoning, and eliminate confusion.

3) Stretch and apply: Raise questions or pose statements to foster thinking, push on beliefs

and stretch goals and/or practices.

During the Planning Session, the evaluator and teacher discuss and agree on evidence sources for

the SLO goal. The evaluator and teacher also plan possible observation opportunities and related

artifacts that will provide adequate evidence for the evaluation.


Reflection and Refinement

Following the Planning Session, teachers reflect further on their goals, make refinements as

needed, and then begin to implement their instructional strategies. Teachers revisit the SLO

over the course of the year.

Observations

Observations provide a shared experience between a teacher and their evaluator (or peer

reviewer). Observations allow evaluators to see teachers in action and directly obtain evidence of

practice. Skilled observers understand that conducting high-quality observations requires ongoing

training and calibration so that teachers receive accurate, growth-oriented feedback. Training and

calibration also ensure that evidence collected from observations is used to accurately assess

current professional practice, and that the FfT is used as a tool to improve practice.

Classroom observations take place over the course of the EE cycle. Multiple observations occur to

collect evidence of teaching practice and provide teachers with ongoing feedback. Ideally, the

educator receives regular and ongoing feedback from peers, coaches, and team members

throughout the year and across the EE cycle.

Announced Observation

Steps to completing an announced observation:

• Evaluators schedule the announced observation with the teacher.

• Evaluators schedule a pre-observation conference (for discussion) and

a post-observation conference (for feedback).

• Evaluators conduct the pre-observation conference with the teacher to

discuss the lesson plan, SLO or instructional strategy information, and

any other relevant and useful context.

• Evaluators conduct the observation and collect evidence.

• Evaluators complete evidence collection tasks (such as aligning evidence

statements to rubric components or critical attributes) and reflect on the

observation to generate feedback for the teacher.

• Evaluators conduct the post-observation conference with the teacher

and provide feedback for improvement.

The announced observation provides a comprehensive picture of teaching and an opportunity for

formative feedback. A minimum of one formal, announced observation must occur during the EE

cycle. This is typically one 45- to 60-minute classroom observation (generally the length of a

class period).


A pre-conference and a post-conference support formal, announced observations:

• Pre-conference: The pre-conference allows teachers to provide context for the

observation and provides essential evidence related to a teacher’s skill in planning a

lesson. The pre-conference discussion allows the teacher to identify potential areas that

might benefit from feedback and sets the stage for the evaluator to better support the

teacher following the observation.

• Post-conference: The post-conference provides immediate, actionable feedback to the

teacher. Wiggins (2012) defines actionable feedback as neutral (judgment free), goal-

related facts that provide useful information about what specifically to do differently

next time. The post-conference discussion allows the evaluator to learn about the

teacher’s thinking and reflection about the lesson, what went well, and how the lesson

could be improved. The coaching protocol (see Appendix B: Professional Conversations)

can help the evaluator or peer to plan questions that both support and stretch the

teacher’s thinking and instructional practices.

Mini-Observation

Mini-observations are short, unannounced observations, lasting about 15 minutes. Typically, four to five mini-observations occur over the course of a full, three-year EE cycle.² Mini-observations, combined with the announced observations, allow for a more detailed and timelier portrait of teaching practice and offer multiple opportunities for feedback and improvement.

Feedback needs to be formative: actionable and aligned with the FfT critical attributes embedded

within each component.

² Unless the school or district chooses to use more frequent, but shorter, mini-observations across the EE cycle. For options related to type and frequency of observations, see Table 4, Appendix C, Observations.


Mid-Year Review and Ongoing Conversations

The mid-year review is the second of three formal check-ins built into the Wisconsin learning-

centered EE process. At the mid-year review, teachers converse with their evaluator about

collected or observed evidence of professional practice and student growth, as well as resulting

reflections and strategy adjustments made to date.

Teachers prepare for the mid-year review by reviewing progress toward goals based on evidence

collected, assessing strategies used to date, and identifying any adjustments to the goal or

strategies used. They then provide their peer or evaluator with a mid-year progress update. The

professional conversation should include a candid discussion about the teacher’s learning process

and practice. A discussion based solely on completing forms will not impact the learning of teachers

or students.

Peers and evaluators prepare for the mid-year review by reviewing the teacher’s progress toward

goals, including evidence collected and strategies used to date, as well as developing formative

feedback questions related to the goals. Evaluators or peers should consider using a coaching

protocol (Appendix B) to structure mid-year conversations.

Reflection and Revision

The mid-year review culminates with reflection, the identification of strengths and weaknesses,

and appropriate adjustments to both strategies and growth goals, as necessary.

Closing Out the EE Cycle

This section describes the process of closing out an evaluation cycle for a teacher, including steps

conducted by the evaluator or peer and the teacher to:

• Finalize evidence collection;

• Complete and evaluate the SLO goal;

• Engage in professional conversations at the end-of-cycle conference; and

• Plan for next steps.

End-of-Cycle Conference and Conversation

Steps to completing the end-of-cycle conference:

1. The teacher finalizes all SLO and professional practice evidence collection and

shares it with their evaluator. The teacher must conduct a final assessment of

students using an evidence source identified in the SLO.

2. The teacher and the evaluator review SLO and professional practice evidence

in advance of the conference to inform their professional conversation.


3. The evaluator assesses and prepares to share level of practice information for

the SLO and FfT with the teacher at the conference.

4. The evaluator conducts the end-of-cycle conference with the teacher, shares

summary information, engages in a professional conversation focused on feedback

and improvement, and plans for the next cycle.

The end-of-cycle conference provides an opportunity for deep learning, reflection, and planning

for next steps. The conference provides the teacher and evaluator an opportunity to align future

goals and initiatives at the building and classroom level. Teachers prepare for the end-of-cycle

conference by sharing results of their SLO and practice aligned to the FfT with their evaluator

or peer.

Completing the SLO

After collecting and reviewing evidence, teachers self-score each of the six SLO critical attributes

using the SLO rubric and quality indicators checklist (Appendix D). Assessing the SLO requires the

teacher to reflect on evidence of the student population’s progress relative to the target, as well as

their own SLO process. The teacher’s engagement in the SLO process and their self-reflection

become evidence of the teacher’s ability to meaningfully reflect on their practice and its impact on

student progress. The evaluator will use this as evidence to support feedback and discussion at the

End-of-Cycle Conference with the teacher.

The evaluator reviews all available SLOs and, using the SLO rubric and quality indicators checklist (Appendix D), identifies the level of performance that best describes practice across years for each of the six SLO critical attributes. Evaluators may assign a single holistic score by identifying the level of performance selected for most of the six SLO critical attributes.

Evidence Collection

At the end of each year, teachers review the evidence collected during the cycle and consider the

relationship of the evidence to their SLO.

Teachers in all years of the cycle ensure that they have collected evidence that demonstrates their

progress and successes in achieving their SLO. SLO evidence will include the results of the final

interim assessment given to the population identified in the SLO.

Evaluators and peers prepare for the End-of-Cycle Conference by reviewing goal results, including

evidence collected, and planning feedback related to the goals. Preparing ahead of time will help

the evaluator or peer align feedback related to goals and professional practice to structure the

End-of-Cycle Conference more effectively and efficiently.


During the conference, the evaluator and teacher collaboratively review evidence, goal results, and

possible next steps. The evaluator shares identified levels of performance for the SLO and relevant

FfT components and provides feedback. By discussing feedback at the critical attribute level, the

evaluator and teacher not only identify areas of focus (components) for the coming EE cycle, but

also develop a strategic plan based on actionable changes (strengths to leverage and areas to

improve). Note that evaluators must evaluate all 22 components, but the WI EE System does not

require numeric scoring. Evaluators can opt to keep the evaluation feedback at the critical

attribute level.

Reflections and Next Steps

Reflection includes the identification of both performance successes and areas for performance

improvement. Teachers should review performance successes to identify factors that contributed

to success, which of those factors they can control, and how to continue those in the next cycle.

Likewise, teachers should reflect on areas that need improvement to identify possible root causes

and explore teaching strategies to address those challenges in the future.

Appendix 21

Appendix A:

Research Informing the Teacher Evaluation Process

and the Framework for Teaching

Trust

Trust between educators, administrators, students, and parents is an important organizational

quality of effective schools.

Bryk, A.S., & Schneider, B. (2002). Trust in schools: A core resource for improvement. New York,

NY: Russell Sage Foundation.

Tschannen-Moran, M., & Hoy, W. (2000). A Multidisciplinary Analysis of the Nature, Meaning, and Measurement of Trust. Review of Educational Research, 70(4), 547-593.

Goal setting

Public and private sector research emphasizes the learning potential of goal setting.

Locke, E. & Latham, G.P. (1990). A theory of goal setting and task performance. New York:

Prentice Hall.

Latham, G.P., Greenbaum, R.L., & Bardes, M. (2009). Performance Management and Work Motivation Prescriptions. In R.J. Burke & C.L. Cooper (Eds.), The Peak Performing Organization (pp. 33-49). London: Routledge.

Locke, E.A., & Latham, G.P. (2013). New Developments in Goal Setting and Task Performance. London: Routledge.

Observation and Evaluation training

Research and evaluation studies on teacher evaluation have pointed to the need for multiple

observations, evidence sources, and training to provide reliable and productive feedback.

Archer, J., Cantrell, S., Holtzman, S.L., Joe, J.N., Tocci, C.M., & Wood, J. (2016). Better feedback for better teaching: A practical guide to improving classroom observations. San Francisco, CA: Jossey-Bass.

Gates Foundation (2013). Measures of effective teaching project, Ensuring fair and reliable measures of Effective Teaching: Culminating findings from the MET Project’s three-year study. Available at: http://k12education.gatesfoundation.org/teacher-supports/teacher-development/measuring-effective-teaching/


Coaching, Support and Feedback

Aguilar, E. (2013). The Art of Coaching: Effective Strategies for School Transformation. San Francisco, CA: Jossey-Bass.

Bloom, G., Castagna, C., Moir, E., & Warren, B. (2005). Blended coaching: Skills and strategies to

support principal development. Thousand Oaks, CA: Corwin Press.

Danielson, C. (2016). Talk about Teaching: Leading Professional Conversations. Thousand Oaks,

CA: Corwin Press.

Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to

achievement. New York: Routledge.

Kluger, A.N., & DeNisi, A. (1996). The effects of feedback interventions on performance: A

historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological

Bulletin, 119(2), 254-284.

Knight, J. (2016). Better Conversations. Thousand Oaks, CA: Corwin Press.

Kraft, M.A., Blazar, D., & Hogan, D. (2016). The Effect of Teacher Coaching on Instruction and Achievement: A Meta-Analysis of the Causal Evidence. Brown University Working Paper.

Lipton, L., & Wellman, B. (2013). Learning-focused supervision: Developing professional expertise in standards-driven systems. Charlotte, VT: MiraVia, LLC.

Wiggins, G. (2012, September). Seven Keys to Effective Feedback. Educational Leadership, 70(1), 10-16. Retrieved from https://www.ascd.org/el/articles/seven-keys-to-effective-feedback

Framework for Teaching

Danielson, C., & McGreal, T.L. (2000). Teacher evaluation to enhance professional practice. Alexandria, VA: Association for Supervision and Curriculum Development.

Danielson, C. (2007). Enhancing professional practice: A framework for teaching, 2nd Edition.

Alexandria, VA: ASCD.

Gates Foundation (2013). Measures of effective teaching project, Ensuring fair and reliable

measures of Effective Teaching: Culminating findings from the MET Project’s three-year study.

Available at: https://usprogram.gatesfoundation.org/What-We-Do/K-12-Education


Milanowski, A. T., Kimball, S.M., & Odden, A.R. (2005). Teacher accountability measures and links

to learning. In R. Rubenstein, A.E. Schwartz, L. Stiefel, and J. Zabel (Eds.), Measuring school

performance & efficiency: Implications for practice and research, 2005 Yearbook of the American

Education Finance Association. Larchmont, NY: Eye on Education.

Sartain, L., Stoelinga, S. R., & Brown, E.R. (2011). Rethinking teacher evaluation in Chicago:

Lessons learned from classroom observations, principal-teacher conferences, and district

implementation. Consortium on Chicago School Research, University of Chicago.

Taylor, E.S., & Tyler, J.H. (2012). The effect of evaluation on teacher performance. American

Economic Review, 102(7), 3628-3651.

Student Learning Objectives

Kanold, T. (2011). Five Disciplines of PLC Leaders. Bloomington, IN: Solution Tree Press.

Reeves, D. (2002). The Leader’s Guide to Standards: A Blueprint for Educational Equity and Excellence. San Francisco, CA: Jossey-Bass.


Appendix B:

Professional Conversations and Coaching

Timely, specific, and ongoing feedback is critical to a learning-centered system. Wisconsin

designed the EE process to grow and develop teachers and school leaders. Whether acting as an

evaluator or peer, professional conversations present the opportunity to provide feedback that

can change practice and improve outcomes for students. Charlotte Danielson (2016) stresses

the importance of professional conversations, stating, “Of all the approaches available to

educators to promote teacher learning, the most powerful (and embedded in virtually all others)

is that of professional conversations” (p. 5). While the intent of feedback from an evaluator may

differ from feedback coming from a peer or coach, the way in which the participants engage in

dialogue is the same. Likewise, while most recognize feedback as part of a formal observation

and evaluation process, feedback can be equally effective in informal instances.

Formal Feedback Opportunities within the EE Process

Whenever possible, evaluators and peers should review data from classroom observations

and goal information prior to meeting with an educator. Prior review of the data for the

Planning, Mid-Year, and End-of-Cycle Conferences allows the evaluator to 1) ensure effective

use of meeting time, 2) plan for reflective questions, and 3) identify potential resources and

determine next steps. Some find it helpful to use a coaching protocol to plan for and lead these

conversations. Appendix Figure 1 below represents a protocol with components common to

coaching models.

Appendix Figure 1: Coaching Protocol


Professional conversations between teacher and evaluator or coaching peer should be both

flexible and responsive to the needs of the teacher. Appendix Figure 1 shows that the various

stages of the coaching protocol do not happen sequentially. Instead, participants move between

the stages in whatever way is appropriate and needed for productive conversation.

Opening the conversation with validation statements affirms what is going well and validates

the skills and expertise the teacher brings to their practice and the conversation. Clarifying

questions help the evaluator understand the teacher’s thinking while providing additional

context and evidence.

Since the goal of a learning-centered system is to grow teachers professionally, the stretch and

apply portion of the conversation is meant to challenge and explore existing dispositions and

beliefs, build autonomy, encourage reflective practice, and cultivate meaningful commitment to

change. Example statements for each of the EE conferences are provided below.

Planning (or peer review) session:

Validate - “I see you have done a thorough analysis of your school and classroom data.

You clearly have dug into the Framework for Teaching and have been thinking about…”

Clarify - “Tell me more about your focus of student engagement. You have included the

idea of learning ways to engage these students in the Strategies section of your SLO.

What does that look like?”

Stretch and Apply - “Looking at your assessment data, what learning gaps do you see in

your student population? What might you do to make the content more accessible to

your ELL students?”

Mid-Year Conference:

Validate - “Your lesson planning consistently details how you expect to monitor student

learning progress both through ongoing formative steps during instruction and at key

points across lessons.”

Clarify - “What are some ways you have incorporated what you are learning from those

assessments into your instruction?”

Stretch and Apply - “How has the fourth-grade team been using formative assessments

to inform their real-time instruction?” “What might you do to engage the students who

have already mastered the content and are ready for more?”


End-of-Cycle Conversations:

Validate - “You’ve done a lot of specific reflecting about your SLO.”

Clarify - “If I’m understanding correctly, you are finding it difficult to find common time

to meet with your literacy PLC to achieve some of your goals. What might be another

way to arrive at the solution?”

Stretch and Apply - “You’ve talked about the challenges you faced by using the post-

course assessment as the growth measure for your SLO. What assessment approaches

might you use in your next SLO planning?” “How might those changes improve student

outcomes?” “What are your next steps to make that happen?”

Developmentally Appropriate Supports

Evaluators and peers use the evidence collected in classroom observations and related artifacts

and alignment of that evidence to the critical attributes of the FfT to determine the current

performance level of the teacher. Moving educator practice from a basic to distinguished level in

one feedback session is unrealistic. The goal should be to move the teacher forward in

developmentally appropriate increments so as not to overwhelm them. If evidence supports

current practice at the basic level, then feedback designed to move toward the proficient level is

appropriate.

Remember that a teacher may perform at different levels for each critical attribute within a

component. For example, one critical attribute within component 2c: Maintaining Purposeful

Environments may currently be basic and need to move to proficient, another critical attribute in

the same component may be proficient and need to move to distinguished, and a third in the

same component may be distinguished and not need to move. With this information, the

evaluator and teacher can create a strategic plan for moving practice forward. See Appendix

Table 1 on the next page.


Appendix Table 1: Critical Attributes Used in Feedback (Component 2c: Maintaining Purposeful Environments)

Description

Basic: Classroom routines and procedures, established or managed primarily by the teacher, support opportunities for student learning and development.

Proficient: Shared routines and efficient procedures are largely student-directed and maximize opportunities for student learning and development.

Critical Attribute: Purposeful Collaboration

Basic: Students are partially engaged in group work.

Proficient: Students are productively engaged during small group work, working purposefully and collaboratively with their peers.

Critical Attribute: Student Autonomy and Responsibility

Basic: Routines and procedures partially support student autonomy and assumption of responsibility.

Proficient: Routines and procedures allow students to operate autonomously and take responsibility for their learning.

Critical Attribute: Equitable Access to Resources and Supports

Basic: Resources and supports are managed somewhat efficiently and effectively, though students may not have equitable access.

Proficient: Resources and supports are deployed efficiently and effectively; all students are able to access what they need.

Critical Attribute: Non-Instructional Tasks

Basic: Non-instructional tasks are completed with some efficiency, but instructional time is lost.

Proficient: Most non-instructional tasks are completed efficiently, with little loss of instructional time.

In this example, the evaluator uses evidence collected in the observation to engage the teacher

in conversations related to the degree to which time was spent in transition and the degree to

which the students were responsible for their learning. For example:

Validate: “It was evident that the students are familiar with and respond quickly

to the visual and auditory transition cues you are using. They were actively

involved in the activity within two minutes of transition.”

Clarify: “As you signaled a transition, the time it took for groups to settle and

engage with the practice problems varied (show data). Was that aligned with

planning for timing and pacing?”

Stretch and Apply: “Students within the groups completed tasks at different

times, and those who finished early were asked on two occasions to find some

quiet work. What might you build into the independent practice portion of your

lesson to challenge these advanced learners?”


Building Autonomy

Effective professional conversations support the differentiated needs of the teacher. Coaching

models (Aguilar, 2013; Hall and Simeral, 2008; Kraft et al., 2016) describe varying degrees of

coaching support, ranging from more direct, instructional coaching to just acting as a guide for

reflective thinking. Appendix Figure 2, below, demonstrates the continuum of coaching

supports and their relationship to increasing teacher autonomy. Early in the coaching

relationship, the coach may direct most of the professional conversation. As the relationship

progresses, the teacher becomes more autonomous in their practices and reflection and begins

to lead more of the conversations.

Appendix Figure 2: Continuum of Supports

Instances where the teacher is feeling challenged or is unable to reflect or construct ideas

independently (perhaps in the case of a new teacher) call for a direct approach. In these

instances, the evaluator or peer leads the conversation and offers direct support.

Example: “Maria became less resistant when you presented the rationale…”

Over time, and when appropriate, evaluators or peers engage the teacher in a more collegial

exchange of ideas and feedback. Rather than direct statements, they engage the teacher in a

mutual exploration of data. As the teacher becomes more of an equal contributor, autonomy

increases.

Example: “Let’s explore the student work, and analyze the results together…”

Prior planning for professional conversations helps to build a foundation of trust as well as

teacher capacity. Evaluators or peers nurture a teacher’s capacity for reflection and continued

learning by preparing for the conversation ahead of time and developing probing questions that

encourage the teacher to reflect. Increased autonomy becomes evident in the connections the

teacher makes between student learning and their instructional practice. As teacher autonomy

develops, teachers primarily lead the conversations, with the evaluator or peer encouraging

deeper analysis and reflection.

Example: “The analysis of students’ work indicates your students with learning

disabilities are still performing well below grade level on this standard. How does this

influence your planning and delivery of content? What would make the content more

accessible to these students?”


Appendix C:

Observations and Evidence

Tips and Considerations for Conducting Classroom Observations

Focus on what is important and immediate:

• To maximize impact and relevance of feedback, ask teachers what they most desire

feedback on and what practices they would most like the evaluator to observe.

• An evaluator can draw upon previous evidence of practice (past EE cycles or

observations) to identify areas for growth.

• The evaluator can focus efforts during the observation on finding evidence of the

identified components.

Manipulate time or remain invisible:

• The presence of an evaluator may affect how the teacher or the teacher’s students

behave. Evaluators can reduce this effect by using a variety of observation methods,

including asking teachers to record themselves in action and submit videos for their

evaluators to review. This method can reduce anxiety for the teacher and can

also ease scheduling and capacity constraints for the principal by removing the requirement

for the evaluator to observe the practice in real time.

Use High-Leverage Evidence Sets:

High-leverage evidence sets result from intentional and strategic collection and use of

observations and artifacts. These evidence sources differ from a random collection of artifacts

or observations retroactively aligned to rubric components (e.g., lists of parent phone contacts

without describing the impetus or results; lesson plans with no context or reflection; PD session

attendance record with no agenda or evidence of utilizing the learning).

High-leverage evidence sources differ from isolated or random evidence sources that may

provide little insight about professional practice, contribute insufficient information to evaluate

individual components, and have little strategic value in and of themselves. High-leverage

evidence sources illustrate professional practice as they deeply inform instruction, providing a

rich basis for reflection and growth.

A high-leverage evidence set covers multiple components. Thus, teachers may be able to

collect fewer evidence examples, which can ease the burden for the teacher. Additionally, high-

leverage sets ease the burden of the evaluator, who otherwise must try to figure out what all the

disparate artifacts demonstrate about instruction. Appendix Table 2 on the next page offers

examples of high-leverage evidence sources.


Appendix Table 2: Artifact and Observation Evidence and Associated FfT Components

Evidence from Observations & Artifacts: Lesson plan; assessment used during the related unit or lesson; classroom observation of the lesson; pre- and post-conference conversations addressing the lesson, the assessment, data from the assessment, and next steps; teacher reflections

Relevance to Multiple Components:

1a: Applying Knowledge of Content and Pedagogy

1b: Knowing and Valuing Students

1c: Setting Instructional Outcomes

1d: Using Resources Effectively

1e: Planning Coherent Instruction

1f: Designing and Analyzing Assessments

3c: Engaging Students in Learning

3d: Using Assessment for Learning

Evidence from Observations & Artifacts: Observation of PLC participation during assessment design; formative/summative assessment tools; lesson plan; and reflection

Relevance to Multiple Components:

1c: Setting Instructional Outcomes

1f: Designing and Analyzing Assessments

4d: Contributing to School Community and Culture

4e: Growing and Developing Professionally

4f: Acting in Service of Students

AND may provide evidence toward the SLO process.

Appendix Table 3: Example Evidence Sources for 1f: Designing and Analyzing Assessments

Evidence:

• Evaluator/teacher conversations

• Lesson/unit plan

• Observation

• Formative and summative assessments and tools

Look-Fors:

• Uses assessment to differentiate instruction

• Students have weighed in on the rubric or assessment design

• Lesson plans indicating correspondence between assessments and instructional outcomes

• Assessment types suitable to the style of outcome

• Variety of performance opportunities for students

• Modified assessments available for individual students as needed

• Expectations clearly written with descriptors for each level of performance

• Formative assessments designed to inform minute-to-minute decision-making by the teacher during instruction


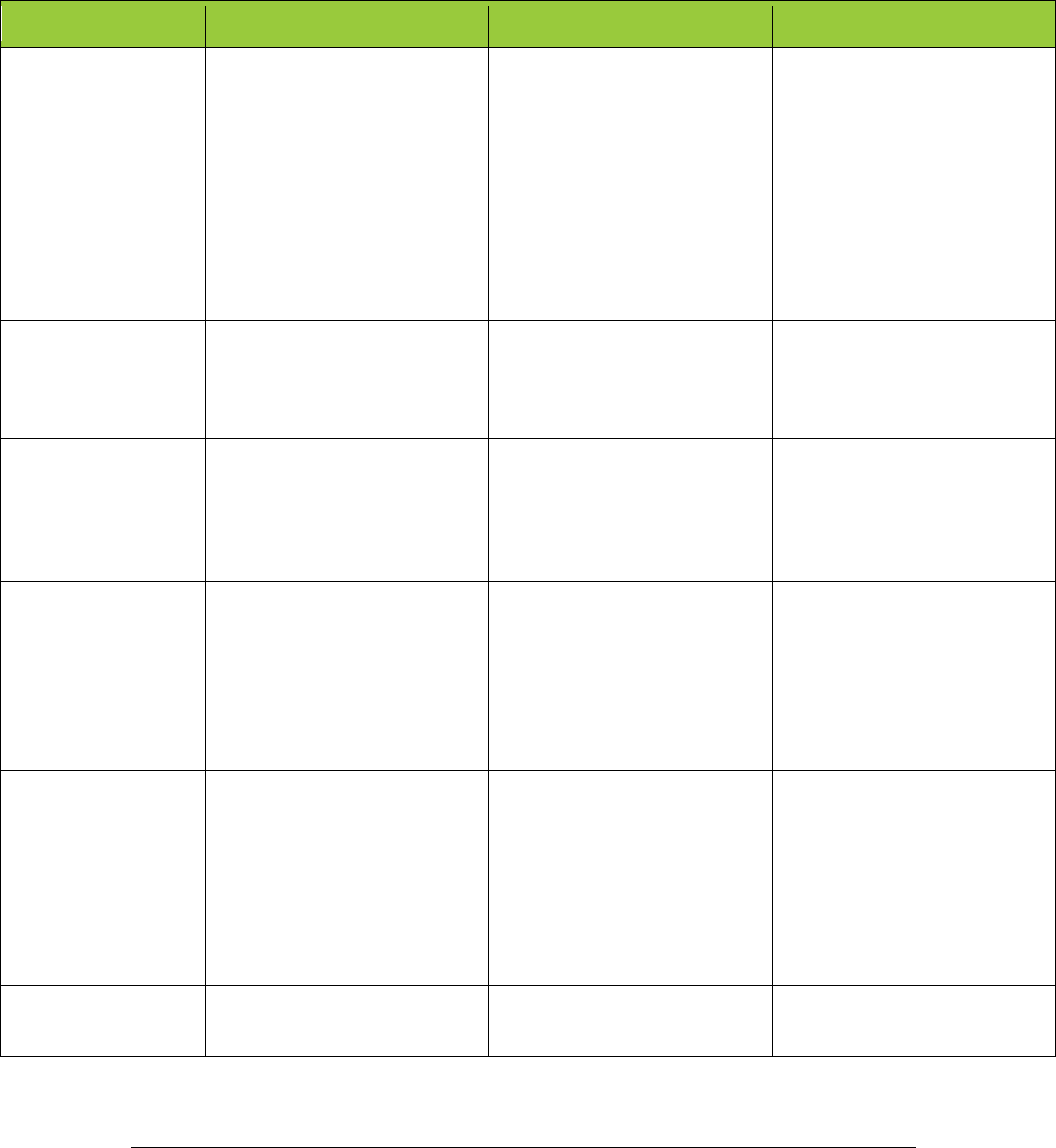

Type and Frequency of Observations & Artifacts

Appendix Table 4, below, outlines the expected type and frequency of observations. Districts have options for completing the required observations, as noted under Options. See also the Tips for Success following the table.

Appendix Table 4: Frequency of Observations

Announced Observation(s)

Definition: An announced, formal observation of the educator by their evaluator to gather evidence of educator practice, approximately the length of a full class session (45-60 minutes).

Options: One (1) full-length, announced observation, or multiple (3-4) unannounced mini-observations equal in total to a full observation.

Specifics:

• Pre-observation conference

• Observation(s)

• Post-observation conference and feedback

Mini-Observations

Definition: Unannounced, informal observations of the educator by their evaluator to gather evidence of educator practice, roughly 15 minutes in length.

Options:

• Required: Two (2) mini-observations (15 minutes each) in addition to the one (1) full-length, announced observation, plus a minimum of one (1) mini-observation per year; or

• Five to six (5-6) mini-observations, plus a minimum of one (1) mini-observation per year.

Specifics: Unannounced observation. Feedback is provided within one week following the observation.

If using more frequent, shorter observations:

• The evaluator and educator still meet before observations begin to identify focus components or practices, rather than to discuss a specific lesson.

• Collaborative conversations based on the observations still occur to plan next steps.

• The total observation time across the cycle should still meet the minimum of 105 to 135 minutes.

Classroom Walk-Through

Definition: Observing a specific idea, theme, trend, initiative, or topic across multiple classrooms or contexts, usually building-wide, as opposed to gathering evidence of individual practice.

Options: 5-10 minutes, as often as the building administrator or other administrator feels is necessary.

Specifics: The evaluator uses a district-created or district-approved tool. Brief feedback after the walk-through is a recommended practice.

Artifacts & High-Leverage Artifact Sets

Definition: Documents or videos that contain evidence of demonstrated educator practice or of the SLO. DPI recommends grouping artifacts into “high-leverage artifact sets” to document evidence contextually and efficiently.

Options:

Per school year:

• Evidence to support the SLO

• Evidence of educator practice

Per Effectiveness Cycle:

• Evidence of all 22 educator practice components

• Evidence of all SLOs completed within the cycle

Specifics: Upload as often as possible.


Observations Tips for Success

Announced and Mini-Observations:

• Observations should produce actionable feedback and generate evidence that is specific to the educator and can be aligned to a component.

• Evaluators or teachers collect artifacts before or after the observation to support it and the related feedback.

• Evidence may come from any part of the observation process (pre- or post-conferences, the observation itself, reflections on the observation).

• Peers may conduct mini-observations for formative feedback purposes.

• Districts may use district-created tools for collecting evidence.

Classroom Walk-Through:

• Supports a continuous improvement model but is not required as part of the EE system.

• Districts may use their own or an adapted walk-through tool.

Artifacts & High-Leverage Artifact Sets:

• No specific artifacts are required by the system. Teachers should consider collecting high-leverage artifacts that support multiple domains and provide a rich demonstration of educator practice and results.

• This process may be teacher- or evaluator-driven.


Component-Related Evidence and Sources

The tables below are designed to help teachers collect evidence in support of their professional practice. They identify indicators related to each component of the Danielson Framework for Teaching and suggest sources that are likely to contain supporting evidence.

Domain 1: Planning and Preparation

1a: Applying Knowledge of Content and Pedagogy

Indicators/Look-Fors:

• Adapting to the students in the classroom

• Scaffolding based on student response

• Teacher use of the vocabulary of the discipline

• Lesson and unit plans reflect important concepts in the discipline and knowledge of academic standards

• Lesson and unit plans reflect tasks authentic to the content area

• Lesson and unit plans accommodate prerequisite relationships among concepts and skills

• Lesson and unit plans reflect knowledge of academic standards

• Classroom explanations are clear and accurate

• Accurate answers to students’ questions

• Feedback to students that advances learning

• Interdisciplinary connections in plans and practice

Evidence/Evidence Source:

Evaluator/teacher conversations

• Guiding questions

• Documentation of conversation (e.g., notes, written reflection)

Teacher/student conversations

Lesson plans/unit plans

Observations

• Notes taken during observation


1b: Knowing and Valuing Students

Indicators/Look-Fors:

• Artifacts that show differentiation and cultural responsiveness

• Artifacts of student interests and backgrounds, learning styles, and out-of-school commitments (work, family responsibilities, etc.)

• Differentiated expectations based on assessment data/aligned with IEPs

• Formal and informal information about students gathered by the teacher for use in planning instruction

• Student interests and needs learned by the teacher for use in planning

• Teacher participation in community cultural events

• Teacher-designed opportunities for families to share their heritages

• Database of students with special needs

Evidence/Evidence Source:

Evaluator/teacher conversations

• Guiding questions

• Documentation of conversation (e.g., notes, written reflection)

Lesson plans/unit plans

Observations

• Notes taken during observation

Optional

• Student/parent surveys

1c: Setting Instructional Outcomes

Indicators/Look-Fors:

• Same learning target, differentiated pathways

• Students can articulate the learning target when asked

• Targets reflect clear expectations that are aligned to grade-level standards

• Checks on student learning and adjustments to future instruction

• Use of formative practices and assessments such as entry/exit slips, conferring logs, and/or writer’s notebooks

• Outcomes of a challenging cognitive level

• Statements of student learning, not student activity

• Outcomes central to the discipline and related to those in other disciplines

• Outcomes permitting assessment of student attainment

• Outcomes differentiated for students of varied abilities

Evidence/Evidence Source:

Evaluator/teacher conversations

• Guiding questions

• Documentation of conversation (e.g., notes, written reflection)

Lesson plans/unit plans

Observations

• Notes taken during observation


1d: Using Resources Effectively

Indicators/Look-Fors:

• Evidence of prior training

• Evidence of collaboration with colleagues

• Evidence of teacher seeking out resources (online or from other people)

• District-provided instructional, assessment, and other materials used as appropriate

• Materials provided by professional organizations

• A range of texts, internet resources, and community resources

• Ongoing participation by the teacher in professional education courses or professional groups

• Guest speakers

• Resources are culturally responsive

Evidence/Evidence Source:

Evaluator/teacher conversations

• Guiding questions

• Documentation of conversation (e.g., notes, written reflection)

Lesson plans/unit plans

Observations

• Notes taken during observation

1e: Planning Coherent Instruction

Indicators/Look-Fors Evidence/Evidence Source

• Grouping of students

• Variety of activities

• Variety of instructional strategies

• Same learning target, differentiated pathways

• Lessons that support instructional outcomes and reflect important concepts

• Instructional maps that indicate relationships to prior learning

• Activities that represent high-level thinking

• Opportunities for student choice

• Use of varied resources

• Thoughtfully planned learning groups